Monitor filesystem events using inotify-tools

# inotify-tools

This is a basic guide to using inotify-tools.

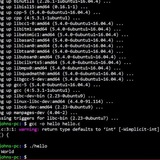

```bash
apt-get install inotify-tools
```

## Initial Command

This is the basic command of inotify-tools.

* `inotifywait` is a part of inotify-tools.

* `-m` monitor for events continuously (don't exit after the first event).

* `-e create` watch for file creation events specifically.

* `/path/to/directory` The directory to monitor.

```bash
inotifywait -m -e create /path/to/directory
```

When a new file is created, `inotifywait` prints a line like this (watched directory, event name, file name):

```
/path/to/directory/ CREATE new_file.txt
```

Capture this output in a script or command to perform actions on the new file.

## Using while loop

```bash
inotifywait -m -e create /path/to/directory | while read -r dir event filename; do
    # Default output is "<watched dir> <EVENT> <file name>"; read splits it
    # into three fields, and the last field keeps any spaces in the file name.
    # Do something with the new file
    echo "New file created: $filename"
done
```

## Additional options

* `-r` Monitor recursively for changes in subdirectories as well.

* `--format %f` Print only the filename in the output.

* `--timefmt '%Y%m%d%H%M%S'` Specify a custom timestamp format (printed wherever `%T` appears in `--format`).
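The options above can be combined so that the loop does not have to parse the default three-column output. This is a minimal sketch, assuming inotify-tools is installed; `handle_new_files` and the watched path are hypothetical names used only for illustration.

```bash
#!/usr/bin/env bash

# handle_new_files: reads one path per line on stdin and reports it.
# (Hypothetical helper; replace the printf with whatever action you need.)
handle_new_files() {
    local path
    # IFS= and -r keep leading spaces and backslashes in the name intact
    while IFS= read -r path; do
        printf 'New file created: %s\n' "$path"
    done
}

# Usage: %w is the watched directory and %f the file name, so each
# output line is a full path.
#   inotifywait -m -q -e create --format '%w%f' /path/to/directory | handle_new_files
```

Because each line holds only the path, file names with spaces survive; names that contain newlines would still be split.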

https://redd.it/1ad1pgp

@r_bash


Create bash scripts 100x faster using a library

# bash-sdk 🔥

A bash library to create standalone scripts.

https://ourcodebase.gitlab.io/bashsdk-docs/

## Features ✨

Some features of bash-sdk:

* OOP-like code 💎.
* Module-based code 🗂️.
* Functions similar to Python's 🐍.
* Standalone script creation 📔.

## Beauty 🏵️

Check out the UI of this CLI project here.

## General 🍷

There are some rules or things to keep in mind while using this library.

The rules are mentioned here.

## Installation 🌀

Just clone it anywhere.

    git clone --depth=1 https://github.com/OurCodeBase/bash-sdk.git

## Modules 📚

These are the modules in the bash-sdk library. You can read about their functions by clicking on them.

* [ask.sh](/docs/ask)
* cursor.sh
* [db.sh](/docs/db)
* file.sh
* [inspect.sh](/docs/inspect)
* os.sh
* [package.sh](/docs/package)
* repo.sh
* [say.sh](/docs/say)
* screen.sh
* [spinner.sh](/docs/spinner)
* string.sh
* [url.sh](/docs/url)

## Structure 🗃️

The file structure of bash-sdk looks like this:

    bash-sdk
    ├── docs          # docs for bash-sdk.
    ├── _uri.sh       # helper of builder.
    ├── builder.sh
    └── src
        ├── ask.sh
        ├── cursor.sh
        ├── db.sh
        ├── file.sh
        ├── inspect.sh
        ├── os.sh
        ├── package.sh
        ├── repo.sh
        ├── say.sh
        ├── screen.sh
        ├── spinner.sh
        ├── string.sh
        └── url.sh

## Compiler 🧭

The compiler combines all the code into a standalone bash file.

    bash builder.sh -i "path/to/input.sh" -o "path/to/output.sh";

* The input file is the file that you are going to compile.
* The output file is the standalone result file.

You can then execute the output file directly, without the bash-sdk library.

## Queries 📢

If you have any questions or doubts related to this library, you can ask [here](https://github.com/OurCodeBase/bash-sdk/issues).

## Suggestion 👌

* Add bash-lsp to your code editor to get autocompletion.
* Use [shellcheck](https://github.com/koalaman/shellcheck) to debug bash code.
* Use the cooked.nvim code editor to get the best compatibility.

## Author 🦋

Created By [@OurCodeBase](https://github.com/OurCodeBase)

Inspired By @mayTermux

https://redd.it/1acumxu


BEE-GENTLE-1ST-BASH-SCRIPT

So, I am looking to make my life a bit simpler. I use nmap and some pentesting labs. The IP of the target always changes, and instead of remembering the IP of the target, it would be nice to just use TARGET in the commands that I run.

I run this file with sudo, and it gives me the ability to append a new target name and IP to the /etc/hosts file,

e.g. target1 10.10.10.101

I tried it and it worked, so I added a command for deleting, which is where I need some guidance and pointers on how to add the delete option. I googled and saw the sed command, but I'm not sure how to incorporate it.

My expectation is to cat the /etc/hosts file and see what's there, then add or delete as needed before working on a new pentest box.

filename: addtarget.sh

    #!/bin/sh
    echo "What is the TARGET # please"
    read TARGET
    echo "Enter IP address please"
    read IP
    # the next three lines are the new ones added to delete
    echo "Enter TARGET # to delete from /etc/hosts"
    read DEL
    sed -i.bak '/target$DEL\'./d' /etc/hosts # will delete lines containing "target."
    echo "Adding $TARGET and its associated $IP address for you"
    echo "$TARGET $IP" >> /etc/hosts

++++++++++++++++++++++

Thank you in advance to this community and any support you can provide me.
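One possible shape for the delete step is sketched below. It is only an illustration under a few assumptions: `HOSTS_FILE`, `del_target` and `add_target` are hypothetical names, the target name contains no regex metacharacters, and the entry is written IP first, since hosts(5) expects `IP-address hostname`. The key difference from the attempt above is the double-quoted sed expression, which lets the shell expand the variable.

```bash
#!/usr/bin/env bash
# Sketch only: HOSTS_FILE defaults to /etc/hosts but can be pointed at a
# scratch file for testing (hypothetical variable, not part of the original).
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"

# del_target NAME: delete every line that ends with NAME as its host name.
del_target() {
    local name="$1"
    # Double quotes let the shell expand ${name}; \$ stays a literal
    # end-of-line anchor for sed. A .bak copy of the file is kept.
    sed -i.bak "/[[:space:]]${name}[[:space:]]*\$/d" "$HOSTS_FILE"
}

# add_target NAME IP: replace any stale entry for NAME, then append a new one.
add_target() {
    local name="$1" ip="$2"
    del_target "$name"
    printf '%s\t%s\n' "$ip" "$name" >> "$HOSTS_FILE"
}

# Usage (as root):
#   add_target target1 10.10.10.101
#   del_target target1
```

Calling `add_target` again with a new IP overwrites the old entry instead of piling up duplicates.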

https://redd.it/1acytdx


Utility that Scans A Bash Script And Lists All Required Commands

#### I'm looking for a utility that scans a bash script and lists all required commands. Some reqs and specs, v00.03.

Do you know of such a beast?

I have not been able to frame a valid web query, other than ones that generate terabytes of cruft.

Barring that, I could use some help with specs.

**Shortcuts?**

* I can safely ignore or disallow command names and functions with embedded spaces.

* Would running the bash '-x' option provide a better basis for the scan, or is the source better?

* Or, what?

* I have a few scripts that write other scripts (templates, with an intervening editing session). I suppose that if I have a utility that can scan a "non-recursive" script, I could use the utility recursively?

**"Specs"**

* I only want the list to include external commands; if Bash internals are included, I would prefer that they be listed separately.

* There are quite a few Bash internals that have external equivalents, e.g., 'echo' and 'test'. I need to be able to distinguish between the two like-named commands. Is a format like '/bin/test', for example, sufficient to distinguish between the two?

* Ignore first <words> followed by '()'

* Ignore everything to the right of a '#' not quoted or escaped.

This gets pretty complicated on multiline quotes with embedded quotes, i.e.:

- \>"...'...'..."<

- \>"...'...'..."<

- \>"...\"...<

- etc.

* First <word> on a line.

* First <word> following a ';'.

* First <word> following a '|'.

* First <word> following a '$(', or '`'.
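As a rough starting point for the specs above, the sketch below takes the first word after each command separator and asks bash's `type -t` whether it is a builtin or an external file. It is deliberately naive and makes several assumptions: `list_commands` is a hypothetical name, GNU sed is available (for `\n` in the replacement), and quoting, here-documents and `case` patterns are not parsed at all, so false positives and misses are expected.

```bash
#!/usr/bin/env bash

# list_commands FILE: print "external NAME" or "builtin NAME" for each
# candidate command word found in FILE (naive scan, see caveats above).
list_commands() {
    local file="$1" word kind
    # 1. drop comment-only lines
    # 2. start a new line after '$(' and after ; | & and backticks
    # 3. turn remaining parentheses into spaces
    # 4. keep the first word of every resulting line
    grep -v '^[[:space:]]*#' "$file" |
        sed -e 's/\$(/\n/g' -e 's/[;|&`]/\n/g' -e 's/[()]/ /g' |
        awk '{ print $1 }' |
        grep -E '^[A-Za-z_./][A-Za-z0-9_./-]*$' |
        sort -u |
        while IFS= read -r word; do
            kind=$(type -t "$word") || continue   # unknown words are skipped
            case "$kind" in
                file)    printf 'external %s\n' "$word" ;;
                builtin) printf 'builtin %s\n' "$word" ;;
            esac
        done
}

# Usage:
#   list_commands myscript.sh
```

For names such as `echo` or `test` that exist both as builtins and as files, `type -a NAME` lists every variant, and writing the full path (for example `/bin/test`) in a script does select the external one.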

https://redd.it/1acparm


bash script developer

Hi, I am a researcher and I have to write some bash scripts for my project, but I am too new to this. Could you please help me (as a consultant or paid bash script writer)?

https://redd.it/1acdtxz


Iterating over ls output is fragile. Use globs.

My editor gave me this warning, can y'all help me understand why?

The warning again is:

`Iterating over ls output is fragile. Use globs.`

Here is my lil script:

    #!/usr/bin/env bash

    for ZIPFILE in $(ls *.zip)
    do
        echo "Unzipping $ZIPFILE..."
        unzip -o "$ZIPFILE"
    done

What should I use instead of `$(ls *.zip)` and why?
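The warning exists because the output of `$(ls *.zip)` is split on whitespace and glob-expanded again, so a name like `my archive.zip` becomes two words. Letting the shell expand the glob directly keeps every file name as one word. A minimal sketch follows; `for_each_zip` is a hypothetical helper name chosen for illustration.

```bash
#!/usr/bin/env bash

# for_each_zip DIR CMD [ARGS...]: run CMD ARGS... FILE for every .zip in DIR.
for_each_zip() {
    local dir="$1"; shift
    local zipfile
    shopt -s nullglob            # an unmatched glob expands to nothing
    for zipfile in "$dir"/*.zip; do
        "$@" "$zipfile"          # the quoted name stays a single argument
    done
    shopt -u nullglob            # back to the default setting
}

# Usage, equivalent to the loop in the question:
#   for_each_zip . unzip -o
```

Without `nullglob`, a directory with no archives would make the loop run once with the literal string `*.zip`.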

https://redd.it/1abus2n


A Bash script whose purpose is to source the latest release version number of a GitHub repository


To use it, set the variable `url` (or name it whatever you like).

Repo example 1:

    url=https://github.com/rust-lang/rust.git

Repo example 2:

    url=https://github.com/llvm/llvm-project.git

Then run this command in your bash script:

    curl -sH "Content-Type: text/plain" "https://raw.githubusercontent.com/slyfox1186/script-repo/main/Bash/Misc/source-git-version.sh" | bash -s "$url"

These examples should return the following version numbers for llvm and rust respectively:

    17.0.6
    1.75.0

It works for all the repos I have tested so far, but I'm sure one will throw an error.

If a repo doesn't work, let me know and I'll see if I can fix it.
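For readers who prefer not to pipe a remote file into bash, the general idea can be approximated locally. The sketch below is not the linked script's method; it is one assumed approach that version-sorts tag names, and `latest_version` is a hypothetical helper. It relies on `sort -V` (GNU coreutils) and treats tags ending in `rc`, `alpha` or `beta` (plus optional digits) as pre-releases.

```bash
#!/usr/bin/env bash

# latest_version: read `git ls-remote --tags --refs` output on stdin and
# print the highest dotted version number found among the tag names.
latest_version() {
    sed 's|.*refs/tags/||' |                        # keep only the tag name
        grep -viE '(rc|alpha|beta)[-.]?[0-9]*$' |   # drop pre-release tags
        grep -oE '[0-9]+(\.[0-9]+)+' |              # extract e.g. 17.0.6
        sort -V |                                   # version-aware sort
        tail -n 1
}

# Usage (needs network access):
#   git ls-remote --tags --refs "$url" | latest_version
```

Tag naming differs between projects, so the pre-release filter will need adjusting for some repositories.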

https://redd.it/1abhv75


finding files and [sub]directories with exclusions

So, I recently discovered while using `find` and trying to exclude a particular directory via something like

    find "${base_dir}" ! -path "${exclude_dir}" ! -wholename "${exclude_dir}/*"

that `find` still scans the excluded directory and then removes its contents from the output, i.e., it doesn't "skip" this directory; it scans it like all the rest and then removes any results that match the `! -path` or `! -wholename` rule.

This can be a bit annoying (and make `find` run *really* slow) if the directory you are excluding is, for example:

* the mount point of a huge mounted zfs raidz2 pool storing some 40 TB of data

* the mount point of a 5 TB USB-attached HDD on an embedded system that can only read it at a maximum of ~20 MB/s

Since I ran into both of these in the last few days, I wrote up a little function to exclude directories from find without having to scan through them. That said, it's decently robust, but I'm sure some edge cases will give it trouble, and I feel there is probably a tool that does this more robustly, so if anyone knows what it is, by all means let me know.

The function below works by figuring out a minimal file/directory list to search (with `find`) that covers everything under the base search dir except the excluded stuff. For example: if you wanted to list everything under `/a/b` except for `/a/b/c`, `/a/b/d/e`, `/a/b/d/f`, and any subdirectories under those three exclusions, this list of "files and directories to search" would include:

* everything immediately under `/a/b` except for `/a/b/c` and `/a/b/d` \-\-AND\-\-

* everything immediately under `/a/b/d`, except for `/a/b/d/e` and `/a/b/d/f`

This function constructs this list by breaking apart excluded directories into "nesting levels" relative to the base search dir and then running `find -mindepth 1 -maxdepth 1 ! -path ...` in a loop on each unique dir from each nesting level.

***

OK, here's the code:

    efind () {
        ## find files/directories under a base search directory with certain files and directories (+sub-directories) excluded
        #
        # IMPORTANT NOTE:
        # excluded directories are not queried at all, making it fast in cases where the excluded directory contains A LOT of data
        # (unlike `find "$base_dir" ! -path "$exclude_dir/*"`, which traverses the excluded directory and then drops the results)
        #
        # USAGE:
        # 1st input is the base search directory that you are searching for things under
        # All remaining inputs are excluded files and/or directories
        #
        # dependencies: `realpath` and `find`

        local -a eLevels edir efile A B F;
        local bdir a b nn;
        local -i kk;

        shopt -s extglob;

        # get base search directory
        bdir="${1%\/}";
        shift 1;
        [[ "${bdir}" == \/* ]] || bdir="$(realpath "${bdir}")";

        # parse additional inputs. Split valid ones into separate lists of files / directories to exclude
        for nn in "${@%/}"; do
            # get real paths. If path is relative (doesn't start with / or ./ or ~/) assume it is relative to the base search directory (NOT PWD)
            case "${nn:0:1}" in
                [\~\.\/])
                    nn="$(realpath "$nn")";
                ;;
                *)
                    nn="$(realpath "${bdir}/${nn}")";
                ;;
            esac

            # ensure path is under base search directory
            [[ "$nn" == "${bdir}"\/* ]] || {
                printf 'WARNING: "%s" not under base search dir ("%s")\n ignoring "%s"\n\n' "$nn" "${bdir}" "$nn";
                continue;
            }

            # split into files list or directories list
            if [[ -f "$nn" ]]; then
                efile+=("$nn");
            elif [[ -d "$nn" ]]; then
                edir+=("$nn");
            else
                printf 'WARNING: "%s" not found as file or dir.\n Could be a "lack of permissions" issue?\n ignoring "%s"\n\n' "$nn" "$nn";
            fi;
        done;


        # split directories up into nesting levels relative to base search directory
        # (if base search directory is '/a/b', then: level 0 is '/a/b', level 1 is '/a/b/_', level 2 is '/a/b/_/_', level 3 is '/a/b/_/_/_', etc.)
        eLevels[0]="${bdir}";
        for nn in "${edir[@]%%+([\/\*])}"; do
            b="${nn#"${bdir%/}/"}/";
            a="${bdir%/}/";
            kk=1;
            until [[ -z $b ]] || [[ "$a" == "$nn" ]]; do
                a+="${b%%/*}/";
                b="${b#*/}";
                eLevels[$kk]+="${a%\/}"$'\n';
                ((kk++));
            done;
        done;

        # construct minimal list of files/directories to search that doesn't contain excluded directories and save in array F
        # EXAMPLE:
        # if the base search directory is '/a/b' and you want to exclude '/a/b/c', '/a/b/d/e', and '/a/b/d/f', this includes:
        # everything immediately under '/a/b' except for '/a/b/c' and '/a/b/d' --AND--
        # everything immediately under '/a/b/d', except for '/a/b/d/e' and '/a/b/d/f'
        mapfile -t F < <(for ((kk=1; kk<${#eLevels[@]}; kk++ )); do
            mapfile -t A < <(printf '%s' "${eLevels[$(( $kk - 1 ))]}" | sort -u)
            A=("${A[@]}");
            for nn in "${A[@]}"; do
                mapfile -t B < <(printf '%s' "${eLevels[$kk]}" | grep -F "${nn}" | sort -u)
                B=("${B[@]}");
                [[ -n "$(printf '%s' "${B[@]//[ \t]/}")" ]] && source /proc/self/fd/0 <<< "find \"${nn}\" -maxdepth 1 -mindepth 1 $(printf '! -path "%s" ' "${B[@]}"; printf '! -wholename "%s/*" ' "${B[@]}")";
            done;
        done);

        # run `find -O3` on the dir list saved in array F, with excluded files (from command line) now being excluded
        source /proc/self/fd/0 <<<"find -O3 \"\${F[@]}\" $(printf '! -path "%s" ' "${efile[@]}")";
    }
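On the question of whether an existing tool already does this: `find` itself has a `-prune` action that stops it from descending into a matching directory, which may cover the same use case. The sketch below is a separate illustration, not a rewrite of `efind`; `find_pruned` is a hypothetical name, and the excluded paths must be spelled the way `find` would print them (same prefix as the base directory).

```bash
#!/usr/bin/env bash

# find_pruned BASE [EXCLUDE_DIR...]: list everything under BASE without
# descending into any EXCLUDE_DIR.
find_pruned() {
    local base="$1"; shift
    local -a prune=()
    local dir
    for dir in "$@"; do
        prune+=( -o -path "$dir" )
    done
    if (( ${#prune[@]} )); then
        # Builds: find BASE ( -path A -o -path B ) -prune -o -print
        # "${prune[@]:1}" drops the leading -o.
        find "$base" \( "${prune[@]:1}" \) -prune -o -print
    else
        find "$base"
    fi
}

# Usage:
#   find_pruned /a/b /a/b/c /a/b/d/e /a/b/d/f
```

With `-prune -o -print`, the pruned directories themselves are not printed either, and their contents are never read.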

https://redd.it/1abct5q


like grep -A4 but instead of 4 lines go until matched pattern?


Does `grep` have a way, instead of specifying a fixed count like `grep -A4`, to print lines until it encounters a regex match like `^---`? The manpage calls this context line control, but that section gives no way to make the count variable; the number must be fixed. Does grep have another mechanism that could be used? Right now I've got a Python script that does this, but I'm very curious about a bash one-liner. My matches need anything from `grep -A2` to `grep -A7` up to something like `-A33`, and there's no way to know the number without counting the lines until the `^---` is encountered. Is grep capable of doing this on its own, or do I need another tool?

https://redd.it/1advlq7
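As far as I know, grep's context options only take fixed counts, so a range-based tool is the usual substitute. A minimal sketch with awk follows; `print_until` is a hypothetical helper, and the patterns are passed with `-v`, so backslashes inside them would be subject to awk's escape processing.

```bash
#!/usr/bin/env bash

# print_until START_RE END_RE FILE: print each line matching START_RE and
# the lines after it, stopping before the next line that matches END_RE.
print_until() {
    local start="$1" end="$2" file="$3"
    awk -v start="$start" -v end="$end" '
        $0 ~ end   { printing = 0 }   # terminator: stop, and do not print it
        $0 ~ start { printing = 1 }   # match: start printing from this line
        printing                      # print while the flag is set
    ' "$file"
}

# Usage:
#   print_until 'some pattern' '^---' notes.txt
```

If the terminator line should be included in the output, `sed -n '/some pattern/,/^---/p' notes.txt` does that in one line.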


Tool for fast tables in Bash + request for design opinions


I created a table tool for high-performance data access in Bash to support a user interface tool (I'm calling it pwb, for Pager with Benefits) that I'm finishing up: Array Table Extension (ate).

The tool is a Bash builtin written in C, and as such it can work in the script's process space and access elements of the script in which it is called, including script functions, which are sometimes called from the tool.

I would love to find a forum to discuss several design ideas I implemented, but the ate feature about which I am soliciting opinions, and which I am also considering for the pwb project, is how I create a "handle" with which one accesses the ate features. I think it's pretty developer-friendly, but if I'm mistaken, I might avoid making the same mistake on the new project.

I'll appreciate any comments or insights.

https://redd.it/1adz42r


GitHub - cjungmann/ate: Loadable Bash builtin for using an array as a table


Readline parsing in command completion

Can someone help me with command completion?

Or perhaps this is more about the readline library, but still.

    BASH_VERSION="4.4.20(1)-release"

I use this simple function to test the COMP_* variables during command completion:

    complete -F compvars compvars
    compvars() { echo >&2; declare -p ${!COMP_*} >&2; return 1; }

And I use arguments like 'name=' and 'name=value' in my scripts.

For example, this works as I expect and COMP_WORDS is easy to use:

    $ compvars a='' b<TAB>
    declare -- COMP_CWORD="3"
    declare -- COMP_KEY="9"
    declare -- COMP_LINE="compvars a='' b"
    declare -- COMP_POINT="15"
    declare -- COMP_TYPE="33"
    declare -- COMP_WORDBREAKS="
    \"'><=;|&(:"
    declare -a COMP_WORDS=([0]="compvars" [1]="a" [2]="=''" [3]="b")
    ^C

But when I use quotes around a value (to allow spaces in arguments), things get complicated:

    $ compvars a='x' b<TAB>
    declare -- COMP_CWORD="3"
    declare -- COMP_KEY="9"
    declare -- COMP_LINE="compvars a='x' b"
    declare -- COMP_POINT="16"
    declare -- COMP_TYPE="33"
    declare -- COMP_WORDBREAKS="
    \"'><=;|&(:"
    declare -a COMP_WORDS=([0]="compvars" [1]="a" [2]="='" [3]="x' b")
    ^C

Common sense says I should get "b" as a separate element in COMP_WORDS in the last completion too. Why doesn't the last quote in COMP_WORDS[3] also act as a word break and further split COMP_WORDS[3] into [3]="x'" and [4]="b"?

Is there some solution out there to reassemble COMP_WORDS to overcome cases like this?

https://redd.it/1ae4rp4


Simple bash script help

Hi,

I am hoping I can get some assistance with a simple bash script that I will run from cron.

If any file in one particular directory is older than 1 minute, then execute something.

I cannot get `find` to work simply like that.

Any thoughts?

Thank you !
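One way to express that check is with `find`'s `-mmin` test. The sketch below assumes GNU find (for `-maxdepth`, `-mmin` and `-quit`) and that "older" means last modified; `has_stale_file` and the paths in the usage line are hypothetical. `find` counts in whole minutes, so the exact behaviour right at the one-minute boundary is worth checking against the manual.

```bash
#!/usr/bin/env bash

# has_stale_file DIR: succeed if DIR directly contains a regular file that
# find's -mmin +1 test considers older than one minute.
has_stale_file() {
    local dir="$1"
    # -print -quit stops at the first match, so the output is non-empty
    # only when at least one such file exists.
    [[ -n "$(find "$dir" -maxdepth 1 -type f -mmin +1 -print -quit)" ]]
}

# Usage from a cron-driven script:
#   has_stale_file /path/to/directory && /path/to/command
```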

https://redd.it/1aeawaj


bash-workout, bash based timed workouts

Today I wrote a small utility for myself. I've been working on leveling up my bash skills recently.

This tool takes a CSV of workouts and times (in seconds) and runs through the workout, allowing you to time it perfectly. It comes with rest times built in. If you're on macOS, it also says the workouts out loud. If you're on Linux, you can easily swap to using something like espeak via the `$SPEACH_COMMAND` variable.

I'm probably the only person who'll ever use this, but on the off chance you can either learn something from it or use it, here it is: https://github.com/cogwizzle/bash-workout/tree/main

https://redd.it/1aeeikq

@r_bash

Weird Loop Behavior? No -negs allowed?

Hi all. I'm trying to generate an array of integers from -5 to 5.

for ((i = -5; i < 11; i++)); do

    newoffsets+=("$i")

done

echo "Checking final array:"

for all in "${newoffsets[@]}"; do

    echo " $all"

done

But the output extends to positive 11 instead. Even Bard is confused.

My guess is that negatives don't truly work in a C-style loop.

Finally, since I couldn't use negative numbers in the C-style loop as I expected, I added some new variables, did each calculation inside the loop, and incremented a separate counter. It's best to use the C-style loop in an absolute-value manner instead of using its $i counter when negatives are needed, etc.

Thus, the solution:

declare -i viewportsize=11

declare -i viewradius=$(((viewportsize - 1) / 2))

declare -i lowerbound=$((viewradius * -1))

unset newoffsets

for ((i = 0; i < viewportsize; i++)); do

    # bash can't employ negative c-loops; manual method:

    newoffsets+=("$lowerbound")

    ((lowerbound++))

done
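For what it's worth, negative start values do work in bash's C-style `for` loop. In the first snippet the loop condition is `i < 11`, so the loop keeps going well past 5; the start value is not the problem. A minimal sketch with the upper bound set to 5 instead:

```bash
# Build the offsets -5..5 directly with a C-style loop.
newoffsets=()
for ((i = -5; i <= 5; i++)); do
    newoffsets+=("$i")
done

echo "${newoffsets[*]}"
# prints: -5 -4 -3 -2 -1 0 1 2 3 4 5
```

The workaround with a separate counter also works, but it should not be needed just because the range starts below zero.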

https://redd.it/1aepy2i

@r_bash

Maintain list of env variables for both shell and systemd

I have a bunch of applications autostarted as systemd user services, and I would like them to inherit environment variables defined in the shell config (.zprofile, because these are rarely changed). I don't want to maintain two identical lists of variables (one for the login shell environment and one for systemctl import-environment <same list of these variables>).

I thought about using systemd's ~/.config/environment.d and then having my shell export everything on this list, but there are caveats mentioned here (I won't pretend I fully understand it all), which is why I'm thinking of just going with the initial approach (the shell config is also more flexible, allowing variables to be set conditionally based on more complicated logic). Parsing the output of env is also not reliable, as it contains some variables I didn't explicitly set that may not be appropriate for systemd to import.

What is a good way to go about this? I suppose the shell config can be parsed for the variables, but that seems pretty hacky. Maybe an associative array for the env variables, then parse the keys of the array for the variable names to pass to systemctl import-environment? Any help/examples are much appreciated.

https://redd.it/1afawp3
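A minimal sketch of the single-list idea, assuming the variable names are kept in one plain array in the shell profile and that same array is handed to `systemctl --user import-environment`. The variable names, their values, and the function name are made up for illustration; the syntax is intended to work in both bash and zsh.

```bash
# Hypothetical variables; set them however the profile needs to,
# including conditionally.
export EDITOR=vim
export MOZ_ENABLE_WAYLAND=1

# The one list to maintain: names of the variables systemd should inherit.
systemd_env_vars=(EDITOR MOZ_ENABLE_WAYLAND)

# Push the current values of the listed variables to the systemd user manager.
import_env_to_systemd() {
    systemctl --user import-environment "${systemd_env_vars[@]}"
}

# Call once at login, e.g. at the end of the profile:
# import_env_to_systemd
```

This avoids parsing the shell config or the output of env: only names that were deliberately added to the array are imported.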

@r_bash

GitHub

Discuss: A better way to handle environment variables? · Issue #6 · alebastr/sway-systemd

This project embeds some environment variables in a shell script. It advises modifying the shell script to update the environment variables, which is not ideal. Is there a better way? Is systemd...

Video Contact Sheet

I am trying to make a video contact sheet script, and I don't know how to calculate the duration of the video and pipe it as input to this ffmpeg script.
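A rough sketch of the duration part, assuming ffprobe (shipped with ffmpeg) is installed. The function names and `input.mp4` are hypothetical, and since the ffmpeg script itself is only linked as an image, how the result is fed into it is left open.

```bash
# Print the duration of a video in seconds (e.g. 120.500000).
video_duration() {
    ffprobe -v error -show_entries format=duration \
        -of default=noprint_wrappers=1:nokey=1 "$1"
}

# Whole seconds between thumbnails when taking COUNT evenly spaced frames.
# The fractional part of the duration is dropped, since bash arithmetic
# is integer-only.
thumbnail_interval() {
    local duration=${1%.*} count=$2
    echo $(( duration / count ))
}

# Usage (hypothetical file name):
# interval=$(thumbnail_interval "$(video_duration input.mp4)" 12)
```

The interval can then be passed to the ffmpeg command as a shell variable rather than through a pipe.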

https://redd.it/1afdtgl

@r_bash

Are there terminal apps like Fig but for Windows?

I am looking for a nice-looking command line like Fig, with features like autocomplete. Are there Windows alternatives?

https://redd.it/1affapj

@r_bash

What is the best way to run a server polling script every minute, for a few days only? Will it hamper the server in any way?

host=www.google.com

port=443

while true; do

    current_time=$(date +%H:%M:%S)

    r=$(bash -c 'exec 3<> /dev/tcp/'$host'/'$port';echo $?' 2>/dev/null)

    if [ "$r" = "0" ]; then

        echo "[$current_time] $host $port is open" >>tori.txt

    else

        echo "[$current_time] $host $port is closed" >>tori.txt

    fi

    sleep 60

done

This is the script. It runs every minute. I was planning to use setsid ./script.sh, but I didn't find an easy way to stop that process, so I am not doing that. Should I run it as a cron job? (It doesn't make much sense to run this as a cron job, though, as it's already sleeping for 60 seconds.) Anything you can think of?
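One option, sketched here under assumptions, is to give the loop a deadline so it stops by itself after a few days. The function names `check_port` and `poll_until` are hypothetical, the 5-second `timeout` (GNU coreutils) around the connection attempt is an addition not present in the script above, and the usage line relies on GNU date.

```bash
# Log whether host:port accepts a TCP connection (uses bash's /dev/tcp).
check_port() {
    local host=$1 port=$2 log=${3:-tori.txt} state=closed
    # timeout keeps a hanging connection attempt from stalling the loop.
    if timeout 5 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
        state=open
    fi
    echo "[$(date +%H:%M:%S)] $host $port is $state" >>"$log"
}

# Run a command every INTERVAL seconds until the epoch time DEADLINE.
poll_until() {
    local deadline=$1 interval=$2
    shift 2
    while (( $(date +%s) < deadline )); do
        "$@"
        sleep "$interval"
    done
}

# Usage: poll once a minute for three days, then exit.
# poll_until "$(date -d '+3 days' +%s)" 60 check_port www.google.com 443
```

Started with something like `nohup ./script.sh &`, the process ID printed by the shell can be passed to `kill` to stop it early. With cron the loop and the sleep would be dropped, and cron would run a single check each minute.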

https://redd.it/1afjbg7

@r_bash

Running a command inside another command in a one liner?

I'm not too familiar with bash, so I might not be using the correct terms. What I'm trying to do is make a one-liner that sends a PUT request to a page, with its body being the output of a command.

I'm trying to make this

date -Iseconds | head -c -7

go in the "value" of this command

curl -X PUT -H "Content-Type: application/json" -d '{"UTC":"value"}' address

The idea is that I'll run this from crontab every minute or so to update the time of a "smart" appliance (a Philips Hue bridge).
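The usual tool for this is command substitution, `$(...)`, which is replaced by the output of the command inside it. A minimal sketch, assuming GNU date and head as used above; the function name `utc_body` is hypothetical and `address` is the placeholder from the post.

```bash
# Build the JSON body with the timestamp produced by the date pipeline.
utc_body() {
    local stamp
    stamp=$(date -Iseconds | head -c -7)
    printf '{"UTC":"%s"}' "$stamp"
}

# Usage:
# curl -X PUT -H "Content-Type: application/json" -d "$(utc_body)" address
```

The same thing fits on one line by putting the substitution inside a double-quoted body and escaping the inner quotes: `-d "{\"UTC\":\"$(date -Iseconds | head -c -7)\"}"`. Single quotes would not work there, because the shell does not expand `$(...)` inside them.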

https://redd.it/1afmquq

@r_bash

Need to create a loop that runs mkdir -p with the openssl rand -hex 2 output

Hi guys.

I need to create a loop that runs mkdir -p with the openssl rand -hex 2 output.

I tried this:

#!/bin/bash

var=$(openssl rand -hex 2)

for line in $var

do

    mkdir "${line}"

done

But it iterates once and then stops!

I need to cover every possible combination that openssl rand -hex 2 can give.

Long story short: I need to create hex directories from 0000 to FFFF.

Thanks
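`openssl rand -hex 2` prints a single random four-digit hex value per call, so the loop above only ever sees one word. To cover every value it should be enough to count from 0 to 0xFFFF and format each number as hex. A minimal sketch; the function name `make_hex_dirs` and its optional range arguments are hypothetical, and it produces lowercase names to match the openssl output (use `%04X` for uppercase).

```bash
# Create one directory per four-digit hex value under BASE.
# FIRST and LAST default to the full range 0000..ffff.
make_hex_dirs() {
    local base=$1 first=${2:-0} last=${3:-0xFFFF} i name
    for ((i = first; i <= last; i++)); do
        printf -v name '%04x' "$i"    # zero-padded lowercase hex
        mkdir -p "$base/$name"
    done
}

# Usage (hypothetical path): creates all 65536 directories.
# make_hex_dirs /path/to/base
```

Running mkdir once per directory is slow for the full range but keeps the loop easy to follow.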

https://redd.it/1afrne9

@r_bash
