Bash, count IPs in subnet / range

I've been all over Google. Maybe I'm typing the wrong words.

I'm trying to look at a method to extract the number of IP addresses by simply providing a range.

`25.25.25.25/17` would return `32768`

I found a few older bash scripts from back in 2013, but one did not return the correct results, and it was a massive script. I don't know enough about IPv4 addresses to attempt this on my own.

Just looking for a template or a direction to go in.

A vanilla solution would be nice, where no extra packages need to be installed, but if it absolutely must be a package, then I can live with that. Pretty sure I can install whatever package I need.

I found ipcalc, which makes it easy, but I'd need a way to extract just the value

```
$ ipcalc 10.10.10.10/32
Address: 10.10.10.10 00001010.00001010.00001010.00001010
Netmask: 255.255.255.255 = 32 11111111.11111111.11111111.11111111
Wildcard: 0.0.0.0 00000000.00000000.00000000.00000000
=>
Hostroute: 10.10.10.10 00001010.00001010.00001010.00001010
Hosts/Net: 1 Class A, Private Internet
```

```
$ ipcalc 10.10.10.10/17
Address: 10.10.10.10 00001010.00001010.0 0001010.00001010
Netmask: 255.255.128.0 = 17 11111111.11111111.1 0000000.00000000
Wildcard: 0.0.127.255 00000000.00000000.0 1111111.11111111
=>
Network: 10.10.0.0/17 00001010.00001010.0 0000000.00000000
HostMin: 10.10.0.1 00001010.00001010.0 0000000.00000001
HostMax: 10.10.127.254 00001010.00001010.0 1111111.11111110
Broadcast: 10.10.127.255 00001010.00001010.0 1111111.11111111
Hosts/Net: 32766 Class A, Private Internet
```

Using

```
ipcalc 10.10.10.10/32 | grep "Hosts/Net"
```

allows me to narrow it down, but there's still text aside from just the number:

```
$ ipcalc 10.10.10.10/32 | grep "Hosts/Net"
Hosts/Net: 1 Class A, Private Internet
```
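For what it's worth, here is a minimal sketch of both routes. The function names `hosts_from_ipcalc` and `count_ips` are made up for illustration. The first assumes the `ipcalc` output format shown above and just prints the second field of the `Hosts/Net:` line. Note that for `/17` that field is `32766` (usable hosts), not the `32768` asked for, so the second function skips `ipcalc` and computes 2^(32 - prefix) with plain shell arithmetic.

```bash
#!/usr/bin/env bash

# Print the second field of the "Hosts/Net:" line from ipcalc output on stdin.
hosts_from_ipcalc() {
    awk '/^Hosts\/Net:/ { print $2 }'
}

# Count every address in an IPv4 CIDR block: 2^(32 - prefix).
# Only the prefix length is validated; the address part is not checked.
count_ips() {
    local cidr=$1 prefix
    [[ $cidr == */* ]] || { printf 'missing /prefix: %s\n' "$cidr" >&2; return 1; }
    prefix=${cidr##*/}
    if [[ ! $prefix =~ ^[0-9]{1,2}$ ]] || (( 10#$prefix > 32 )); then
        printf 'invalid prefix length: %s\n' "$prefix" >&2
        return 1
    fi
    echo $(( 1 << (32 - 10#$prefix) ))
}

count_ips 25.25.25.25/17    # prints 32768
```

With `ipcalc` installed, the first helper would be used as `ipcalc 10.10.10.10/17 | hosts_from_ipcalc`.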

https://redd.it/1g9yl0t

@r_bash


I don't know bash. I need a script to find big folders

*bigger than 100MB. Then, move them to /drive/.links/ and create a link from the old folder to the new one.
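A rough sketch of one way to do this, under several assumptions that are not in the post: only the immediate subdirectories of a starting directory are checked, sizes come from `du -sm`, hidden directories are skipped, and the destination is given as an absolute path so the symlink resolves. The function name `relocate_big_dirs` is hypothetical.

```bash
#!/usr/bin/env bash

# Move each immediate subdirectory of SRC that is larger than LIMIT_MB into
# DEST and leave a symlink at the old location. DEST should be absolute.
relocate_big_dirs() {
    local src=$1 dest=$2 limit_mb=${3:-100}
    local dir size name
    mkdir -p -- "$dest" || return 1
    for dir in "$src"/*/; do
        dir=${dir%/}
        [[ -d $dir && ! -L $dir ]] || continue    # skip non-dirs and existing links
        size=$(du -sm -- "$dir" | cut -f1)        # total size in megabytes
        (( size > limit_mb )) || continue
        name=${dir##*/}
        if [[ -e $dest/$name ]]; then
            printf 'skipping %s: %s already exists\n' "$dir" "$dest/$name" >&2
            continue
        fi
        mv -- "$dir" "$dest/$name" && ln -s -- "$dest/$name" "$dir"
    done
}

# Example call (try it on a test directory first):
#   relocate_big_dirs "$HOME" /drive/.links 100
```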

https://redd.it/1g96wgf

@r_bash


I prefer eza rather than ls


Eza (fork of exa) https://github.com/eza-community/eza is similar to `ls` but with color output, fancy Unicode icons for file types, and a few other improvements. However, if you set `alias ls='eza --icons'` it may not work all the time, because it is missing `-Z` for SELinux, or it puts icons into the output. But it is quite easy to fix in my `~/.bashrc`:

    function ls() {
        if [[ $* == *-Z* ]]; then
            # eza has no -Z, so hand over to the real ls and stop here
            /usr/bin/ls "$@"
            return
        fi
        if [ -t 1 ]; then
            # Output to TTY
            eza --icons "$@"
        else
            /usr/bin/ls "$@"
        fi
    }

So, if `-Z` is present, then use `/usr/bin/ls`; likewise, if the output is not a TTY (the else block for `-t`), it will use `/usr/bin/ls` instead. (If I used just `ls` there, the new function would recursively call itself :).

https://redd.it/1gabtlo

@r_bash


How Does a Non-Interactive Shell Have Access to Its Parent Interactive Shell?

Hi. I'm just curious what things a script that is launched from an interactive shell has access to about the interactive shell. Can it see what options are enabled in the shell? Does the non-interactive shell even know it was launched from an interactive shell? Or is it like a sandbox? I don't know if I'm conveying what I mean.
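One way to poke at this is a small experiment. The sketch below (the names `child_report`, `DEMO_EXPORTED` and `DEMO_LOCAL` are made up) starts a child `bash` and prints whether `$-` contains `i` (the interactive flag) and which of two parent variables it can see. A child process inherits exported variables but not unexported ones, and it gets its own option flags rather than the parent's.

```bash
#!/usr/bin/env bash

# Start a child bash and report what it can observe.
child_report() {
    bash -c '
        case $- in
            *i*) echo "interactive=yes" ;;
            *)   echo "interactive=no" ;;
        esac
        echo "exported=${DEMO_EXPORTED-unset}"
        echo "unexported=${DEMO_LOCAL-unset}"
    '
}

DEMO_LOCAL=hidden               # plain shell variable, not passed on
export DEMO_EXPORTED=visible    # exported, so the child inherits it
child_report
```

Run from an interactive prompt, the child should still report `interactive=no`, since it only knows about its own flags.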

https://redd.it/1gaojfj

@r_bash


Read from standard input


Quick question: in a script, how do I read from standard input and store it in a string variable or array if the first argument to the script is a `-`? The script also takes other arguments, in which case it shouldn't read from standard input.

https://redd.it/1gawaq4
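A minimal sketch of one approach, assuming bash 4 or newer for `mapfile`. The function name `collect_items` and the global array `items` are made up for illustration.

```bash
#!/usr/bin/env bash

# Fill the global array `items` from stdin when the first argument is "-",
# otherwise from the arguments themselves.
collect_items() {
    if [[ ${1-} == "-" ]]; then
        mapfile -t items          # one array element per input line
    else
        items=("$@")
    fi
}

collect_items - <<< $'first line\nsecond line'
printf '%s\n' "${items[@]}"
```

For a single string instead of an array, `text=$(cat)` in the `-` branch would capture all of standard input into one variable.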

@r_bash


Deployment, Bash, and Best Practices.


Hi guys, I have a few questions related to the deployment process. While this might not be strictly about Bash, I’m currently using Bash for my deployment process, so I hope this is the right place to ask.

I’ve created a simple deployment script that copies files to a server and then connects to it to execute various commands remotely. Here’s the script I’m using:

```bash
#!/bin/bash

# Source the .env file to load environment variables
if [ -f ".env" ]; then
    source .env
else
    echo "Error: .env file not found."
    exit 1
fi

# Check if the first argument is "true" or "false"
if [[ "$1" != "true" && "$1" != "false" ]]; then
    printf "Usage: ./main_setup.sh [true|false]\n"
    printf "\ttrue - Perform full server setup (install Nginx, set up authentication and systemd)\n"
    printf "\tfalse - Skip server setup and only deploy the Rust application\n"
    exit 1
fi

# Ensure required variables are loaded
if [[ -z "$SERVER_IP" || -z "$SERVER_USER" || -z "$BASIC_AUTH_USER" || -z "$BASIC_AUTH_PASSWORD" ]]; then
    printf "Error: Deploy environment variables are not set correctly in the .env file.\n"
    exit 1
fi

printf "Building the Rust app...\n"
cargo build --release --target x86_64-unknown-linux-gnu

# If the first argument is "true", perform full server setup
if [[ "$1" == "true" ]]; then
    printf "Setting up the server...\n"

    # Upload the configuration files
    scp -i "$PATH_TO_SSH_KEY" nginx_config.conf "$SERVER_USER@$SERVER_IP:/tmp/nginx_config.conf"
    scp -i "$PATH_TO_SSH_KEY" logrotate_nginx.conf "$SERVER_USER@$SERVER_IP:/tmp/logrotate_nginx.conf"
    scp -i "$PATH_TO_SSH_KEY" logrotate_rust_app.conf "$SERVER_USER@$SERVER_IP:/tmp/logrotate_rust_app.conf"
    scp -i "$PATH_TO_SSH_KEY" rust_app.service "$SERVER_USER@$SERVER_IP:/tmp/rust_app.service"

    # Upload app files
    scp -i "$PATH_TO_SSH_KEY" ../target/x86_64-unknown-linux-gnu/release/rust_app "$SERVER_USER@$SERVER_IP:/tmp/rust_app"
    scp -i "$PATH_TO_SSH_KEY" ../.env "$SERVER_USER@$SERVER_IP:/tmp/.env"

    # Connect to the server and execute commands remotely
    ssh -i "$PATH_TO_SSH_KEY" "$SERVER_USER@$SERVER_IP" << EOF
    # Update system and install necessary packages
    sudo apt-get -y update
    sudo apt -y install nginx apache2-utils

    # Create password file for basic authentication
    echo "$BASIC_AUTH_PASSWORD" | sudo htpasswd -ci /etc/nginx/.htpasswd $BASIC_AUTH_USER

    # Copy configuration files with root ownership
    sudo cp /tmp/nginx_config.conf /etc/nginx/sites-available/rust_app
    sudo rm -f /etc/nginx/sites-enabled/rust_app
    sudo ln -s /etc/nginx/sites-available/rust_app /etc/nginx/sites-enabled/
    sudo cp /tmp/logrotate_nginx.conf /etc/logrotate.d/nginx
    sudo cp /tmp/logrotate_rust_app.conf /etc/logrotate.d/rust_app
    sudo cp /tmp/rust_app.service /etc/systemd/system/rust_app.service

    # Copy the Rust app and .env file
    mkdir -p /home/$SERVER_USER/rust_app_folder
    mv /tmp/rust_app /home/$SERVER_USER/rust_app_folder/rust_app
    mv /tmp/.env /home/$SERVER_USER/rust_app_folder/.env

    # Clean up temporary files
    sudo rm -f /tmp/nginx_config.conf /tmp/logrotate_nginx.conf /tmp/logrotate_rust_app.conf /tmp/rust_app.service

    # Enable and start the services
    sudo systemctl daemon-reload
    sudo systemctl enable nginx
    sudo systemctl start nginx
    sudo systemctl enable rust_app
    sudo systemctl start rust_app

    # Add the crontab task
    sudo mkdir -p /var/log/rust_app/crontab/log
    (sudo crontab -l 2>/dev/null | grep -q "/usr/bin/curl -X POST http://localhost/rust_app/full_job" || (sudo crontab -l 2>/dev/null; echo "00 21 * * * /usr/bin/curl -X POST http://localhost/rust_app/full_job >> /var/log/rust_app/crontab/\\\$(date +\\%Y-\\%m-\\%d).log 2>&1") | sudo crontab -)
EOF
else
    # Only deploy the Rust application
    scp -i "$PATH_TO_SSH_KEY" ../target/x86_64-unknown-linux-gnu/release/rust_app "$SERVER_USER@$SERVER_IP:/tmp/rust_app"
    scp -i "$PATH_TO_SSH_KEY" ../.env "$SERVER_USER@$SERVER_IP:/tmp/.env"

    ssh -i "$PATH_TO_SSH_KEY" "$SERVER_USER@$SERVER_IP" << EOF
    mv /tmp/rust_app /home/$SERVER_USER/rust_app_folder/rust_app
    mv /tmp/.env /home/$SERVER_USER/rust_app_folder/.env
    sudo systemctl restart rust_app
EOF
fi
```

So the first question: is using Bash for deployment good practice, or should I be using something more specialized, like Ansible or Jenkins?

The second question is related to Bash. When executing multiple commands on a remote server using an EOF block, the commands appear as plain text in editors like Vim, without proper syntax highlighting or formatting. Is there a more elegant way to manage this? For example, could I define a function locally that contains all the commands, evaluate certain variables (such as `$SERVER_USER`) beforehand, and then send the complete function to the remote server for execution? Alternatively, is there a way to print the evaluated function and pass it to an EOF block as a sequence of commands, similar to how it's done now?
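On the second question, one possible sketch uses `declare -f`, which prints a function's definition as text. The function names `remote_deploy` and `build_remote_script` are hypothetical and the body is a placeholder, not the real setup steps. The idea is to send the definition plus a call whose arguments are evaluated and quoted locally with `printf %q`. It assumes the remote login can run `bash`.

```bash
#!/usr/bin/env bash

# Commands meant to run on the server, written as an ordinary function so the
# editor highlights them. Placeholder body for illustration only.
remote_deploy() {
    local user=$1
    echo "deploying for $user"
    echo "app dir: /home/$user/rust_app_folder"
}

# Build the text to send: the function definition followed by a call with
# locally evaluated, shell-quoted arguments.
build_remote_script() {
    declare -f remote_deploy
    printf 'remote_deploy %q\n' "$1"
}

# Checked locally here by piping into bash. Over SSH it would look like:
#   build_remote_script "$SERVER_USER" | ssh -i "$PATH_TO_SSH_KEY" "$SERVER_USER@$SERVER_IP" bash -s
build_remote_script "alice" | bash -s
```

Because the arguments are passed as positional parameters, nothing inside the function body is expanded on the local side, which avoids the escaping needed in an unquoted heredoc.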

Thanks!

https://redd.it/1gbaanm

@r_bash

scp -i "$PATH_TO_SSH_KEY" ../.env "$SERVER_USER@$SERVER_IP:/tmp/.env"

ssh -i "$PATH_TO_SSH_KEY" "$SERVER_USER@$SERVER_IP" << EOF

mv /tmp/rust-app /home/$SERVER_USER/rust_app_folder/rust_app

mv /tmp/.env /home/$SERVER_USER/rust_app_folder/.env

sudo systemctl restart rust_app

EOF

fi

```

So the first question is using Bash for deployment a good practice? I’m wondering if it's best practice to do it or should I be using something more specialized, like Ansible or Jenkins?

The second question is related to Bash. When executing multiple commands on a remote server using an EOF block, the commands often appear as plain text in editors like Vim, without proper syntax highlighting or formatting. Is there a more elegant way to manage this? For example, could I define a function locally that contains all the commands, evaluate certain variables (such as $SERVER_USER) beforehand, and then send the complete function to the remote server for execution? Alternatively, is there a way to print the evaluated function and pass it to an EOF block as a sequence of commands, similar to how it's done now?

Thanks!

https://redd.it/1gbaanm

@r_bash

Reddit

From the bash community on Reddit

Explore this post and more from the bash community

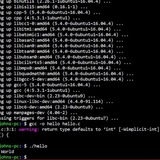

bash: java: command not found

My Linux distro is Debian 12.7.0, 64bit, English.

I modified the guide titled [How to install Java JDK 21 or OpenJDK 21 on Debian 12](https://green.cloud/docs/how-to-install-java-jdk-21-or-openjdk-21-on-debian-12/) so that I could "install"/use the latest production-ready release of OpenJDK 23.0.1 (FYI Debian's official repos contain OpenJDK 17, which is outdated for my use).

I clicked the link [https://download.java.net/java/GA/jdk23.0.1/c28985cbf10d4e648e4004050f8781aa/11/GPL/openjdk-23.0.1\_linux-x64\_bin.tar.gz](https://download.java.net/java/GA/jdk23.0.1/c28985cbf10d4e648e4004050f8781aa/11/GPL/openjdk-23.0.1_linux-x64_bin.tar.gz) to download the software to my computer.

Next I extracted the zipped file using the below command:

`tar xvf openjdk-23.0.1_linux-x64_bin.tar.gz`

A new directory was created on my device. It is called *jdk-23.0.1*

I copied said directory to `/usr/local`

`sudo cp -r jdk-23.0.1 /usr/local`

I created a new source script to set the Java environment by issuing the following commands:

    su -i
    tee -a /etc/profile.d/jdk23.0.1.sh<<EOF
    > export JAVA_HOME=/usr/local/jdk-23.0.1
    > export PATH=$PATH:$JAVA_HOME/bin
    > EOF

After having done the above, I opened *jdk23.0.1.sh* using FeatherPad and the contents showed the following:

    export JAVA_HOME=/usr/local/jdk-23.0.1
    export PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/bin

Based on the guide, I typed the following command:

`source /etc/profile.d/jdk23.0.1.sh`

To check the OpenJDK version on my computer, I typed:

`java --version`

An error message appeared:

`bash: java: command not found`

Could someone show me what I did wrong please? Thanks.
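A likely cause, going by the file contents shown above: with an unquoted `EOF` delimiter the shell expands `$PATH` and `$JAVA_HOME` while the file is being written, and since `JAVA_HOME` was not set at that moment, `$JAVA_HOME/bin` became `/bin`. Quoting the delimiter keeps the variables literal in the file. A minimal sketch, writing to a temporary file instead of `/etc/profile.d` (the function name `write_jdk_profile` is made up):

```bash
#!/usr/bin/env bash

# Write the profile snippet to the given path. The quoted 'EOF' delimiter
# stops the shell from expanding $PATH and $JAVA_HOME while writing.
write_jdk_profile() {
    cat > "$1" <<'EOF'
export JAVA_HOME=/usr/local/jdk-23.0.1
export PATH=$PATH:$JAVA_HOME/bin
EOF
}

demo_file=$(mktemp)
write_jdk_profile "$demo_file"
cat "$demo_file"
```

For the real file the same quoted heredoc can be fed to `tee` as root. Since `tee -a` appends, the two existing lines would need to be removed from the file first.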

https://redd.it/1gcjouf

@r_bash


Would you consider these silly aliases?


    alias vi="test -f ./.vim/viminfo.vim && VIMINFO=./.vim/viminfo.vim || VIMINFO=~/.viminfo; vim -i \$VIMINFO"
    alias make='vim Makefile && make'

The first one is so that I don't have my registers for prose-writing available whenever I'm doing Python stuff, and vice versa.

The second one is basically akin to `git commit`.

https://redd.it/1gczyt4

@r_bash


What is it called when you add an interface to your terminal?

I apologize if this isn't the right sub, but I do plan on using bash to do this so I can use it across platforms. I'm trying to figure out what it's called, as I don't think shell is the proper term, and visor seems unrelated. Basically, something with buttons for functions that sticks around at the top of the terminal's active area, active just meaning the space you can change the color of and nowhere outside it.

Thing is, I don't want any input or output going underneath the buttons, which I want to use ANSI for. I would just call it an interface, but that's way too vague, and it would be way too little to call a shell.

It would look similar to a HUD placed on your terminal, with active areas you could click with an HID. Any idea what this is called?
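The usual name for this kind of thing is a TUI (text user interface). As a rough sketch of the "nothing goes underneath the buttons" part, ANSI terminals have a scrolling-region sequence (`ESC [ top ; bottom r`) that confines scrolling to a range of rows, leaving the rows above it alone. The function name `pin_header` is made up, and this assumes a terminal that honors the sequence.

```bash
#!/usr/bin/env bash

# Emit the sequences that draw a one-line header on row 1 and restrict
# scrolling to rows 2..ROWS, so later output does not overwrite the header.
pin_header() {
    local rows=$1 text=$2
    printf '\e[2J'              # clear the screen
    printf '\e[1;1H%s' "$text"  # draw the header on row 1
    printf '\e[2;%dr' "$rows"   # set the scrolling region to rows 2..ROWS
    printf '\e[2;1H'            # move the cursor to the top of that region
}

# Example (in an interactive terminal):
#   pin_header "$LINES" '[ Build ] [ Test ] [ Quit ]'
#   printf '\e[r'    # restore the full-screen scrolling region afterwards
```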

https://redd.it/1gd8pq1

@r_bash


sensors_t: a simple bash function for monitoring the temperature of various system components with sensors

[LINK TO THE CODE ON GITHUB](https://github.com/jkool702/misc-public-noscripts/blob/main/sensors_t.bash)

`sensors_t` is a fairly short and simple bash function that makes it easy to monitor temperatures for your CPU and other various system components using `sensors` (from the `lm_sensors` package).

***

###FEATURES

`sensors_t` is not *drastically* different than a simple infinite loop that repeatedly calls `sensors; sleep 1`, but `sensors_t` does a few extra things:

1. `sensors_t` "cleans up" the output from `sensors` a bit, distilling it down to the sensor group name and the actual sensor outputs that report a temperature or a fan/pump RPM speed.

2. For each temperature reported, `sensors_t` keeps track of the maximum temperature seen since it started running, and adds this info to the end of the line in the displayed `sensors` output.

3. `sensors_t` attempts to identify which temperatures are from the CPU (package or individual cores), and adds a line showing the single hottest temperature from the CPU.^(1)

4. If you have an NVIDIA GPU and have `nvidia-smi` available, `sensors_t` will use it to get the GPU temp and add a line displaying it.^(2)

NOTE: the only systems I have available to test `sensors_t` use older (pre-P/E-core) Intel CPUs and NVIDIA GPUs.

^(1)This (identifying which sensors are from the CPU) assumes that [only] these lines all begin with either "Core" or "Package". This assumption may not be true for all CPUs, meaning the "hottest core temp" line may not work on some CPUs. If it doesn't work and you leave your CPU name and the output from calling `sensors`, I'll try to add in support for that CPU.

^(2)If someone with an AMD or Intel GPU can provide a one-liner to get the GPU temp, I'll try to incorporate it and add in support for non-NVIDIA GPUs too.
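To illustrate the max-tracking idea in feature 2, here is a small sketch. It is not taken from `sensors_t.bash`: the names `track_max` and `max_tenths` are hypothetical, and it assumes lines shaped like `Core 0: +46.0°C` with non-negative readings and one decimal digit. Since bash arithmetic is integer-only, readings are stored in tenths of a degree.

```bash
#!/usr/bin/env bash

declare -A max_tenths   # label -> highest reading seen, in tenths of a degree

# Read sensors-style lines from stdin and remember the maximum per label.
track_max() {
    local line label tenths
    local re='^([^:]+):[[:space:]]*\+([0-9]+)\.([0-9])°C'
    while IFS= read -r line; do
        [[ $line =~ $re ]] || continue      # skip lines without a temperature
        label=${BASH_REMATCH[1]}
        tenths=$(( 10#${BASH_REMATCH[2]} * 10 + ${BASH_REMATCH[3]} ))
        if [[ -z ${max_tenths[$label]+x} ]] || (( tenths > ${max_tenths[$label]} )); then
            max_tenths[$label]=$tenths
        fi
    done
}

track_max <<< $'Core 0: +46.0°C\nFan: 0 RPM\nCore 0: +81.0°C\nCore 0: +50.0°C'
t=${max_tenths[Core 0]}
printf 'Core 0 max: +%d.%d°C\n' "$(( t / 10 ))" "$(( t % 10 ))"   # Core 0 max: +81.0°C
```

In a monitoring loop the input would come from process substitution, for example `track_max < <(sensors)`, so the array survives in the current shell rather than being lost in a pipeline subshell.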

***

###USAGE

Usage is very simple: source the `sensors_t.bash` script, then run

    sensors_t [N] [CHIP(S)]

`N` is an optional input to change the waiting period between updates (default is 1 second). If provided, it must be the first argument.

`CHIP(S)` are optional inputs to limit which sensor chips have their data displayed (default is to omit this and display temperature data from all sensors). To see possible values for `CHIP(S)`, first run `sensors_t` without this parameter.

    # example invocations
    sensors_t                     # 1 second updates, all sensors
    sensors_t 5                   # 5 second updates, all sensors
    sensors_t coretemp-isa-0000   # 1 second updates, only CPU temp sensors

***

###EXAMPLE OUTPUT PAGE

    ___________________________________________
    ___________________________________________
    Monitor has been running for: 173 seconds
    -------------------------------------------
    ----------------
    coretemp-isa-0000
    ----------------
    Package id 0: +46.0°C ( MAX = +98.0°C )
    Core 0: +46.0°C ( MAX = +81.0°C )
    Core 1: +46.0°C ( MAX = +88.0°C )
    Core 2: +48.0°C ( MAX = +87.0°C )
    Core 3: +45.0°C ( MAX = +98.0°C )
    Core 4: +43.0°C ( MAX = +91.0°C )
    Core 5: +45.0°C ( MAX = +99.0°C )
    Core 6: +45.0°C ( MAX = +82.0°C )
    Core 8: +44.0°C ( MAX = +84.0°C )
    Core 9: +43.0°C ( MAX = +90.0°C )
    Core 10: +43.0°C ( MAX = +93.0°C )
    Core 11: +44.0°C ( MAX = +80.0°C )
    Core 12: +43.0°C ( MAX = +93.0°C )
    Core 13: +46.0°C ( MAX = +79.0°C )
    Core 14: +44.0°C ( MAX = +81.0°C )
    ----------------
    kraken2-hid-3-1
    ----------------
    Fan: 0 RPM
    Pump: 2826 RPM
    Coolant: +45.1°C ( MAX = +45.4°C )
    ----------------
    nvme-pci-0c00
    ----------------
    Composite: +42.9°C ( MAX = +46.9°C )
    ----------------
    enp10s0-pci-0a00
    ----------------
    MAC Temperature: +53.9°C ( MAX = +59.3°C )
    ----------------
    nvme-pci-b300
    ----------------


    Composite: +40.9°C ( MAX = +42.9°C )
    Sensor 1: +40.9°C ( MAX = +42.9°C )
    Sensor 2: +42.9°C ( MAX = +48.9°C )
    ----------------
    nvme-pci-0200
    ----------------
    Composite: +37.9°C ( MAX = +39.9°C )
    ----------------
    Additional Temps
    ----------------
    CPU HOT TEMP: +48.0°C ( CPU HOT MAX = +99.0°C )
    GPU TEMP: +36.0°C ( GPU MAX = 39.0°C )
    ----------------
    ----------------

***

I hope some of you find this useful. Feel free to leave comments / questions / suggestions / bug reports.

https://redd.it/1gddbgq

@r_bash


Two values separated by control character


I have a file from a program which I need to parse. The content is simple, but the control character in the middle is really throwing me off.

01 Brad

05 Dan

12 Tim

This is in a file, so my original thought was to just loop over each line of the file

for line in $(cat list.txt); do

done

But what I'm trying to do is, on each iteration, get access to both the number on the left and the name, because a folder is going to be created based on the left number, and then the filename will be the value on the right.

In a perfect world, I could just call each where I need it

for line in $(cat list.txt); do

mkdir -p ${leftValue}

touch ${rightValue}

done

This is just an example, but the objective is to have control of calling each side when I need.

I've tried sed filtering with \009, \u0009, etc., even trying [:alnum:], just to do something simple like replace the control character with a | pipe. Just to get some type of result.

https://redd.it/1gdezbe

@r_bash
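
For the two-column list in the post above, one common approach is to let read do the splitting. This is only a sketch: it assumes the control character is a single tab (the \009 attempts suggest so), the function name process_list is made up for illustration, and placing the file inside the new folder is a guess at the intent.

```bash
#!/usr/bin/env bash
# Sketch: split each line of a tab-separated file into its two fields.
# Assumption: the separator is a tab character; process_list is a hypothetical name.
process_list() {
    local file=$1 leftValue rightValue
    # IFS=$'\t' makes read split on tabs only; -r keeps backslashes literal.
    # The "|| [[ -n ... ]]" part also handles a last line without a newline.
    while IFS=$'\t' read -r leftValue rightValue || [[ -n $leftValue ]]; do
        [[ -n $leftValue ]] || continue        # skip blank lines
        mkdir -p -- "$leftValue"               # folder named after the number
        touch -- "$leftValue/$rightValue"      # assumption: file goes inside it
    done < "$file"
}
```

Calling process_list list.txt would then give access to both sides on every iteration, without the word splitting that for line in $(cat list.txt) performs.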

shellm: A one-file Ollama CLI client written in bash

https://github.com/Biont/shellm

https://redd.it/1gdp44f

@r_bash

Confirming speed / bash practices

Long story short, I have a CSV file, 5 fields.

I read it into bash, and used IFS / while loop to read through the delimiters.

I'd say the CSV file in total is roughly 40MB. Pretty large for a CSV.

I went back and just decided to add a condition to check if the folder existed, because each row can be placed in different folders depending on the category.

When I added that check along with mkdir, I noticed that the speed dramatically went to hell. Without mkdir, the file completed in about 2 minutes. When I add mkdir -p, the time to run the file went above 30 minutes, and then I just killed the script because it wasn't worth waiting anymore.

Is mkdir that heavy in a while loop? Or is there a better way to do this to ensure the sub-folders are created? Obviously I can't do what I was doing, because that just tanked the speed.

Just to give an idea, all I did was:

if test ! -d ${CAT}; then

mkdir -p ${CAT}

fi

Which I guess I could just do mkdir -p and the check doesn't really matter. But I was just wondering what aspect is what caused the slow-down, using test or mkdir, and if there's a much faster set of commands when working inside a loop, or should I try to do as much as possible outside the loop and do the bare minimum inside.

I could see it adding a few minutes on, but I wasn't expecting such a dramatic change.

I'm sitting here running tests, and it appears if I do anything at all command based, it kills the speed. I tried tr just to manipulate a string, and that also did it.

https://redd.it/1gee908

@r_bash
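
A likely explanation for the slowdown described above: mkdir and tr are external programs, so every call starts a new process, once per CSV row, while test is a bash builtin and costs very little. A minimal sketch of one way around it is to remember which categories were already handled so mkdir runs once per distinct category. The column position, the plain comma delimiter, and the function name make_category_dirs are assumptions, not details from the post.

```bash
#!/usr/bin/env bash
# Sketch: call mkdir once per distinct category instead of once per row.
# Assumptions: comma-separated, no quoted fields, category in the 5th column.
make_category_dirs() {
    local file=$1 f1 f2 f3 f4 cat
    local -A seen=()                 # categories already handled (bash 4+)
    while IFS=, read -r f1 f2 f3 f4 cat; do
        [[ -n $cat ]] || continue
        if [[ -z ${seen[$cat]+x} ]]; then
            mkdir -p -- "$cat"       # external command: runs once per category
            seen[$cat]=1
        fi
        # String edits can also stay inside bash, e.g. lowercase without tr:
        # lower=${cat,,}
    done < "$file"
}
```

The same idea applies to tr: parameter expansions such as ${var,,} or ${var//a/b} are handled by the shell itself and avoid a process per row.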

cat match string / move to end of file

I've been over a few different websites reading up on this, but I feel like I'm missing something stupid.

I have a file, which contains a mix of ipv4 and ipv6 addresses. I'd like to use sed to match all ipv6 addresses in the file, cut them from their current position, and move them to the end of the file.

I've tried a few ways to do this, including using cat to read in the file, then using sed to do the action. It seems to be finding the right lines, but I read online that /d should be delete, and I'm trying to just get that to work before I even try to append to the end of the file.

cat iplist.txt | sed -n "/::/d"

I haven't even figured out the part of appending to the end of the file yet, I just wanted to get it to delete the right lines, and then add it back

cat iplist.txt | sed -n "/::/d" >> iplist.txt

https://redd.it/1gfgi10

@r_bash
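
Two things stand out in the commands above: sed -n suppresses all automatic output, so sed -n "/::/d" prints nothing at all, and appending to iplist.txt while it is still being read is unsafe. As a rough sketch of the whole task, awk can hold the IPv6 lines back and print them last. It assumes one address per line and treats any line containing a colon as IPv6 (not every IPv6 address contains "::"); the function name move_ipv6_to_end is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: move every IPv6 line to the end of the file, keeping relative order.
# Assumption: one address per line; a line containing ":" is treated as IPv6.
move_ipv6_to_end() {
    local file=$1 tmp
    tmp=$(mktemp) || return 1
    if awk '
        /:/ { v6[++n] = $0; next }   # hold IPv6 lines back
            { print }                # print everything else immediately
        END { for (i = 1; i <= n; i++) print v6[i] }
    ' "$file" > "$tmp"; then
        cat -- "$tmp" > "$file"      # write back only after awk has finished
    fi
    rm -f -- "$tmp"
}
```

Writing to a temporary file first means the original is only overwritten once the reordered copy is complete.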

M3U file list

I know I can create a file list with ls -1 > filename.txt, but I don't know how to prepend the directory path. I'm trying to create an m3u file list I can transfer to Musicolet on my phone. Can someone point me in the right direction?

https://redd.it/1gfk5jr

@r_bash
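
One possible direction for the playlist question above is to skip ls and let a glob supply the names, printing each with whatever path prefix the player expects. This is a sketch under assumptions: the files end in .mp3, the function name make_m3u is invented here, and the right prefix for Musicolet depends on where the music ends up on the phone.

```bash
#!/usr/bin/env bash
# Sketch: print one playlist line per audio file, with a chosen path prefix.
# Assumptions: files end in .mp3; the prefix defaults to the directory given.
make_m3u() {
    local dir=${1%/} prefix=${2:-${1%/}} f
    for f in "$dir"/*.mp3; do
        [[ -e $f ]] || continue              # glob matched nothing
        printf '%s/%s\n' "${prefix%/}" "${f##*/}"
    done
}
```

For example, make_m3u "$PWD" > playlist.m3u lists the current directory with its full path, and a second argument replaces that path with a different one for the target device.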

File names with spaces as arguments

I want to merge a bunch of PDFs. The file names have spaces: a 1.pdf, b 2.pdf, a 3.pdf. And there are a lot of them.

I tried this script:

merge $@

And called it with merge.sh *.pdf

The script got each space-separated piece as a separate argument: a, 1.pdf, b, 2.pdf, a, 3.pdf.

Is there a way to feed these file names without having to enclose each in quotes?

https://redd.it/1gg0eh2

@r_bash
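
The behaviour described above comes from the unquoted $@ inside the script, not from the *.pdf on the command line: the shell already passes each matched file as one argument, but an unquoted $@ is split again at spaces. A small sketch that makes the difference visible (the helper names are made up for illustration):

```bash
#!/usr/bin/env bash
# Sketch: compare how many arguments a command receives with and without quotes.
count_args() { echo "$#"; }

pass_unquoted() { count_args $@; }     # word-splits: "a 1.pdf" becomes 2 args
pass_quoted()   { count_args "$@"; }   # keeps each original argument intact
```

In merge.sh that would mean writing merge "$@" instead of merge $@, assuming merge accepts the file names as separate arguments; calling the script with *.pdf then needs no extra quoting.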

Help (Newbie)

If I'm going to learn bash scripting, where should I start, and how? I can understand bash scripts, but I can't write them myself.

https://redd.it/1gge37v

@r_bash

6 Techniques I Use to Create a Great User Experience for Shell Scripts

https://nochlin.com/blog/6-techniques-i-use-to-create-a-great-user-experience-for-shell-noscripts

https://redd.it/1gi0z9o

@r_bash

Is there a CLI command to run default application against a file?

Example - you have a zingo file so you can enter

the-starter-command abc.zingo

and Zingo abc.zingo gets started - the-starter-command knows about the default application for different file types.

Real example - LibreOffice for .doc and .odt files.

https://redd.it/1gjid8x

@r_bash
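
On most Linux desktops the command asked about above is xdg-open (from xdg-utils), and macOS ships a similar command called open. A small wrapper, sketched here with the made-up name open_default, could pick whichever is available:

```bash
#!/usr/bin/env bash
# Sketch: open a file with the desktop's default application.
# Assumption: either xdg-open (Linux desktops) or open (macOS) is available.
open_default() {
    local opener
    if command -v xdg-open >/dev/null 2>&1; then
        opener=xdg-open
    elif command -v open >/dev/null 2>&1; then
        opener=open
    else
        echo "no known opener found" >&2
        return 1
    fi
    "$opener" "$1"
}
```

With that in place, open_default report.odt would hand the file to whatever application the desktop has registered for .odt files.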
