A simple Bash function that allows the user to quickly search and match all functions loaded in the current environment

### Idea:

- The following command will display any functions in your environment that contain a direct match to the value of the first argument passed and nothing else.

### Example:

To return any function that contains the exact text `Function: $func`, issue the command below (`list_func()` must be loaded into your environment for this to work). It will return the entire `list_func()` definition for display, along with any other functions that matched.

list_func 'Function: $func'

list_func() {
    local bash_func_dir script filename filepath fileowner func func_body

    # Determine the directory where the bash functions are stored.
    if [[ -d ~/.bash_functions.d ]]; then
        bash_func_dir=~/.bash_functions.d
    elif [[ -f ~/.bash_functions ]]; then
        bash_func_dir=$(dirname ~/.bash_functions)
    else
        echo "Error: No bash functions directory or file found." >&2
        return 1
    fi

    echo "Listing all functions loaded from $bash_func_dir and its sourced scripts:"
    echo

    # Iterate over all .sh files in the bash functions directory.
    for script in "$bash_func_dir"/*.sh; do
        # Skip the unexpanded pattern when no files match
        # (avoids leaving nullglob enabled in the calling shell).
        [[ -e "$script" ]] || continue

        # Get file details.
        filename=$(basename "$script")
        filepath=$(realpath "$script")
        fileowner=$(stat -c '%U:%G' "$script") # Get owner:group

        # Extract function names from the file.
        while IFS= read -r func; do
            # Retrieve the function definition from the current shell;
            # skip names that appear in the file but are not loaded.
            func_body=$(declare -f "$func" 2>/dev/null) || continue

            # If a search term was provided, filter functions by matching the function definition.
            if [[ -n "$1" ]]; then
                grep -q -- "$1" <<< "$func_body" || continue
            fi

            # Print the file header.
            echo "File: $filename"
            echo "Path: $filepath"
            echo "Owner: $fileowner"
            echo

            # Print the full function definition.
            echo "$func_body"
            echo -e "\n\n"
        done < <(grep -oP '^(?:function\s+)?\s*[\w-]+\s*\(\)' "$script" | sed -E 's/^(function[[:space:]]+)?\s*([a-zA-Z0-9_-]+)\s*\(\)/\2/')
    done
}

Cheers guys!

https://redd.it/1jb2u8k

@r_bash
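
For comparison, a shorter sketch that skips the file scan and searches every function currently defined in the shell. `find_func` is a hypothetical name, not part of the post, and the match here is a fixed-string match (`grep -F`) rather than a regular expression.

```shell
#!/bin/bash
# find_func PATTERN: print the definition of every loaded function whose
# body contains PATTERN as a literal string (hypothetical helper).
find_func() {
    local pattern=$1 name body
    # `declare -F` prints lines of the form "declare -f NAME".
    while read -r _ _ name; do
        body=$(declare -f "$name") || continue
        if [[ -z $pattern ]] || grep -qF -- "$pattern" <<< "$body"; then
            printf '%s\n\n' "$body"
        fi
    done < <(declare -F)
}
```

Calling `find_func 'some text'` prints each matching definition; calling it with no argument prints them all.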


Pulling hair out: SSH and sshpass standalone

I have a bit of a problem I have been scrambling to solve and am ready to give up. I'll give it one last shot:

I have a Linux system that is connected to a router. THE GOAL is to ssh into the router from the Linux system, run a command, AND get the output. Seems simple, right?

The Linux system is pretty outdated. NO INTERNET ACCESS. I have access to commands on this Linux system ONLY through PHP functions (don't ask me why, it's stupid and I hate it). E.g. I can run commands by using exec(), I can create new files using file_put_contents(), etc. However, because of this I cannot interact with the terminal directly. I can create a .bash script and run that, or run single commands, but that's pretty much it.

It is actually over 1000 total systems, all of them running almost the same specs. SOME OF THE TARGET SYSTEMS have GNU screen.

The router uses password authentication for ssh connections. Once logged in you are NOT presented with a full shell; instead you are given a numerical list of specific commands that you can type out and then press enter.

The behavior is as follows:

FROM AN UPDATED LINUX TEST MACHINE CONNECTED TO ROUTER WHERE THE ROUTER IP IS 192.168.1.1:

1. ssh admin@192.168.1.1

2. type "yes" and hit enter to allow the unknown key

3. type "password" and hit enter

4. type the command "778635" and hit enter

5. the router returns a code

6. type the second command "66452098" and hit enter

7. the router returns a second code

8. type "exit" and hit enter

A one-liner of this process would look something like:

sshpass -p password ssh -tt -o 'StrictHostKeyChecking=no' admin@192.168.1.1 "778635; 66452098; exit"

Except the router does not execute the commands, because for some reason it never receives what ssh sends it. The solution that works on the TEST MACHINE is:

echo -e '778635\n66452098\nexit' | sshpass -p password ssh -o 'StrictHostKeyChecking=no' -tt admin@192.168.1.1

This works every time on the UPDATED TEST SYSTEM without issue, even after clearing the known hosts file. With this command I am able to run it from PHP:

exec("echo -e '778635\n66452098\nexit' | sshpass -p password ssh -o 'StrictHostKeyChecking=no' -tt admin@192.168.1.1", $a);
return $a;

and I will get the output, which can be parsed and handled.

FROM THE OUTDATED TARGET MACHINE CONNECTED TO THE SAME ROUTER:

Target machine information:

- bash --version shows 4.1.5
- uname -r shows 2.6.29
- ssh -V returns blank
- sshpass -V shows 1.04

The command that works on the updated machine fails AND RETURNS NOTHING. I will detail the reasons I have found below:

I can use screen to open a detached session and then "stuff" it with commands one by one, effectively bypassing sshpass. This allows me to successfully accept the host key and log in to the router, but at that point "stuff" does not pass any input to the router and I cannot execute commands.

The version of ssh on the target machine is so old it does not include an option for 'StrictHostKeyChecking=no'; it returns something to the effect of "invalid option: StrictHostKeyChecking" (sorry, I don't have the exact text). In fact "ssh -V" returns NOTHING and "man ssh" returns "no manual entry for ssh"!

After using screen, however, if I re-execute the first command it will now get farther, because the host has been added to known hosts, but the commands executed on the router will not return anything, and neither will ssh itself, even with the verbose flag. I believe this behavior is caused by an old version of sshpass. I found other people online that had similar issues where the output of the ssh command does not get passed back to the client. I tried several solutions related to redirection but to no avail.

So there are two problems:

1. Old ssh version without a way to bypass host key checking.

2. Old sshpass version not passing the output back to the client.

sshpass not passing back the output of either ssh or the router CLI is the biggest issue - I can't even debug what I don't know is happening. Luckily though, the router does have a command to reboot (111080), and if I execute:

echo -e '111080' | sshpass -p password ssh -tt admin@192.168.1.1

I won't get anything back in the terminal BUT the router DOES reboot. So I know it's working, I just can't get the output back.

So, I still have no way to get the output of the two commands I need executed. As noted above, the "screen" command is NOT available on all of the machines, so even if I found a way to get it to pass the command to the router it would only help for a fraction of the machines.

At this point I am wondering if it is possible to get the needed and updated binaries of both ssh and sshpass, zip them up, convert them to b64, and use file_put_contents() to make a file on the target machine. Although this is over my head, and I would not know how to handle the libraries needed or whether they would even run on the target machine's kernel.

A friend of mine told me I could use python to handle the ssh session, but I could not find enough information on that. The python version on the target machine is 2.6.6.

Any ideas? I would give my left t6ticle to figure this out.

https://redd.it/1jbaskl

@r_bash


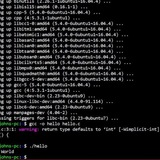

Seeking Feedback on My Bash Script for Migrating APT Keys

Hello everyone!

I recently created a Bash script designed to help migrate APT keys from the deprecated `apt-key` to the new best practices in Ubuntu. The script includes user confirmation before each step, ensuring that users have control over the process. I developed this script using DuckDuckGo's AI tool, which helped me refine my approach.

# What This Script Does:

It exports existing APT keys to the `/etc/apt/trusted.gpg.d/` directory.

It verifies that the keys have been successfully exported.

It removes the old keys from `apt-key`.

It updates the APT package lists.

# Why I Want This:

As Ubuntu continues to evolve, it's important to keep our systems secure and up to date. Migrating to the new key management practices is essential for maintaining the integrity of package installations and updates.

# Questions for the Community:

1. Is this script safe to use? I want to ensure that it won't cause any issues with my system or package management.

2. Will this script work as is? I would appreciate any feedback on its functionality or any improvements that could be made.

Here's the script for your review:

#!/bin/bash

# Directory to store the exported keys
KEY_DIR="/etc/apt/trusted.gpg.d"

# Function to handle errors
handle_error() {
    echo "Error: $1"
    exit 1
}

# Function to prompt for user confirmation
confirm() {
    read -p "$1 (y/n): " -n 1 -r
    echo # Move to a new line
    if [[ ! $REPLY =~ ^[Yy]$ ]]; then
        echo "Operation aborted."
        exit 0
    fi
}

# Check if the directory exists
if [ ! -d "$KEY_DIR" ]; then
    handle_error "Directory $KEY_DIR does not exist. Exiting."
fi

# List all keys in apt-key
KEYS=$(apt-key list | grep -E 'pub ' | awk '{print $2}' | cut -d'/' -f2)

# Check if there are no keys to export
if [ -z "$KEYS" ]; then
    echo "No keys found to export. Exiting."
    exit 0
fi

# Export each key
for KEY in $KEYS; do
    echo "Exporting key: $KEY"
    confirm "Proceed with exporting key: $KEY?"
    if ! sudo apt-key export "$KEY" | gpg --dearmor | sudo tee "$KEY_DIR/$KEY.gpg" > /dev/null; then
        handle_error "Failed to export key: $KEY"
    fi
    echo "Key $KEY exported successfully."
done

# Verify the keys have been exported
echo "Verifying exported keys..."
confirm "Proceed with verification of exported keys?"
for KEY in $KEYS; do
    if [ -f "$KEY_DIR/$KEY.gpg" ]; then
        echo "Key $KEY successfully exported."
    else
        echo "Key $KEY failed to export."
    fi
done

# Remove old keys from apt-key
echo "Removing old keys from apt-key..."
confirm "Proceed with removing old keys from apt-key?"
for KEY in $KEYS; do
    echo "Removing key: $KEY"
    if ! sudo apt-key del "$KEY"; then
        echo "Warning: Failed to remove key: $KEY"
    fi
done

# Update APT
echo "Updating APT..."
confirm "Proceed with updating APT?"
if ! sudo apt update; then
    handle_error "Failed to update APT."
fi

echo "Key migration completed successfully."

I appreciate any insights or suggestions you may have. Thank you for your help!

https://redd.it/1jaz34c

@r_bash


Using grep / sed in a bash script...

Hello, I've spent a lot more time than I'd like to admit trying to figure out how to write this script. I've looked through the official Bash docs and many online StackOverflow posts.

This script is supposed to take a directory as input, e.g. /lib/64, and recursively change files in a directory to the new path, e.g. /lib64.

The command is supposed to be invoked by doing

./replace.sh /lib/64 /lib64

#!/bin/bash
# filename: replace.sh
IN_DIR=$(sed -r 's/\//\\\//g' <<< "$1")
OUT_DIR=$(sed -r 's/\//\\\//g' <<< "$2")

echo "$1 -> $2"
echo $1
echo "${IN_DIR} -> ${OUT_DIR}"

grep -rl -e "$1" | xargs sed -i 's/${IN_DIR}/${OUT_DIR}/g'

# test for white space ahead, white space behind
grep -rl -e "$1" | xargs sed -i 's/\s${IN_DIR}\s/\s${OUT_DIR}\s/g'

# test for beginning of line ahead, white space behind
grep -rl -e "$1" | xargs sed -i 's/^${IN_DIR}\s/^${OUT_DIR}\s/g'

# test for white space ahead, end of line behind
grep -rl -e "$1" | xargs sed -i 's/\s${IN_DIR}$/\s${OUT_DIR}$/g'

# test for beginning of line ahead, end of line behind
grep -rl -e "$1" | xargs sed -i 's/^${IN_DIR}$/^${OUT_DIR}$/g'

IN_DIR and OUT_DIR take the two directory arguments, then use sed to insert a backslash before each slash. grep -rl -e "$1" | xargs sed -i 's/${IN_DIR}/${OUT_DIR}/g' is supposed to go recursively through the directory tree from where the command is invoked, replacing the original path (arg1) with the new path (arg2).

No matter what I've tried, this will not function correctly. The original file that I'm using to test the functionality remains unchanged, despite being able to run the grep ... | xargs sed ... manually with success.

What am I doing wrong?

Many thanks

https://redd.it/1javegq

@r_bash
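
One likely culprit, sketched under assumptions: inside single quotes the shell hands `${IN_DIR}` to sed literally, so sed looks for that text rather than the path. The sketch below uses double quotes so the variables expand, and `|` as the sed delimiter so slashes need no escaping. `replace_path` is a hypothetical name, and GNU grep, xargs, and sed are assumed.

```shell
#!/bin/bash
# replace_path OLD NEW: replace the literal string OLD with NEW in every
# file under the current directory (hypothetical helper).
replace_path() {
    local old=$1 new=$2 old_re new_re
    # Escape characters that are special in a sed pattern.
    old_re=$(printf '%s' "$old" | sed 's/[][\.*^$|]/\\&/g')
    # Escape characters that are special in a sed replacement.
    new_re=$(printf '%s' "$new" | sed 's/[\&|]/\\&/g')
    # -F matches OLD literally; -Z and -0 keep odd file names intact.
    grep -rlFZ -- "$old" . | xargs -0 -r sed -i "s|${old_re}|${new_re}|g"
}
```

From the top of the tree to be rewritten, `replace_path /lib/64 /lib64` performs the substitution in place.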


find, but exclude file from results if another file exists?

I found a project that locally uses whisper to generate subtitles from media. Bulk translations are done by passing the command line a text file that contains absolute file paths. I can generate this file easily enough with

find /mnt/media/ -iname '*.mkv' -o -iname '*.m4v' -o -iname '*.mp4' -o -iname '*.avi' -o -iname '*.mov' -o -name '*.mpg' > media.txt

The goal would be to exclude media that already has an .srt file with the same filename. So show.mkv that also has show.srt would not show up.

I think this goes beyond find and needs to be piped elsewhere, but I am not quite sure where to go from here.

https://redd.it/1jc6ht5

@r_bash
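
A minimal sketch of one way to do this, assuming the .srt sits next to the media file and shares its base name. `list_unsubtitled` is a hypothetical name; the extension list is taken from the command in the post.

```shell
#!/bin/bash
# list_unsubtitled DIR: print media files under DIR that have no sibling
# .srt file with the same base name (hypothetical helper).
list_unsubtitled() {
    local dir=$1 file
    find "$dir" -type f \( -iname '*.mkv' -o -iname '*.m4v' -o -iname '*.mp4' \
        -o -iname '*.avi' -o -iname '*.mov' -o -iname '*.mpg' \) -print0 |
    while IFS= read -r -d '' file; do
        # ${file%.*} strips the extension; print only if no .srt exists.
        [[ -e "${file%.*}.srt" ]] || printf '%s\n' "$file"
    done
}
```

`list_unsubtitled /mnt/media > media.txt` would then produce the list file.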


Install NVM with bash

Anyone have a handy script that will install nvm + LTS nodejs from a bash script?

I use the following commands in an interactive shell just fine, but for the life of me I can't get them to install from a bash script on Ubuntu 22.04.

curl -o- https://raw.githubusercontent.com/nvm-sh/nvm/v0.39.1/install.sh | bash && source ~/.bashrc && nvm install --lts

https://redd.it/1jc4ge2

@r_bash
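
One possible explanation, assuming the stock Ubuntu ~/.bashrc: that file returns early when the shell is not interactive, so `source ~/.bashrc` inside a script never reaches the lines the nvm installer appended. A minimal sketch that sources nvm.sh directly instead; `install_nvm_lts` is a hypothetical name, and `$HOME/.nvm` is assumed to be the install directory (the installer's default when NVM_DIR and XDG_CONFIG_HOME are unset).

```shell
#!/bin/bash
# install_nvm_lts: install nvm, load it into this shell, then install the
# current LTS release of Node.js (hypothetical helper; needs network access).
install_nvm_lts() {
    curl -o- https://raw.githubusercontent.com/nvm-sh/nvm/v0.39.1/install.sh | bash || return 1
    # Assumption: the installer used its default directory.
    export NVM_DIR="${NVM_DIR:-$HOME/.nvm}"
    # Load nvm as a shell function without going through ~/.bashrc.
    . "$NVM_DIR/nvm.sh" || return 1
    nvm install --lts
}
```

The script would end with a call to `install_nvm_lts`.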


How to make a script to populate an array in another script?

I'm too new to know what I even need to look up in the docs here. Hopefully this makes sense.

I have this script:

#!/bin/bash
arr[0]="0"
arr[1]="1"
arr[2]="2"
rand=$(( RANDOM % ${#arr[@]} ))
xdotool type "${arr[$rand]}"

Which, when executed, types one of the characters 0, 1, or 2 at random. Instead of hard coding those values to be selected at random, I would like to make another script that prompts the user for the values in the array.

I.e. execute the new script. It asks for a list of items. I enter "r", "g", "q". Now the example script above will type one of the characters r, g, or q at random.

I'm trying to figure out how to set the array arbitrarily without editing the script manually every time I want to change the selection of possible random characters.

https://redd.it/1jc3m5l

@r_bash
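
A minimal sketch of one approach, assuming the two scripts can share a small file: one function saves the items, the other loads them into an array and picks one. The file path and the names `save_choices` and `pick_random` are hypothetical.

```shell
#!/bin/bash
# Hypothetical location for the saved choices.
choices_file="${CHOICES_FILE:-$HOME/.random_choices}"

# Prompt for a space-separated list and store one item per line.
save_choices() {
    local -a items
    read -r -p "Items (space-separated): " -a items
    printf '%s\n' "${items[@]}" > "$choices_file"
}

# Load the saved items into an array and print one of them at random.
pick_random() {
    local -a arr
    mapfile -t arr < "$choices_file"
    (( ${#arr[@]} > 0 )) || return 1
    printf '%s\n' "${arr[RANDOM % ${#arr[@]}]}"
}
```

The typing script would then call `xdotool type "$(pick_random)"`, and the prompting script would call `save_choices`.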


My while read loop isn't looping

I have a folder structure like so:

/path/to/directory/foldernameAUTO_001
/path/to/directory/foldername_002

I am trying to search through /path/to/directory to find instances where the directory "foldernameAUTO" has any other directories of the same name (potentially without AUTO) with a higher number after the underscore.

For example, if I have a folder called "testfolderAUTO_001" I want to find "testfolder_002" or "testfolderAUTO_002". Hope all that makes sense.

Here is my loop:

#!/bin/bash
Folder=/path/to/directory/
while IFS='/' read -r blank path to directory foldernameseq; do
    echo "Found AUTO of $foldernameseq"
    foldername=$(echo "$foldernameseq" | cut -d_ -f1) && echo "foldername is $foldername"
    seq=$(echo "$foldernameseq" | cut -d_ -f2) && echo "sequence is $seq"
    printf -v int '%d/n' "$seq"
    (( newseq=seq+1 )) && echo "New sequence is 00$newseq"
    echo "Finding successors for $foldername"
    find $Folder -name "$foldername"_00"$newseq"
    noauto=$(echo "${foldername:0:-4}") && echo "NoAuto is $noauto"
    find $Folder -name "noauto"_00"newseq"
    echo ""
done < <(find $Folder -name "*AUTO*")

And this is what I'm getting as output. It just lists the same directory over and over:

Found AUTO of foldernameAUTO_001
foldername is foldernameAUTO
sequence is 001
New sequence is 002
Finding successors for foldernameAUTO
NoAUTO is foldername

Found AUTO of foldernameAUTO_001
foldername is foldernameAUTO
sequence is 001
New sequence is 002
Finding successors for foldernameAUTO
NoAUTO is foldername

Found AUTO of foldernameAUTO_001
foldername is foldernameAUTO
sequence is 001
New sequence is 002
Finding successors for foldernameAUTO
NoAUTO is foldername

https://redd.it/1jdel1z

@r_bash
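
A sketch of one way to approach the search, not a diagnosis of the loop above. It assumes the directories sit directly under the search root and are named `<name>AUTO_<digits>` or `<name>_<digits>`; `find_successors` is a hypothetical name. It uses parameter expansion instead of `cut`, and forces base 10 so that a sequence such as 008 is not read as octal.

```shell
#!/bin/bash
# find_successors ROOT: for every directory ROOT/<name>AUTO_<seq>, print the
# sibling directories <name>_<n> or <name>AUTO_<n> with n greater than seq.
find_successors() {
    local root=$1 dir base name seq other n
    while IFS= read -r -d '' dir; do
        base=${dir##*/}         # e.g. testfolderAUTO_001
        name=${base%AUTO_*}     # e.g. testfolder
        seq=${base##*_}         # e.g. 001
        [[ $seq =~ ^[0-9]+$ ]] || continue
        for other in "$root/$name"_* "$root/${name}AUTO"_*; do
            [[ -d $other ]] || continue
            n=${other##*_}
            [[ $n =~ ^[0-9]+$ ]] || continue
            # 10# forces decimal interpretation of zero-padded numbers.
            if (( 10#$n > 10#$seq )); then
                printf '%s -> %s\n' "$base" "${other##*/}"
            fi
        done
    done < <(find "$root" -maxdepth 1 -type d -name '*AUTO_[0-9]*' -print0)
}
```

`find_successors /path/to/directory` prints one `AUTO directory -> successor` line per match.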


Here's how I use Bash Aliases in the Command Line, including the Just-for-Fun Commands

https://mechanisticmind.substack.com/p/using-the-terminal-bash-aliases

https://redd.it/1jfvxwh

@r_bash

Substack

Using the Terminal — Bash Aliases

Some practical advice about using and getting used to the Linux terminal

DD strange behavior

I'm sending a 38-byte string from one device to a PC via UART. stty is configured as needed: 9600 8N1. I want to catch the incoming data via dd, and I know it's exactly 38 bytes.

dd if=/dev/ttyusb0 bs=1 count=38

But after receiving 38 bytes it still sits and waits until more data arrives. As a workaround I used timeout 1, which makes dd work as expected, but I don't like that solution. I eventually switched to using cat, but I still want to understand why dd behaves like that. Shouldn't it terminate with a status code right after 38 bytes?

https://redd.it/1jgxyit

@r_bash


"return" doesn't return the exit code of the last command in a function

#!/bin/bash

bar() {
    echo bar
    return
}

foo() {
    echo foo
    bar
    echo "Return code from bar(): $?"
    exit
}

trap foo SIGINT

while :; do
    sleep 1
done

I have this example script. When I start it and press CTRL-C (SIGINT):

# Expected output:
^Cfoo
bar
Return code from bar(): 0

# Actual output:
^Cfoo
bar
Return code from bar(): 130

I understand that 130 (128 + 2) is SIGINT. But why is the return statement in the bar function ignoring the successful echo?

https://redd.it/1jgvheu
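
The Bash manual notes that when return is executed without an argument by a trap handler, the status it uses is that of the last command executed before the trap handler ran. That appears to be what happens here: the interrupted sleep leaves 130 behind. A minimal sketch of a workaround, assuming an explicit status is acceptable, is to pass the status to return yourself. The names handler and demo, the use of SIGUSR1, and the exit 7 standing in for the interrupted sleep are illustrative choices, not from the post.

```bash
bar() {
    echo bar
    return "$?"   # explicit: $? here is the status of the echo above
}

handler() {
    bar
    echo "Return code from bar(): $?"
}

# Illustrative driver: deliver SIGUSR1 to this shell while a command
# that exits with status 7 is running, then let the trap fire.
demo() {
    local pid=$BASHPID
    trap handler USR1
    ( kill -USR1 "$pid"; exit 7 )
    trap - USR1
}
```

Calling demo runs the handler once the subshell has finished, and bar reports the status of its own echo instead of the status left over from before the trap. Writing return 0 would work just as well when the function is meant to succeed unconditionally.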

@r_bash

send commands via stdin

Hi everyone, I am working with gdb, and I want to send commands to it via stdin.

Look at this command:

`echo -e "i r\n" > /proc/\`ps aux | grep "gdb ./args2" | awk 'NR == 1 {print $2}'\`/fd/0`

I also tried this:

`echo -e "i r\r\n" > /proc/\`ps aux | grep "gdb ./args2" | awk 'NR == 1 {print $2}'\`/fd/0`

I expected this to send `i r` to gdb. When I check gdb, the string I sent, "i r", is there, but it was not executed, and I had to press Enter myself. How can I do this without pressing Enter?

Note: I do not want to use any tools like expect; I want to do it through echo and stdin only.
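
For a program started in a terminal, /proc/PID/fd/0 normally points at the terminal device, and writing to a terminal device displays the text instead of queueing it as input, which matches what the post describes. One way to stay with echo and plain stdin is to start the program with its stdin attached to a named pipe. The sketch below is only an assumption about how that could look; feed_commands is a made-up name, and cat stands in for gdb so the example can run anywhere.

```bash
# Hypothetical helper: run a program with stdin connected to a FIFO,
# write one command line into the FIFO, then close it.
feed_commands() {
    local line=$1; shift
    local dir fifo fd pid status
    dir=$(mktemp -d) || return 1
    fifo=$dir/stdin.fifo
    mkfifo "$fifo" || return 1

    "$@" < "$fifo" &          # the reader blocks until a writer opens
    pid=$!

    exec {fd}> "$fifo"        # open the write end and keep it open
    echo "$line" >&"$fd"      # echo appends the newline the reader needs
    exec {fd}>&-              # closing the write end sends end-of-file

    wait "$pid"
    status=$?
    rm -rf "$dir"
    return "$status"
}

feed_commands 'i r' cat       # cat stands in for: gdb ./args2
```

With a real debugger the descriptor would be kept open for as long as more commands need to be sent, since closing it ends the program's input.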

https://redd.it/1jhergb

@r_bash

Continue the script after an if?

Hi there, I'm struggling :)

I'm trying to make a small bash script, here it is:

#!/bin/bash
set -x #;)
read user
if [[ -n $user ]]; then
    exec adduser $user
else
    exit 1
fi
mkdir $HOME/$user && chown $user
mkdir -p $HOME/$user/{Commun,Work,stuff}

I am wondering why the commands after the if statement won't execute?
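
exec replaces the running shell with the given command instead of starting it as a child, so no line after a successful exec is ever reached. That is the likely reason the mkdir lines never run. A minimal sketch of the difference, using true in place of adduser so it is harmless to run; the function names are made up.

```bash
# With exec: the inner shell becomes `true`, so the echo never runs.
with_exec() {
    bash -c 'exec true; echo "after exec"'
}

# Without exec: `true` runs as a child and the script carries on.
without_exec() {
    bash -c 'true; echo "after plain call"'
}

with_exec      # prints nothing
without_exec   # prints: after plain call
```

In the script from the post, dropping the exec in front of adduser would let execution continue past the if statement.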

https://redd.it/1ji02u4

@r_bash

Why is this echo command printing the error to terminal?

I was expecting the following command to print nothing, but for some reason it prints the error message from ls. Why? (I am on Fedora 41, GNOME Terminal, bash 5.2.32.)

echo $(ls /sdfsdgd) &>/dev/null

If someone knows, please explain. I just can't get this out of my head.
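
The redirection in that command belongs to echo only. The command substitution is expanded before echo's redirections take effect, so ls still writes its error to the shell's own stderr. A short sketch of the original shape and two ways to silence it; the function names and the path are placeholders.

```bash
missing=/nonexistent-path-for-demo

# Same shape as the command in the post: the ls error still escapes.
leaky() {
    echo $(ls "$missing") &>/dev/null
}

# Redirect inside the substitution, where ls actually runs.
quiet_inner() {
    echo $(ls "$missing" 2>/dev/null) &>/dev/null
}

# Or wrap everything in a group so the redirection is set up first.
quiet_group() {
    { echo $(ls "$missing"); } &>/dev/null
}
```

The group form works because the redirection on the braces is applied before anything inside them is expanded.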

https://redd.it/1ji5o1o

@r_bash

How to run every script in a directory one at a time, pause after each, and wait for user input to advance to the next script?

find . -type f -executable -exec {} \; runs every script in the directory, automatically running each as soon as the previous one is finished. I would like to see the output of each script individually and manually advance to the next.

https://redd.it/1jiimyg
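
A minimal sketch of one way to do this, assuming GNU find and sort: feed the file list to a loop on a separate file descriptor so that stdin stays free for the "press Enter" prompt. The name run_each and the choice of -maxdepth 1 (top-level files only) are assumptions made for the example.

```bash
# Hypothetical helper: run each executable file in a directory in turn,
# waiting for Enter before moving on to the next one.
run_each() {
    local dir=${1:-.} script
    # The file list arrives on fd 3 so stdin stays free for the prompt.
    while IFS= read -r -d '' -u 3 script; do
        printf '=== %s ===\n' "$script"
        "$script"
        read -r -p 'Press Enter for the next script... ' _
    done 3< <(find "$dir" -maxdepth 1 -type f -executable -print0 | sort -z)
}
```

Running run_each . in the directory prints a header for each script, runs it, and then waits at the prompt. Removing -maxdepth 1 would make it descend into subdirectories like the original find command.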

@r_bash

Sourcing for bash -c fails, but bash -i -c works

I think I am going insane already....

I need to run a lot of commands in parallel, but I want to ensure there is a timeout. So I tried this and every variation I can think of:

timeout 2 bash -c ". ${BASH_SOURCE}; function_inside_this_file "$count"" > temp_findstuff_$count &

I am 100% unable to get this to work. I tried cat to ensure that BASH_SOURCE is defined properly. Yes, the file prints to stdout perfectly fine, so the path is definitely correct. Sourcing with && echo Success || echo Failed prints Success, so the sourcing itself is working. I tried with export. I tried eval. Eval does not work, as it is not a program, but just a function of the script, and it cannot find it. Here comes the issue:

timeout 2 bash -c ". ${BASH_SOURCE}; function_inside_this_file "$count""

Does not output anything.

timeout 2 bash -i -c ". ${BASH_SOURCE}; function_inside_this_file "$count""

This outputs the result to the console as expected. But when I combine the timeout with an & at the end to make it parallel, and follow the loop with a wait statement, the script never finishes executing, not even after 5 minutes. Adding an exit after the command also does nothing. I am now at 500 processes. What is going on?

There MUST be a way to run a function from a script file (with a relative path like the one from $BASH_SOURCE) with a given timeout in parallel. I cannot get it to work. I tried like 100 different variations of this command and none work. The first book of Moses is short compared to the list of variations I tried.

You want to know what pisses me off further?

This works:

timeout 2 bash -i -c ". ${BASH_SOURCE}; function_inside_this_file "$count"; exit;"

But of course it is dang slow.

This does not work:

timeout 2 bash -i -c ". ${BASH_SOURCE}; function_inside_this_file "$count"; exit;" &

It just is stuck forever. HUUUUH????????? I am really going insane. What is so wrong with the & symbol? Any idea please? :(
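
One widely used alternative, offered here as an assumption since the post does not show the rest of the script: export the function with export -f so that a plain bash -c child can see it, and pass the argument positionally instead of nesting double quotes. This also avoids sourcing ${BASH_SOURCE} inside the child, which re-runs every top-level command of the script, including the loop that launches the children, and would be one explanation for the growing process count. An interactive shell (bash -i) started in the background may additionally be stopped when it tries to use the terminal. The names slow_task and run_all and the output directory argument are made up for the sketch.

```bash
# Stand-in for function_inside_this_file from the post.
slow_task() {
    sleep "$1"
    echo "finished after $1s"
}
export -f slow_task   # make the function visible to child bash processes

# Run slow_task once per argument, in parallel, each under a time limit.
run_all() {
    local limit=$1 outdir=$2 count
    shift 2
    for count in "$@"; do
        timeout "$limit" bash -c 'slow_task "$1"' _ "$count" \
            > "$outdir/temp_findstuff_$count" &
    done
    wait   # returns once every child has finished or been killed
}
```

For example, run_all 2 . 1 5 would leave a result in temp_findstuff_1 and an empty temp_findstuff_5, because the second task is killed after two seconds.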

https://redd.it/1jive1g

@r_bash

Did I miss something

What happened to r/bash? Did someone rm -rf / from it? I could have sworn there were posts here.

https://redd.it/1jiuztz

@r_bash

GitHub - helpermethod/alias-investigations

This is a small Bash function to detect if an alias clashes with an existing command or shell builtin.

Just source ai and give it a try.

https://github.com/helpermethod/alias-investigations
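
To give a rough idea of what such a check involves, here is a small sketch built on the type builtin. It is not the code from the linked repository; name_is_taken is a hypothetical function that reports whether a name already exists as something other than an alias.

```bash
# Hypothetical check, not the code from the linked repository:
# succeed if NAME already exists as something other than an alias.
name_is_taken() {
    local name=$1 kind
    # type -at prints one word per match: alias, keyword, function,
    # builtin, or file.
    while IFS= read -r kind; do
        [[ $kind != alias ]] && return 0
    done < <(type -at "$name" 2>/dev/null)
    return 1
}
```

A call such as name_is_taken cd succeeds because cd is a builtin, so an alias of that name would shadow it.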

https://redd.it/1jmkk6m

@r_bash

What is the professional/veteran take on the use/overuse of "exit 1"?

Is it okay, or lazy, or taboo, or just plain wrong to use too many exits in a script?

For context... I've been dabbling with multiple languages over the past years in my off time (self-taught, not at all a profession), but noticed in this one program I'm building (that is kinda getting away from me, if you know what I mean), I've got multiple functions and if statements, and noticed tonight it seems like I have a whole lot of "exit". As I write the script, I'm using them to stop the script when an error occurs. But I guarantee there are some redundancies there, between the functions that call other functions and other scripts. If that makes sense.

It's working and I get the results I'm after. I'm just curious what the community take is outside of my little world...
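
One common convention, shown here only as a sketch and not as the single right answer: let helper functions report failure with return, and keep exit in one small helper that the top level calls when it decides to stop. The names die, require_name, and main are made up for the example.

```bash
# Report an error and stop the whole script; the only place that calls exit.
die() {
    printf 'error: %s\n' "$*" >&2
    exit 1
}

# Helper functions signal failure with return and leave the decision
# to the caller.
require_name() {
    [[ -n $1 ]] || return 1
}

main() {
    require_name "$1" || die "no name given"
    echo "processing $1"
}
```

With this layout, functions stay reusable from other scripts because they never terminate the caller on their own, and the number of exit statements stops growing with the number of checks.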

https://redd.it/1jll0j9

@r_bash
