Multiple sourcing issue

Hi, I have a problem in my project, and it comes from my library.

I have a directory called lib containing multiple .sh files:

    ├── application
    │   └── main.sh
    ├── lib
    │   ├── arrays.sh
    │   ├── files.sh
    │   ├── math.sh
    │   └── out.sh

The `out.sh` file has some functions for error handling and also some `readonly` variables. Other files in the library use the error-handling functions from `out.sh`.

Example:

`application/main.sh:`

    source "../lib/out.sh"
    source "../lib/arrays.sh"

    do_something || error_handle .......

`lib/arrays.sh:`

    source "out.sh"

    func1(){
        # some code
    }

`lib/out.sh:`

    readonly __STDOUT=1
    readonly __STDERR=2
    readonly __ERROR_MESSAGE_ENABLE=0

    error_handle(){
        # some code
    }

Now the problem: when I run the project, it tells me that `$__STDOUT` and the other variables are `readonly` and cannot be modified. That happens because the variables are declared twice: first when my app sources `out.sh`, and again when `arrays.sh` sources `out.sh`. So my question is, what is the best way to solve this? I already thought about making the variables not read-only, but I feel that is not the best way, because the file would still be sourced multiple times, and I want all library functions to keep access to the error-handling functions.
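One common way to approach this is an include guard at the top of `out.sh`, so that sourcing the file a second time returns before the `readonly` lines run again. A minimal sketch, assuming bash; the marker name `__OUT_SH_LOADED` and the body of `error_handle` are placeholders, not taken from the post:

```bash
# lib/out.sh (sketch)

# Include guard: if this file was already sourced, stop here.
# `return` is valid at top level only because the file is sourced, not executed.
# __OUT_SH_LOADED is a hypothetical marker variable; ${var:-} keeps `set -u` happy.
if [[ -n ${__OUT_SH_LOADED:-} ]]; then
    return 0
fi
__OUT_SH_LOADED=1

readonly __STDOUT=1
readonly __STDERR=2
readonly __ERROR_MESSAGE_ENABLE=0

error_handle() {
    # Placeholder body: print the message on stderr.
    printf 'error: %s\n' "$*" >&"$__STDERR"
}
```

With a guard like this, both `main.sh` and `arrays.sh` can keep their own `source` lines, and the variables can stay `readonly`.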

https://redd.it/1kj9wya

@r_bash

How to better structure this gitfzf script

This is my new script for getting an fzf list of all git repos in my home directory and applying git commands to the selected repo.

I want a better structure for this script, minimizing the LOC and adding more functionality.

    #!/usr/bin/env bash
    # use fzf for selecting the repos from the desktop
    # and then select one, apply a command such as status, sync with remote, etc.

    localrepo(){ # prints the parent directory of every .git dir under $1
        if [[ -z $REPOS ]]; then
            # one repo path per line; reuse $REPOS if it is already set
            export REPOS="$(find "$1" -name ".git" -type d -exec dirname {} \;)"
        fi
        printf '%s\n' "$REPOS"
    }

    reposelection(){ # fzf selection from all repos
        local selected
        local repo
        repo=$(localrepo "$1")
        selected=$( printf '%s\n' "$repo" | fzf +m --height 50% --style full --input-label ' Destination ' --preview 'tree -C {}')
        echo "$selected"
    }

    gitfnc(){
        local -A gitcmda=( ["branch_commit"]="branch -vva" )
        local gitcmd=("status" "status -uno" "log" "branch -vva" "commit" "add" "config") # simple git commands
        local selected
        selected=$( printf '%s\n' "${gitcmd[@]}" | fzf +m --height 50% --style full --input-label ' Destination ' --preview 'tree -C {}')
        echo "$selected"
    }

    repo=$(reposelection "$HOME")
    cmd="cmd"
    while [[ -n $cmd ]]; do
        cmd=$(gitfnc)
        if [[ -z $repo || -z $cmd ]]; then
            exit 0
        fi
        printf '\n git -C %s %s\n' "$repo" "$cmd"
        # $cmd is left unquoted on purpose so "status -uno" splits into two arguments
        git -C "$repo" $cmd
    done

https://redd.it/1kjei14

@r_bash

pickleBerry, a TUI-based file manager written entirely as a shell script

Running in terminal - kitty

home directory

Moving through directories

help menu

https://redd.it/1kjx88r

@r_bash

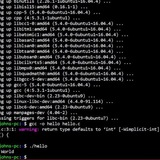

Installing Newer Versions of Bash with Mise

Hey all,

I'm working on some developer tooling for my company and want to make sure everyone is running the latest version of Bash when I'm scripting tools.

I'm using Mise to pin various other languages, but I don't see Bash support. Do you all have a good way of pinning the Bash version for other engineers, so that when scripts are run they use the correct version? Has anyone had success with Mise for this (preferably) or with another method?
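Whatever tool ends up installing Bash, a script can at least refuse to run under an older interpreter. A minimal sketch, assuming a minimum of Bash 5.2 in the usage line (an arbitrary example value); `require_bash` is a hypothetical helper name:

```bash
#!/usr/bin/env bash
# require_bash MAJOR [MINOR]: succeed only if the running Bash is at least MAJOR.MINOR.
require_bash() {
    local major=$1 minor=${2:-0}
    # BASH_VERSINFO is a built-in array: [0] is the major version, [1] the minor.
    if (( BASH_VERSINFO[0] > major || (BASH_VERSINFO[0] == major && BASH_VERSINFO[1] >= minor) )); then
        return 0
    fi
    printf 'This script needs Bash %s.%s or newer, found %s\n' \
        "$major" "$minor" "$BASH_VERSION" >&2
    return 1
}

# Typical use at the top of a script:
# require_bash 5 2 || exit 1
```

The `#!/usr/bin/env bash` shebang picks the first `bash` on `PATH`, so it follows whichever installation the tooling places first there.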

https://redd.it/1kkexhq

@r_bash

Visualize (convert) list of files as tree hierarchy

`tree -afhDFci <dir>` produces a list of files in the following format:

    894 Apr 20 2024 /media/wd8000-1/music/unsorted/
    2.0K Apr 20 2024 /media/wd8000-1/music/unsorted/A/
    19M Apr 20 2024 /media/wd8000-1/music/unsorted/A/AA.mp4
    ...

I run this command on an external drive and save the output to a text file before unplugging it, so I can grep it to see what files I have on the drive along with basic metadata. It's an 8 TB drive with 20k+ files.

I would like a way to visualize the tree structure (e.g. to understand how it's organized), such as converting the list back to the standard `tree` command output with directory hierarchy and indentation, or showing a list of directories that are X levels deep. Unfortunately I won't have access to the drives for a month, so I can't simply save another `tree` output to another file (I also prefer to parse from one saved file).

Any tips? Another workaround would be to parse the file and create the file tree as empty files on the filesystem, but that wouldn't be efficient.
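One possible approach is to derive the indentation from the number of path components in each saved line. The sketch below assumes the path is everything from the first `/` on the line and that directories end in `/` (as `tree -F` prints them); `list_to_outline` is a hypothetical name, and the output is a plain indented outline rather than real `tree` drawing characters:

```bash
# list_to_outline [MAX_DEPTH] < saved-list.txt
# Prints one name per line, indented two spaces per level below the first entry.
# MAX_DEPTH 0 (the default) means no limit.
list_to_outline() {
    awk -v max="${1:-0}" '
    {
        start = index($0, "/")            # the path begins at the first slash
        if (start == 0) next              # skip lines without a path (e.g. the summary)
        path = substr($0, start)
        is_dir = (path ~ /\/$/)           # trailing slash marks a directory
        sub(/\/$/, "", path)
        n = split(path, parts, "/")
        if (n < 2) next                   # nothing left after the leading slash
        if (base == 0) base = n           # depth of the first entry becomes level 0
        level = n - base
        if (level < 0 || (max > 0 && level > max)) next
        indent = ""
        for (i = 0; i < level; i++) indent = indent "  "
        print indent parts[n] (is_dir ? "/" : "")
    }'
}
```

For example, `list_to_outline 2 < saved-list.txt | grep '/$'` would list only the directories down to two levels below the root entry.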

https://redd.it/1kkerqz

@r_bash

What's the weirdest or most unexpected thing you've automated with Bash?

If we don't count all sysadmin tasks, backups, and cron jobs... what's the strangest or most out-of-the-box thing you've scripted?

I once rigged a Bash script + smart plug to auto-feed my cats while I was away.

https://redd.it/1kkpg7l

@r_bash

.config files in $XDG_CONFIG_HOME

This is not technically a bash question, but it's shell related and this place is full of smart people.

Let's say I'm writing a script that needs a `.config` file, but I want the location to be in `$XDG_CONFIG_HOME/scriptname`. Leading dots are great for reducing clutter, but that's not an issue if the file is in an uncluttered subdirectory.

What's the accepted best practice on naming a config file that sits inside a config directory: with a leading dot or not? I don't see any advantages to leading dots in this case, but decades of scripting tell me that config files start with a dot ;-)

Note: I'm interested in people's opinions, so please don't reply with a ChatGPT generated opinion
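For reference, the location itself can be resolved as in the sketch below, which takes no side on the naming question. The XDG Base Directory specification falls back to `$HOME/.config` when `XDG_CONFIG_HOME` is unset or empty; `config_file`, `myscript`, and the default file name `config` are hypothetical and only for illustration:

```bash
# config_file SCRIPT_NAME [FILE_NAME]
# Prints the path of FILE_NAME (default: "config") inside the script's XDG config directory.
config_file() {
    local name=$1 file=${2:-config}
    # ${XDG_CONFIG_HOME:-...} covers both the unset and the empty case.
    printf '%s/%s/%s\n' "${XDG_CONFIG_HOME:-$HOME/.config}" "$name" "$file"
}

# Hypothetical usage:
# cfg=$(config_file myscript)            # no leading dot
# cfg=$(config_file myscript .config)    # leading dot
```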

https://redd.it/1km1u0s

@r_bash

how to parallelize a for loop in batches?

On a Proxmox PVE host, I'd like to do scheduled live migrations. My "oneliner" already works, but I've got 2 NICs in adaptive-alb, so I can run 2 migrations at the same time, which I presume would nearly double the speed.

This oneliner "serializes" the migrations of all VMs it finds on a host (except the VM with VMID 120).

Question: how do I change the oneliner below so it runs 2 migrations in parallel and, when those finish, continues with the next two VMs? Ideally, as soon as one finishes it would immediately start another migration, but it's OK if it does 100 and 101, waits, then 102 and 103, waits, then 104 and 105, ... until all VMs are done.

    time for vmid in $(qm list | awk '$3=="running" && $1!="120" { print $1 }'); do qm migrate $vmid pve3 --online --migration_network 10.100.80.0/24 --bwlimit 400000; done
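One way to get the "start the next one as soon as a slot frees up" behaviour is a small job limiter built on `wait -n`, which needs Bash 4.3 or newer. This is a sketch under that assumption; `run_limited` and `migrate_one` are hypothetical names, and the `qm` options are simply copied from the oneliner above:

```bash
# run_limited MAX CMD [ARGS...]
# Reads one item per line from stdin and runs "CMD ARGS ITEM" for each,
# keeping at most MAX of them running at the same time.
run_limited() {
    local max=$1 running=0 item
    shift
    while IFS= read -r item; do
        if (( running >= max )); then
            wait -n                      # block until any one background job exits
            running=$(( running - 1 ))
        fi
        "$@" "$item" &
        running=$(( running + 1 ))
    done
    wait                                 # let the last jobs finish
}

# Hypothetical usage with the command from the post:
# migrate_one() { qm migrate "$1" pve3 --online --migration_network 10.100.80.0/24 --bwlimit 400000; }
# qm list | awk '$3=="running" && $1!="120" { print $1 }' | run_limited 2 migrate_one
```

A counter is used instead of parsing `jobs` output so the limit does not depend on how the job table is reported in a non-interactive shell.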

https://redd.it/1km88ka

@r_bash

I'm thinking of starting to work as a freelancer doing shell scripting. Realistically, is there a market for that out there?

Just adding to the title: I'm thinking about doing this from home on sites like Upwork, Fiverr, **Freelancer.com**, Toptal, and PeoplePerHour. Working in an organization isn't an option at the moment.

I have some portfolio to show, but I'd guess the scripts' purposes are kind of shallow to show in this context. They're scripts to help my day-to-day flow. The code itself is fine, most are pure-bash and performance-sensitive scripts, but their purpose... Anyway, if you guys say that's an issue, I can find projects to engage in. It's a good way to learn anyway, and you can never learn enough. Thankfully, I'm in no rush to start working. I've been solely on Linux for around 10 years, and improving or switching my portfolio is not a huge deal.

https://redd.it/1kmdrxc

@r_bash

Advance a pattern of numbers incrementally

Hi, I am trying to advance a pattern of numbers incrementally.

The pattern is: 4 1 2 3 8 5 6 7

Continuing the pattern, the sequence should be: 4,1,2,3,8,5,6,7,12,9,10,11,16,13,14,15 and so on.

What I am trying to achieve is to print a book on A4 paper, 2 pages on each side, so that's 4 pages per sheet when folded, and then bind it myself. I have a program that can rearrange pages in a PDF, but I have to feed it the correct sequence, and I am not able to do this via the printer settings for various reasons, hence setting up the PDF page order first. I know I can generate a simple sequence using something like:

    for i in `seq -s, 1 1 100`; do echo $i; done

But obviously I am missing the important arithmetic bits in between to repeat the pattern:

Start with: 4

take the 1st input and: -3

take that last input +1

take that last input +1

take that last input +5 etc etc

I am not sure how to do this.

Thanks!
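The pattern can also be read as groups of four pages, where each group n+1..n+4 is emitted as n+4, n+1, n+2, n+3. A minimal sketch of that reading, assuming the page count is a multiple of 4 (otherwise the last group names pages past the end); `booklet_order` is a hypothetical name:

```bash
# booklet_order TOTAL_PAGES
# Prints the page order as one comma-separated line.
booklet_order() {
    local total=$1 base
    local out=()
    # base is the number of pages already placed: 0, 4, 8, ...
    for (( base = 0; base < total; base += 4 )); do
        out+=( $((base + 4)) $((base + 1)) $((base + 2)) $((base + 3)) )
    done
    local IFS=,                 # "${out[*]}" joins with the first character of IFS
    printf '%s\n' "${out[*]}"
}
```

`booklet_order 16` prints `4,1,2,3,8,5,6,7,12,9,10,11,16,13,14,15`, matching the sequence in the post.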

https://redd.it/1kn6ozs

@r_bash

help with script to verify rom, output variable, and flash

I tried to write a bash script that verifies a rom file using sha256sum.

The output should go into a variable.

If the variable contains data, exit.

Else, flash the rom.

I can't seem to get the output variable to work correctly.

I'm still learning bash scripting, so does anyone have ideas on how to make it work or improve it?

Thank you

    #!/bin/bash

    output=`$(cat *.sha256) *.rom | sha256sum --check --status`
    #output=`ls`

    if ! test -z "$output"
    then
        echo $output
        exit
    fi

    echo "sha256sum correct"
    echo

    read -p "Are you sure you want to flash? [y/n]" -n 1 -r
    echo

    if [[ $REPLY =~ ^[Yy]$ ]]
    then
        echo
        #flashrom -w *.rom -p internal:boardmismatch=force
        echo
        echo "finished"
    fi
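Two things likely keep the variable empty in the script above: `--status` tells `sha256sum` to print nothing at all, and the backtick line tries to run the hash itself as a command. Testing the exit status is usually simpler than capturing output. A minimal sketch, assuming the first field of the `.sha256` file is the expected hash; `verify_rom` and the file names in the usage lines are hypothetical:

```bash
# verify_rom ROM_FILE SUM_FILE
# Returns 0 when ROM_FILE matches the hash stored in SUM_FILE.
verify_rom() {
    local rom=$1 sum_file=$2 expected
    # Take only the first field so both "HASH" and "HASH  filename" layouts work.
    expected=$(cut -d' ' -f1 "$sum_file") || return 2
    # Feed sha256sum a "HASH  FILE" line on stdin; --status leaves only the exit code.
    printf '%s  %s\n' "$expected" "$rom" | sha256sum --check --status
}

# Hypothetical usage:
# if ! verify_rom coreboot.rom coreboot.sha256; then
#     echo "sha256sum mismatch" >&2
#     exit 1
# fi
# echo "sha256sum correct"
```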

https://redd.it/1kphj67

@r_bash

Stop auto execute after exit ^x^e

Hi,

As the title says: how do I stop Bash from automatically executing the command after I'm done editing it with ^x^e?
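One approach is to rebind the keys to a custom function instead of readline's `edit-and-execute-command`, so the edited text lands back on the prompt rather than being run. This is a sketch, assuming `bind -x` with the `READLINE_LINE` and `READLINE_POINT` variables; the function name is hypothetical:

```bash
# Edit the current command line in $EDITOR, then put the result back
# on the prompt without running it.
_edit_without_executing() {
    local tmp
    tmp=$(mktemp) || return
    printf '%s\n' "$READLINE_LINE" > "$tmp"
    # Unquoted on purpose so EDITOR may carry arguments (e.g. "code -w").
    ${EDITOR:-vi} "$tmp"
    READLINE_LINE=$(<"$tmp")
    READLINE_POINT=${#READLINE_LINE}     # move the cursor to the end of the line
    rm -f -- "$tmp"
}

# Only bind in interactive shells, where line editing is available.
if [[ $- == *i* ]]; then
    bind -x '"\C-x\C-e": _edit_without_executing'
fi
```

Without any rebinding, leaving the editor with a non-zero exit status (for example `:cq` in Vim) should also keep Bash from running the command.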

https://redd.it/1kph7k1

@r_bash

Version managers ARE SLOW

It really is too much. You add one nvm here, another rbenv there, and top it off with some zoxide. I had to wait about one second just for my shell to start up! That really is suckless-less.

Well, I thought you could fix that problem by lazy-loading all those init scripts. But when writing all that directly in bash, it quickly gets bloated and hard to maintain. So I wrote a simple lazy-loader to do all that for you.

With lazysh (very creative name), you list your init scripts inside your bashrc (or your shell's rc-file) through the lazy loader. It figures out by itself which commands each init command modifies and caches the result, so your shell will start up blazingly fast next time!

You can view the project on GitHub. After installing, you simply add the following lines to your *rc-file and put in your init commands (replacing `bash` with `zsh` or `fish`, respectively):

    source $(echo '
    # Initializing zoxide
    eval "$(zoxide init bash)"

    # Initializing rbenv
    eval "$(rbenv init - bash)"

    # ... any other init command
    ' | lazysh bash)
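For comparison, the hand-written lazy-loading that the post describes as bloated usually means one stub function per command. The sketch below shows that manual pattern only; it is not how lazysh works internally, and `lazy_load` is a hypothetical helper that assumes the command name is a valid shell identifier (no dashes):

```bash
# lazy_load CMD INIT
# Defines CMD as a stub that runs the INIT snippet on first use,
# then re-runs the original call against the real command.
lazy_load() {
    local cmd=$1
    # Keep the init snippet in a global variable named after the command.
    printf -v "__lazy_init_$cmd" '%s' "$2"
    eval "$cmd() {
        unset -f $cmd                 # remove the stub so the real command is visible
        eval \"\$__lazy_init_$cmd\"   # run the deferred init once
        $cmd \"\$@\"                  # repeat the call with the original arguments
    }"
}

# Hypothetical usage in an rc-file:
# lazy_load rbenv 'eval "$(rbenv init - bash)"'
```

Every init command needs its own `lazy_load` line naming the commands it provides, which is the bookkeeping the post says its tool works out automatically.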

https://redd.it/1kpmq9g

@r_bash

GitHub - theaino/lazysh: Lazy-loading commands to speed up shell startup times

Check if gzipped file is valid (fast).

I have a tgz, and I want to be sure that the download was not cut off.

I could run `tar -tzf foo.tgz >/dev/null`, but this takes 30 seconds.

For the current use case, it would be enough to somehow check the final bytes. AFAIK gzipped files have some special bytes at the end.

How would you do that?
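In the gzip format (RFC 1952) each member ends with a 4-byte CRC-32 followed by a 4-byte ISIZE field, the uncompressed size modulo 2^32 in little-endian order. These bytes carry no magic value, so reading them cannot prove the file is intact. A tar stream is written in 512-byte blocks, though, so for a `.tgz` the ISIZE should be a multiple of 512, and a truncated download will usually fail that test. The sketch below is only that heuristic, assuming a single-member gzip file; both function names are hypothetical, and `gzip -t` remains the real check:

```bash
# gzip_isize FILE: print the ISIZE field (last 4 bytes, little-endian).
gzip_isize() {
    local b0 b1 b2 b3
    # od prints the four bytes as unsigned decimals separated by spaces.
    read -r b0 b1 b2 b3 < <(tail -c 4 -- "$1" | od -An -tu1)
    [[ -n $b3 ]] || return 1             # fewer than 4 bytes available
    echo $(( b0 | b1 << 8 | b2 << 16 | b3 << 24 ))
}

# looks_like_complete_tgz FILE: heuristic only, can give false positives.
looks_like_complete_tgz() {
    local size
    size=$(gzip_isize "$1") || return 1
    (( size > 0 && size % 512 == 0 ))
}
```

A truncated file still passes when its last four bytes happen to decode to a multiple of 512, so this is a quick plausibility check rather than validation.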

https://redd.it/1kqbdib

@r_bash

Bash Shell Scripting and Automated Backups with Cron: Your Comprehensive Guide

I just published a comprehensive guide on Medium that walks through bash shell scripting fundamentals and how to set up automated backups using cron jobs.

If you have any questions or suggestions for improvements, I'd love to hear your feedback!

PS: This is my first time writing an article

Link: https://medium.com/@sharmamanav34568/bash-shell-noscripting-and-automated-backups-with-cron-your-comprehensive-guide-3435a3409e16

https://redd.it/1kqihm6

@r_bash

Is there any naming convention for functions in bash scripting?

Hello friends, I'm a C programmer who every once in a while makes little bash scripts to automate processes.

Right now I'm making a script a bit more complex than usual, and I'm using functions for the first time in quite a while. I think it's the first time I've used them since I started learning C, so it bothers me a bit that the parentheses are used to define the function and not to call it, and that to call a function you just write its name.

I have the impression that when reading code I might have a hard time remembering that a line containing only "get_path" is a call to the get_path function, since I'm used to writing get_path() to call it. So my question is: is there any kind of naming convention for functions in bash scripting? Maybe something like ft_get_path?
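Bash itself does not enforce any convention. One widely cited guide, Google's Shell Style Guide, suggests lowercase names with underscores and a `package::function` form for library code. A small sketch of how that reads in practice; `paths::join` is a made-up example, and `get_path` is given a placeholder body here:

```bash
# Lowercase with underscores for ordinary functions.
get_path() {
    printf '%s\n' "${1:-$PWD}"
}

# A "package::function" prefix makes library calls stand out from plain commands.
paths::join() {
    printf '%s/%s\n' "${1%/}" "$2"
}

# Calls look like commands: the name, then space-separated arguments.
config_dir=$(paths::join "$(get_path /etc/)" myapp)
echo "$config_dir"
```

Names containing `::` work in Bash but are not portable to plain POSIX `sh`.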

https://redd.it/1kqnyw5

@r_bash

Minimal Bash script to convert media files using yt-dlp + ffmpeg

Hey folks! I just wrote my first Bash script and packaged it into a tool called `m2m`.

It's a simple command-line utility that uses yt-dlp and ffmpeg to download and convert videos.

GitHub repo: https://github.com/Saffron-sh/m2m

It's very minimal, but I’m open to feedback, improvements, and general bash advice. Cheers!

https://redd.it/1kpjxq4

@r_bash

Any tips for a new bash enthusiast?

Never will I ever use vim for text editing again 🥴.

Nano is my favorite for now, until I become a "pro" in bash scripting 😁.

I heard this is the place to be to get proper help with bash. Does anyone here have time to teach me a bit more about it, please?

https://redd.it/1kqqlbj

@r_bash
