List to php array

Hi guys,

We have a shared Nextcloud for the entire company, and the users sync with the desktop client. Sometimes a ransomware infection happens and the sync client sees: "Oh! There's a change to this file. I need to sync this!" The last unencrypted files (in the cloud) then get encrypted too. I could use the built-in feature 'blacklisted_files' with this list. The final array looks like this:

<?php
$CONFIG = array (
  'blacklisted_files' =>
  array (
    0 => '.htaccess',
    1 => 'Thumbs.db',
    2 => 'thumbs.db',
  ),
);

Nextcloud can have multiple *.config.php files, and they get merged, so I can provide a dedicated blacklist file. I want to fetch this extension list every day and put it into the array above. sed and awk are nice tools, but can they insert an enumeration too?
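awk can do the numbering itself with a counter, so no separate enumeration step is needed. A minimal sketch, assuming the downloaded list is a plain text file with one entry per line; the function name `make_blacklist_config` and the file names in the example are made up for illustration. Lines containing a single quote or a backslash are skipped so they cannot break out of the PHP string:

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn a one-entry-per-line list into a Nextcloud
# config fragment with explicitly numbered array entries.
make_blacklist_config() {
    awk -v q="'" '
        BEGIN {
            print "<?php"
            print "$CONFIG = array ("
            print "  " q "blacklisted_files" q " =>"
            print "  array ("
        }
        { sub(/\r$/, "") }                        # tolerate CRLF line endings
        index($0, q) || index($0, "\\") { next }  # skip entries unsafe in a PHP string
        NF { printf "    %d => %s%s%s,\n", n++, q, $0, q }
        END {
            print "  ),"
            print ");"
        }
    ' "$1"
}

# Example (file names are assumptions):
#   make_blacklist_config extensions.txt > blacklist.config.php
```

PHP does not need the explicit `0 =>`, `1 =>` keys, so the counter is only there to match the layout shown above.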

https://redd.it/1843o1q

@r_bash

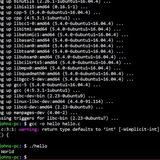

Function that automatically resubmits last command with "--help" instead of "-h"

https://i.vgy.me/P8v1aY.png

https://redd.it/1849by3

@r_bash

Bash Sugar (a bash thread)

There used to be a bash thread, now there's not.

Let's change that.

Here's a thing for expanding aliases in zsh

#-------------------------------
# ealias
#-------------------------------
typeset -a ealiases
ealiases=()

function ealias()
{
    alias $1
    ealiases+=(${1%%\=*})
}

function expand-ealias()
{
    if [[ $LBUFFER =~ "(^|[;|&])\s*(${(j:|:)ealiases})\$" ]]; then
        zle _expand_alias
        zle expand-word
    fi
    zle magic-space
}

zle -N expand-ealias

bindkey -M viins ' ' expand-ealias
bindkey -M viins '^ ' magic-space   # control-space to bypass completion
bindkey -M isearch " " magic-space  # normal space during searches

#-------------------------
ealias gc='git commit . -m '
ealias gp='git push'

https://redd.it/1858sjf

@r_bash

Using a shell script to check if one file's variable contents exist in another?

Hi everyone, I'm kind of new to shell scripting. In a CSV file I have two fields, the "ip addresses" and the "timestamp." To return only the destination IP field and all the associated IPs, I used

cut -d "," -f 1 file.csv

and I stored this in a variable, let's call it IP=$(cut -d "," -f 1 file.csv)

For the timestamp, I did something similar, and also stored it in a variable

cut -d "," -f 2 file.csv

timestamp=$(cut -d "," -f 2 file.csv)

Now, with these variables for the destination IP and the timestamp, how would I go about using a shell script to see if both of these values (the specific destination IPs and the timestamps) exist in the json.logs file (the file my shell script would check the CSV values against)? I would then return the lines of the json.logs file where there is a match, and output them to a new file.
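Storing each column in its own variable loses the pairing between an IP and its timestamp, so one option is to read the CSV row by row instead. A rough sketch, assuming the IP is field 1 and the timestamp is field 2 as in the commands above; the function name `find_matches` and the output file name are made up:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print every line of the log that contains both the IP
# (CSV field 1) and the timestamp (CSV field 2) of the same CSV row.
find_matches() {
    local csv=$1 log=$2 ip timestamp
    while IFS=, read -r ip timestamp _; do
        [[ -n $ip && -n $timestamp ]] || continue
        # -F treats the values as fixed strings, so the dots in an IP are literal
        grep -F -- "$ip" "$log" | grep -F -- "$timestamp" || true
    done < "$csv"
}

# Example: find_matches file.csv json.logs > matches.txt
```

A log line that matches several CSV rows is printed once per row; pipe the result through `sort -u` if that matters.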

https://redd.it/18584kc

@r_bash

Using tail to pipe stdin ?

I wanted to update a yad notification with data from a subshell, so I tried using an external temporary file; I had various issues trying to do this, then tried this:

yad --notification --listen < <(tail -f -n1 $TMP 2>/dev/null | cat)

($TMP is a tempfile where another part of the script puts updates for the notification; the pipe to cat seems to be needed to avoid "buffering issues"...)

It seems to work nicely. Is it somehow wrong to do this?
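One alternative that avoids the temp file and `tail -f` altogether is a named pipe. This is only a sketch under assumptions: the `tooltip:` command is taken to be one of the commands yad accepts in `--listen` mode, the helper name `send_update` is made up, and the yad call is left commented out with a plain `read` standing in for it so the example runs anywhere:

```bash
#!/usr/bin/env bash
# Sketch: feed a long-running listener through a FIFO instead of tail -f.
fifo_dir=$(mktemp -d)
fifo="$fifo_dir/notify.fifo"
mkfifo "$fifo"

# Open the FIFO read-write on fd 3 so that neither side blocks on open
# (this works on Linux; it is not guaranteed by POSIX).
exec 3<>"$fifo"

# The real consumer would be started here, for example:
#   yad --notification --listen <&3 &

send_update() {
    printf '%s\n' "$1" >&3
}

send_update "tooltip:Backup running"

# Stand-in for yad: read one update back from the pipe.
read -r -u 3 received
echo "received: $received"

exec 3>&-
rm -r "$fifo_dir"
```

Any subshell that inherits fd 3 can call `send_update`, and each line is delivered as soon as it is written.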

https://redd.it/18686zs

@r_bash

Does anyone know how to highlight specific characters when pasting output from a text file?

I'm making a wrapper for ncal that, just for fun, replaces the month, year, and weekday abbreviations with those from The Elder Scrolls (kind of a fun "to see if I could" project). I've used 'ncal -C' to do this, and I've sorted out most of the process: redirecting the output to a text file, then using sed to replace the month/year header and the day abbreviations. There's one thing I can't figure out, though: how to change the text style of the current day to black on white when catting out the .tmpdate file after sed has changed the first two lines, so that the current date is highlighted just as it is with plain 'ncal -C'. I've worked with ChatGPT to see if it can do it, but nothing it came up with has worked.

This is what I last tried to highlight the current date:

`awk -v today="$(date +'%e')" '{gsub(/\y'"$today"'\y/, "\033[1;31m&\033[0m")}1' .tmpdate`

Though that doesn't do much more than `tail -n +2 .tmpdate`

Any thoughts would be welcome
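Two details may explain why the attempt does nothing: `date +%e` pads single-digit days with a space, and `\y` is a gawk-only word boundary. A portable sketch that avoids both, assuming the first two lines of the file are the header and the weekday row and that the day is passed without padding; the function name `highlight_day` is made up:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a calendar file with one day in reverse video.
highlight_day() {
    local day=$1 file=$2
    awk -v d="$day" '
        NR > 2 {
            # Pad the line so a day at either edge still has a neighbour
            line = " " $0 " "
            if (match(line, "[^0-9]" d "[^0-9]")) {
                before = substr(line, 2, RSTART - 1)
                after  = substr(line, RSTART + 1 + length(d),
                                length(line) - RSTART - 1 - length(d))
                $0 = before "\033[7m" d "\033[0m" after
            }
        }
        { print }
    ' "$file"
}

# Example: highlight_day "$(( 10#$(date +%d) ))" .tmpdate
```

The `10#` prefix strips the leading zero from `date +%d`, and the non-digit guards keep day 1 from matching inside 10, 11, or 21.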

https://redd.it/186c3fa

@r_bash

How to create argument suggestion for a programme?

I was wondering how one could replicate the default behaviour of many bash utilities, which show all available arguments after typing - followed by Tab.
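In bash this is done with programmable completion: a function fills the `COMPREPLY` array and `complete -F` ties it to a command. A minimal sketch for a made-up command called `mytool` with an invented option list:

```bash
#!/usr/bin/env bash
# Hypothetical completion function for a made-up command called "mytool".
_mytool_complete() {
    local cur=${COMP_WORDS[COMP_CWORD]}
    local opts="--help --verbose --output --version"
    COMPREPLY=()
    if [[ $cur == -* ]]; then
        # compgen -W filters the word list down to entries starting with $cur
        mapfile -t COMPREPLY < <(compgen -W "$opts" -- "$cur")
    fi
}

# Register the function; after sourcing this file, "mytool -<Tab>" lists the options.
complete -F _mytool_complete mytool
```

Sourcing the file from `.bashrc` is enough for a personal tool.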

https://redd.it/186mlii

@r_bash

Why is my bash for loop printing from the end?

Here is my code -

```
declare -A mydict=(["key1"]="value1" ["key2"]="value2")

for arg in "${!mydict[@]}"; do
    json_string="{\"$arg\":\"${mydict[$arg]}\"}"
    echo $json_string
done
```

This is the output -

```
{"key2":"value2"}
{"key1":"value1"}
```

I was expecting it to do -

```
{"key1":"value1"}
{"key2":"value2"}
```
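Bash associative arrays are hash tables and do not remember insertion order, so `"${!mydict[@]}"` can come back in any order. One common workaround is to keep the keys in a separate indexed array; the names `key_order` and `print_in_order` below are made up for the sketch:

```bash
#!/usr/bin/env bash
declare -A mydict=(["key1"]="value1" ["key2"]="value2")

# Indexed arrays keep their order, so iterate over this instead of ${!mydict[@]}
key_order=("key1" "key2")

print_in_order() {
    local arg
    for arg in "${key_order[@]}"; do
        printf '{"%s":"%s"}\n' "$arg" "${mydict[$arg]}"
    done
}

print_in_order
```

If sorted order is good enough, piping the keys through `sort` avoids maintaining the second array.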

https://redd.it/186rdhf

@r_bash

Wildcard to only match certain files?

So I am trying to create a wildcard that will only match certain files, but I am not sure how to do so. In my dir the files look like this:

10.0.0.1.csv

10.0.0.2.csv

10.0.0.3.csv

10.0.0.4.csv

10.0.0.5.csv

10.0.0.6.csv

10.0.0.7.csv

10.0.0.8.csv

10.0.0.9.csv

10.0.0.10.csv

toplist.csv

usage.csv

trial.csv

I only want to match the files with IP addresses in their names, but the IP address in the filename may change. How can I accomplish this?
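For the listing shown, a plain glob such as `[0-9]*.[0-9]*.[0-9]*.[0-9]*.csv` would already leave out toplist.csv, usage.csv and trial.csv, but it is loose. A stricter sketch filters `*.csv` with a regex; the function name `list_ip_csvs` is made up, and the pattern only checks for four groups of one to three digits, not for valid octets:

```bash
#!/usr/bin/env bash
# Hypothetical helper: list the *.csv files in a directory whose base name
# looks like an IPv4 address (four groups of 1-3 digits).
list_ip_csvs() {
    local dir=${1:-.} file name
    local ip_csv='^([0-9]{1,3}\.){3}[0-9]{1,3}\.csv$'
    for file in "$dir"/*.csv; do
        name=${file##*/}
        [[ $name =~ $ip_csv ]] && printf '%s\n' "$file"
    done
    return 0
}

# Example: list_ip_csvs . | while IFS= read -r f; do wc -l "$f"; done
```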

https://redd.it/186tv00

@r_bash

A single function to extract all archive file types at once: tar{gz,tgz,xz,lz,bz2} + zip and 7z

####################################################################################
##
## How to demonstrate:
##
## 1) Add all of the following types of archives to an empty directory
##    - name.tar.gz
##    - name.tar.tgz
##    - name.tar.xz
##    - name.tar.bz2
##    - name.tar.lz
##    - name.zip
##    - name.7z
##
## 2) Add the untar function to your .bash_functions or .bashrc file and source it or restart your terminal
##
## 3) Simply type "untar" in the same directory as the above archive files
##    - Second method is to run this script in the same directory with the archive files using the command "bash untar.sh"
##
## Result: All archives will be extracted and ready to use
##
####################################################################################

You need 7z installed for the 7z and zip archive files and the tar command for the tar files.

Also, for the lz tar files you need lzip, which you can install using `sudo apt install lzip`

If you like, you can use my GitHub 7zip script to instantly install the latest 7zip version, 23.01.

bash <(curl -fsSL https://7z.optimizethis.net)

[GitHub Script: 7-zip installer](https://github.com/slyfox1186/noscript-repo/blob/main/Bash/Installer%20Scripts/SlyFox1186%20Scripts/build-7zip)

I hope you guys think it's worthy!

Cheers!

[GitHub Script: untar.sh](https://github.com/slyfox1186/noscript-repo/blob/main/Bash/Misc/untar.sh)
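The linked untar.sh is not reproduced in this post. As a rough idea of the approach only (this is not the author's script), a function along these lines loops over the current directory and picks a tool per extension. It assumes GNU tar, which detects the compression on its own when extracting, and it extracts each archive into a folder named after it, which is a choice made for this sketch:

```bash
#!/usr/bin/env bash
# Sketch only, not the linked untar.sh: extract every supported archive in
# the current directory into a folder named after the archive.
untar_all() {
    local archive target
    for archive in *; do
        [[ -f $archive ]] || continue
        case $archive in
            *.tar.gz|*.tar.xz|*.tar.bz2|*.tar.lz)
                target=${archive%.tar.*}
                mkdir -p "$target" && tar -xf "$archive" -C "$target"
                ;;
            *.tgz)
                target=${archive%.tgz}
                mkdir -p "$target" && tar -xf "$archive" -C "$target"
                ;;
            *.zip|*.7z)
                target=${archive%.*}
                7z x "$archive" -o"$target"   # 7z wants -o glued to the path
                ;;
        esac
    done
}
```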

https://redd.it/186zwdp

@r_bash

Bash projects

What are some bash projects that you would consider a "trophy" on a resume, or that have some potential in general? (I'm mostly interested in blue team/red team related projects.)

https://redd.it/186zu8j

@r_bash

How to return lines from a JSON log file which contain matched IPs and timestamps values from a CSV?

I want to check whether IP and timestamp values from a CSV exist in some way in the line entries of a JSON log file, and if so, write that specific JSON log entry to another file. I tried to make it universal so it's applicable to all IP addresses. Here's what the sample CSV file would look like:

"clientip","destip","desthostname","timestamp"

"127.0.0.1","0.0.0.0","randomhost","2023-09-09T04:18:22.542Z"

And a sample line entry from the JSON log file:

{"log": "09-Sept-2023 rate-limit: info: client @xyz 127.0.0.1", "stream": "stderr", "time": "2023-09-09T04:18:22.542Z"}

It's the lines from the JSON log file that we want to return in the output.txt file when there's a match. The JSON file doesn't have the same fields and organization as the CSV does (clientip, destip, desthostname, timestamp), but I was hoping that I could still at least return lines from the JSON log file that match on the clientip (like we see here with 127.0.0.1 in "info: client @xyz 127.0.0.1") and maybe the timestamp.

I tried using the join command, like join file.csv xyz-json.log > output.txt, and awk did not yield much either.

https://redd.it/1877qzz
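join needs both files sorted on a shared key field, which a free-form log line does not have, so a substring search is a better fit. A sketch with awk reading both files, assuming the CSV layout shown above (header row, clientip in field 1, timestamp in field 4, no commas inside fields); the function name `match_log_lines` is made up:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print log lines that contain both the clientip and the
# timestamp of the same CSV row.
match_log_lines() {
    local csv=$1 log=$2
    awk -F',' '
        NR == FNR {                  # first file: the CSV
            if (FNR > 1) {           # skip the header row
                gsub(/"/, "")        # drop the quotes around each field
                ip[FNR] = $1
                ts[FNR] = $4
            }
            next
        }
        {                            # second file: the log
            for (i in ip)
                if (index($0, ip[i]) && index($0, ts[i])) { print; break }
        }
    ' "$csv" "$log"
}

# Example: match_log_lines file.csv xyz-json.log > output.txt
```

`index` does a plain substring search, so 127.0.0.1 also matches inside 127.0.0.10; requiring the timestamp on the same line narrows that considerably.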

@r_bash

set -e, but show error message and line-number

I usually use set -e in my bash scripts.

Unfortunately, you only get a non-zero exit value if a command fails, but no warning message.

Is there a simple way to get an error message and the line number if a bash script stops because a command returned a non-zero status?
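An ERR trap is the usual way to get this: it runs just before set -e ends the script and can print `$LINENO` and `$BASH_COMMAND`. The sketch below writes a small demo script to a temp file and runs it in a child bash, purely so that the deliberate failure does not kill the shell you paste it into; the message format is arbitrary:

```bash
#!/usr/bin/env bash
demo_script=$(mktemp)

cat > "$demo_script" <<'EOF'
#!/usr/bin/env bash
set -Eeuo pipefail    # -E makes functions and subshells inherit the ERR trap
trap 'echo "error: [$BASH_COMMAND] exited with status $? on line $LINENO" >&2' ERR
echo "step 1"
false
echo "never reached"
EOF

status=0
output=$(bash "$demo_script" 2>&1) || status=$?
echo "$output"
echo "exit status: $status"
rm -f "$demo_script"
```

In a real script only the `set` and `trap` lines are needed, placed at the top.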

https://redd.it/187fr0c

@r_bash

incremental Backup

Hey

I'm working on an rsync script to create incremental backups. I've used many different sources around the net, including ChatGPT, to get to where I am. I understand approximately 75% of what's going on, and I've reached the point where I don't grasp why some things are the way they are. The script creates three directories: daily, weekly, monthly. Each of these has subdirectories, e.g. (daily) 2023-11-30-02:00, 2023-11-29-02:00, etc., (weekly) 46, 47, 48. The script is supposed to delete backups older than a defined retention period, $DAILYRETENTIONDAYS (I'll use "daily" as the example from here on). The directory name correctly corresponds to the date the directory was actually created, but the mtime is the date I first ran the script, which is Nov 9. All the directories have a Nov 9 mtime regardless of when they were actually created. I assume this is due to how links operate. Why are the directories' mtimes off?

#!/bin/bash

# debugging

export PS4='${LINENO}: ' #Prints out the line number being executed by debug

set -xv #Turn on debugging

######### Change Directory as Needed - Must Match Source Dir Name ###########

DESTOPT="MyFiles"

# Define source and destination servers and directories

SOURCESERVER="username@192.168.100.55"

SOURCEDIR="/srv/dev-disk-by-uuid-532c2087-63ea-4d82-9194-b2d49e371aa9/$DESTOPT"

DESTSERVER="localhost"

DESTROOT="/media/Storage01"

DESTDIR="$DESTROOT/$DESTOPT"

# Define backup retention periods in days

DAILYRETENTIONDAYS=7

WEEKLYRETENTIONDAYS=30

MONTHLYRETENTIONDAYS=365

# Define current date/time for use in backup directory name

CURRENTDATE=`date "+%Y-%m-%d-%H:%M"`

CURRENTWEEK=`date "+%V"`

CURRENTMONTH=`date "+%m"`

# Define logs

if [ -d $DESTROOT/log ]; then

echo "log directory exists"

else

mkdir -p $DESTROOT/log

fi

LOGDIR="$DESTROOT/log"

LOGFILE="$DESTOPT"-"$CURRENTDATE.log"

# Define rsync options

RSYNCOPTS="-rlthv --exclude=.Trash* --delete-after --itemize-changes --link-dest=$DESTDIR/current --log-file=$LOGDIR/$LOGFILE"

# Define notification threshold

DAILYTHRESHOLD=20

WEEKLYTHRESHOLD=100

MONTHLYTHRESHOLD=400

# Create backup directories on local destination server

mkdir -p "$DESTDIR/daily/$CURRENTDATE"

mkdir -p "$DESTDIR/weekly/$CURRENTWEEK"

mkdir -p "$DESTDIR/monthly/$CURRENTMONTH"

mkdir -p "$DESTDIR/current"

# Run rsync to perform daily backup

RSYNCOUTPUT=$(rsync $RSYNCOPTS $SOURCESERVER:$SOURCEDIR/ $DESTDIR/daily/$CURRENTDATE/)

# Count number of files changed

DAILYNUMCHANGED=$(echo "$RSYNCOUTPUT" | grep -c "^[\>\<cd]")

# Send email notification if number of files changed exceeds threshold

if [[ $DAILYNUMCHANGED -gt $DAILYTHRESHOLD ]]; then

sendmail -t < /home/user/Mail/backupthresholdexceeded.txt

fi

# Remove daily backups older than retention period

# 11-13-23 commented out as results are usually deleting all directories

#find $DESTDIR/daily -maxdepth 1 -type d -mtime +$DAILYRETENTIONDAYS -exec rm -rf {} \;

# Update symlink to most recent daily backup

rm -rf $DESTDIR/current && ln -s $DESTDIR/daily/$CURRENTDATE $DESTDIR/current

#

# Run rsync to perform weekly backup on Monday

if [ `date +%u` -eq 1 ]; then

rsyncoutput=$(rsync $RSYNCOPTS $SOURCESERVER:$SOURCEDIR/ $DESTDIR/weekly/$CURRENTWEEK/ 2>&1)

# Count number of files changed

WEEKLYNUMCHANGED=$(echo "$rsyncoutput" | grep -E '^sent|^total size' | awk '{print $NF}' | paste -sd+ - | bc)

# Remove weekly backups older than retention period

# find $DESTDIR/weekly -maxdepth 1 -type d -mtime +$WEEKLYRETENTIONDAYS -exec rm -rf {} \;

# Update symlink to most recent weekly backup

rm -f $DESTDIR/weekly/current && ln -s $DESTDIR/weekly/$CURRENTWEEK $DESTDIR/weekly/current

# Send email notification if number of files changed exceeds threshold

if [[ $WEEKLYNUMCHANGED -gt $NOTIFICATIONTHRESHOLD ]]; then

sendmail -t < /home/user/Mail/backupthresholdexceeded.txt

fi

fi

#

# Run rsync to perform monthly backup on 1st day of month

if [ `date +%d` -eq 1 ]; then

rsyncoutput=$(rsync $RSYNCOPTS $SOURCESERVER:$SOURCEDIR/ $DESTDIR/monthly/$CURRENTMONTH/ 2>&1)

# Count number of files changed

MONTHLYNUMCHANGED=$(echo "$rsyncoutput" | grep -E '^sent|^total size' | awk '{print $NF}' | paste -sd+ - | bc)

# Remove monthly backup older than retention period

# find $DESTDIR/monthly -maxdepth 1 -type d -mtime +$MONTHLYRETENTIONDAYS -exec rm -rf {} \;

# Update symlink to most recent monthly backup

rm -f $DESTDIR/monthly/current && ln -s $DESTDIR/monthly/$CURRENTMONTH $DESTDIR/monthly/current

# Send email notification if number of files changed exceeds threshold

if [ $MONTHLYNUMCHANGED -gt $NOTIFICATIONTHRESHOLD ]; then

sendmail -t < /home/user/Mail/backupthresholdexceeded.txt

fi

fi

# Turn off debugging

set +xv

ls -la

user@backup:/media/Storage01/myfiles/daily$ ls -la

total 72

drwxrwxr-x 18 user user 4096 Nov 30 01:00 .

drwxrwxr-x 5 user user 4096 Nov 30 01:00 ..

drwxrwxr-x 13 user user 4096 Nov 9 16:30 2023-11-15-09:25

drwxrwxr-x 13 user user 4096 Nov 9 16:30 2023-11-17-01:00

drwxrwxr-x 13 user user 4096 Nov 9 16:30 2023-11-18-01:00

drwxrwxr-x 13 user user 4096 Nov 9 16:30 2023-11-19-01:00

drwxrwxr-x 13 user user 4096 Nov 9 16:30 2023-11-20-01:00

drwxrwxr-x 13 user user 4096 Nov 9 16:30 2023-11-20-14:07

drwxrwxr-x 13 user user 4096 Nov 9 16:30 2023-11-21-01:00

drwxrwxr-x 13 user user 4096 Nov 9 16:30 2023-11-22-01:00

drwxrwxr-x 13 user user 4096 Nov 9 16:30 2023-11-23-01:00

drwxrwxr-x 13 user user 4096 Nov 9 16:30 2023-11-24-01:00

drwxrwxr-x 13 user user 4096 Nov 9 16:30 2023-11-25-01:00

drwxrwxr-x 13 user user 4096 Nov 9 16:30 2023-11-26-01:00

drwxrwxr-x 13 user user 4096 Nov 9 16:30 2023-11-27-01:00

drwxrwxr-x 13 user user 4096 Nov 9 16:30 2023-11-28-01:00

drwxrwxr-x 13 user user 4096 Nov 9 16:30 2023-11-29-01:00

Notice I have commented out the lines to delete directories past the defined retention time as it deletes all the directories because mtime is Nov 9th. How do I fix this?
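A likely cause is the `-t` in `-rlthv`: rsync preserves modification times for directories as well as files, so every snapshot directory ends up with the mtime of the source directory rather than the time of the backup. Adding `--omit-dir-times` or running `touch` on the snapshot after rsync are two ways around that. Since the names already carry the date, another option is to prune by name and ignore mtime entirely. A sketch of that, with a made-up function name `prune_by_name` and a cutoff passed in as YYYY-MM-DD:

```bash
#!/usr/bin/env bash
# Hypothetical helper: delete backup directories whose *name* is older than a
# cutoff date, ignoring mtime entirely.
prune_by_name() {
    local base=$1 cutoff=$2 dir name
    for dir in "$base"/*/; do
        [[ -d $dir ]] || continue
        name=${dir%/}
        name=${name##*/}
        # Only touch names that start with YYYY-MM-DD
        [[ $name =~ ^[0-9]{4}-[0-9]{2}-[0-9]{2} ]] || continue
        # ISO dates sort correctly as plain strings
        if [[ ${name:0:10} < $cutoff ]]; then
            rm -rf -- "$dir"
        fi
    done
}

# Example with GNU date (assumed available):
#   prune_by_name "$DESTDIR/daily" "$(date -d '7 days ago' +%F)"
```

Replacing `rm -rf` with `echo` for a first run shows what would be deleted.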

https://redd.it/187p2ly

@r_bash

String comparison unexpected results?

I have the following script:

if sqlite3 webapp/instance/app.db "SELECT EXISTS(SELECT 1 FROM users WHERE username='admin');" > /dev/null
then
    read -p "admin user exists, overwrite? " answer
    if [ "$answer" = "y" ];
    then
        echo "Removing old admin account..."
        sed -i '/ADMIN_PASSWORD/d' .env
        sqlite3 webapp/instance/app.db "DELETE FROM users WHERE username='admin';"
        ADMINPWMAYBE=ADMINPASSWORD
    else
        ADMINPWMAYBE=NULLPASSWORD
    fi
fi

I run this and it goes as follows:

admin user exists, overwrite? y

There is no output and it doesn't delete the old ADMIN_PASSWORD.

I have added echo "$answer" and it is indeed "y", so what am I doing wrong?
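One thing worth ruling out first: `SELECT EXISTS(...)` prints 1 or 0, but sqlite3 exits with status 0 in both cases, so the outer `if` is true even when there is no admin user. Testing the printed value is more reliable. A sketch with two made-up helpers, `admin_exists` and `confirmed`:

```bash
#!/usr/bin/env bash
# Hypothetical helpers; the table and column names come from the post.
admin_exists() {
    local db=$1 result
    # SELECT EXISTS(...) prints 1 or 0; sqlite3 itself exits 0 either way
    result=$(sqlite3 "$db" "SELECT EXISTS(SELECT 1 FROM users WHERE username='admin');")
    [[ $result == 1 ]]
}

confirmed() {
    # True only for a literal y or Y
    [[ $1 == [yY] ]]
}

# Usage sketch:
#   if admin_exists webapp/instance/app.db; then
#       read -r -p "admin user exists, overwrite? " answer
#       if confirmed "$answer"; then
#           echo "Removing old admin account..."
#       fi
#   fi
```

Running the script with `bash -x` would also show which branch is actually taken.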

https://redd.it/187meau

@r_bash

grep to output only needed capturing group

https://shnoscripts.com/grep-to-output-only-needed-capturing-group/

https://redd.it/187tjxd

@r_bash

Say you have some text and some pattern that you want to provide for grep. Everything is easy, until you want to extract only the pattern-matching part, not the whole line that has the match. What to do?

You can "grep" and use pipe for further processing…
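The linked article is not quoted here, so the following is only a sketch of two common ways to keep just the captured group, using an invented sample line. With GNU grep built with PCRE support, `grep -oP 'id=\K[0-9]+'` is a third option.

```bash
#!/usr/bin/env bash
line='user=alice id=42 shell=/bin/bash'

# sed: match the whole line, then replace it with the captured group only
id_sed=$(printf '%s\n' "$line" | sed -nE 's/.*id=([0-9]+).*/\1/p')

# bash: after a successful =~ the group is available in BASH_REMATCH[1]
id_bash=
if [[ $line =~ id=([0-9]+) ]]; then
    id_bash=${BASH_REMATCH[1]}
fi

echo "$id_sed $id_bash"
# prints "42 42"
```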

Calculating with Logs in Bash...

I think bc can do it, or maybe expr, but I can't find enough documentation or even examples.

I want to calculate this formula and display a result in a script I am building...

N = Log2 (S^L)

It's for calculating the password strength of a given password.

I have S and I have L; I need to calculate N. Short of generating log tables and storing them in an array, I am stuck in finding an elegant solution.

Here are the notes I have received on how it works...

----

**Password Entropy**

Password entropy is a measure of the randomness or unpredictability of a password. It is often expressed in bits and gives an indication of the strength of a password against brute-force attacks. The formula to calculate password entropy is:

\[ \text{Entropy} = \log_2(\text{Number of Possible Combinations}) \]

Where:

- \(\text{Entropy}\) is the password entropy in bits.
- \(\log_2\) is the base-2 logarithm.
- \(\text{Number of Possible Combinations}\) is the total number of possible combinations of the characters used in the password.

The formula takes into account the length of the password and the size of the character set.

Here's a step-by-step guide to calculating password entropy:

1. Determine the Character Set: Identify the character set used in the password. This includes uppercase letters, lowercase letters, numbers, and special characters.
2. Calculate the Size of the Character Set (\(S\)): Add up the number of characters in the character set.
3. Determine the Password Length (\(L\)): Identify the length of the password.
4. Calculate the Number of Possible Combinations (\(N\)): Raise the size of the character set (\(S\)) to the power of the password length (\(L\)). \[ N = S^L \]
5. Calculate the Entropy (\(\text{Entropy}\)): Take the base-2 logarithm of the number of possible combinations (\(N\)). \[ \text{Entropy} = \log_2(N) \]

This entropy value gives an indication of the strength of the password. Generally, higher entropy values indicate stronger passwords that are more resistant to brute-force attacks. Keep in mind that the actual strength of a password also depends on other factors, such as the effectiveness of the password generation method and the randomness of the chosen characters.
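A possible way to do this from a shell script, sketched under a few assumptions: since log2(S^L) equals L * log2(S), there is no need to compute the huge number S^L first. The function names `entropy_bits` and `entropy_bits_bc` are invented for this example; the first uses `awk`, the second shows the equivalent `bc -l` call, which needs `bc` installed.

```bash
#!/bin/bash
# entropy_bits S L: print L * log2(S) rounded to two decimals.
entropy_bits() {
    # awk's log() is the natural logarithm, so divide by log(2) to change base.
    # LC_ALL=C keeps the decimal separator a dot regardless of locale.
    LC_ALL=C awk -v s="$1" -v l="$2" 'BEGIN { printf "%.2f\n", l * log(s) / log(2) }'
}

# The same calculation with bc; -l loads the math library, where l() is ln.
# Defined here for comparison but not called below.
entropy_bits_bc() {
    echo "$2 * l($1) / l(2)" | bc -l
}

entropy_bits 26 8    # 8 lowercase letters, prints: 37.60
```

For a password drawn from all 94 printable ASCII characters, the call would be `entropy_bits 94 "${#password}"`, with `password` being whatever variable holds the string.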

https://redd.it/18804ax

@r_bash

Trying to understand what the period does in a regular expression/wild card?

I'm a little confused about the difference here:

This is true (returns 0 exit code): [[ "aramel" =~ c* ]]; echo $?

This is false (returns 1): [[ "aramel" =~ c.* ]]; echo $?

(Notice the period after the 'c' in the latter.)

Now, both will return success if, instead of 'aramel', we write the word 'caramel'.

So it seems like one of them requires the c and the other does not?

I've been asking and searching, but I am still unsure what specifically the dot affects.
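A short sketch of what is going on, assuming the patterns are used exactly as written with `=~`: that operator takes an extended regular expression, not a shell wildcard. In a regex, `*` means "zero or more of the preceding item" and `.` means "any single character", and the match may occur anywhere in the string. So `c*` is satisfied by zero `c` characters (it matches the empty string), while `c.*` needs one literal `c` followed by anything. The `check` helper is made up for the demonstration.

```bash
#!/bin/bash
# Hypothetical helper: report whether string $1 matches regex $2.
check() {
    # The pattern is left unquoted on the right of =~ so it is used as a regex.
    if [[ $1 =~ $2 ]]; then echo "match"; else echo "no match"; fi
}

check aramel  'c*'    # match: zero c's is enough
check aramel  'c.*'   # no match: at least one c is required
check caramel 'c.*'   # match

# As a glob with ==, c* means "starts with c" and is anchored to the whole string.
[[ aramel == c* ]] || echo "glob: no match"
```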

https://redd.it/185ti39

@r_bash

Basename breaks everything somehow

#!/bin/bash

baseFolder=~/gameconverter/psx

outputFolder=~/gameconverter/output

rm -rf ${outputFolder}

mkdir ${outputFolder}

nonSplitted=$(find ${baseFolder} -maxdepth 1 -not -regex ".*(Disc [12]).*")

disc1=$(find ${baseFolder} -maxdepth 1 -regex ".*(Disc [1]).*")

disc2=$(find ${baseFolder} -maxdepth 1 -regex ".*(Disc [2]).*")

for game in "${nonSplitted[@]}"; do

gameName=$(basename "${game}")

input="${baseFolder}/${gameName}/${gameName}.cue"

output="${outputFolder}/${gameName}.chd"

chdman createcd -i "${input}" -o "${output}"

done

There are at least 20 PSX games in baseFolder, but if I use basename or ##*/, the loop only runs once, whereas echo ${game} shows all the subfolders. Why?
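A likely explanation, sketched here with made-up folder names: `nonSplitted=$(find ...)` stores the whole `find` output in one string rather than an array, so `"${nonSplitted[@]}"` expands to a single element. The loop therefore runs once, and `echo ${game}` prints every path only because that one string contains them all. Reading the output into a real array with `mapfile` gives one iteration per folder. The temporary directory, the `-mindepth 1` (to skip the base folder itself), and the `sort` are additions for this example, and it assumes folder names without newlines.

```bash
#!/bin/bash
# Sketch only: build a throwaway folder tree with invented game names.
baseFolder=$(mktemp -d)
mkdir "$baseFolder/Game A" "$baseFolder/Game C" \
      "$baseFolder/Game B (Disc 1)" "$baseFolder/Game B (Disc 2)"

# mapfile -t stores each line of find's output as its own array element.
mapfile -t nonSplitted < <(
    find "$baseFolder" -mindepth 1 -maxdepth 1 -not -regex '.*(Disc [12]).*' | sort
)

for game in "${nonSplitted[@]}"; do
    gameName=${game##*/}            # same result as basename "$game"
    echo "would convert: $gameName" # the chdman call would go here
done

rm -rf "$baseFolder"
```

With the sample folders this loops twice, once for `Game A` and once for `Game C`.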

https://redd.it/188m8ch

@r_bash
