A script to rename folders

Hi! I have posted this : https://www.reddit.com/r/bash/comments/1bu34ld/a\_noscript\_to\_automatically\_rename\_music\_folders/?utm\_source=share&utm\_medium=web3x&utm\_name=web3xcss&utm\_term=1&utm\_content=share\_button

I want to rename all the album folders in my music library, like: Music/Artist/Album (YYYY)/ --> Music/Artist/YYYY_Album/

'YYYY' is the album's release year.

I now have the following script:

    #!/bin/bash
    for dir in */*/; do
        [[ $dir =~ (.*)\ \(([[:digit:]]{4})\)/$ ]] &&
            echo "${BASH_REMATCH[0]}" "${BASH_REMATCH[2]}_${BASH_REMATCH[1]% }/"
    done

But it renames from : Music/Artist/Album (YYYY)/ to : Music/YYYY_Artist/Album/

What can I change to get the folders named like : Music/Artist/YYYY_Album/, i.e. YYYY_ to be set before the album's name and not before the artist's?
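One way to approach this, offered as a sketch rather than a tested answer from the thread: capture the parent path and the album name separately, so the year is prepended to the last path component only. The helper name `rename_target` is made up for illustration, and the function only prints the new name; it renames nothing.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the new path for "Artist/Album (YYYY)/".
rename_target() {
    local dir=${1%/}    # drop the trailing slash left by the glob
    # group 1: optional parent path, group 2: album name, group 3: four-digit year
    local re='^(.*/)?([^/]*) \(([[:digit:]]{4})\)$'
    [[ $dir =~ $re ]] || return 1
    printf '%s%s_%s/\n' "${BASH_REMATCH[1]}" "${BASH_REMATCH[3]}" "${BASH_REMATCH[2]}"
}
```

Run from inside Music/, a dry run could look like `for dir in */*/; do new=$(rename_target "$dir") && echo mv -- "$dir" "$new"; done`; removing the `echo` would perform the renames.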

https://redd.it/1by4w09

@r_bash


Can you use GNU grep to check if a file is binary, in a fast and robust way?

In another thread, someone mentioned that neofetch is written in bash. I did not know that, so I made a small script to check what interpreters are being used by the executable files in my `$PATH`.

The main problem is testing if the file is text or binary. I found this 10-year-old discussion on Stack Overflow: https://stackoverflow.com/questions/16760378/how-to-check-if-a-file-is-binary

Anyway, here is my script:

    #!/bin/bash
    time for f in ${PATH//:/\/* }
    do
        [[ -f $f ]] &&
            # checking if file is binary or script, some improvement would be nice
            head -c 1024 "$f" | grep -qIF "" &&
            value=$(awk 'NR==1 && /bash/ {printf "\033[1;32m%s\033[0m is bash",FILENAME}
                NR==1 && /\/sh/ {printf "\033[1;35m%s\033[0m is shell",FILENAME}
                NR==1 && /python/ {printf "\033[1;33m%s\033[0m is python",FILENAME}
                NR==1 && /perl/ {printf "\033[1;34m%s\033[0m is perl",FILENAME}
                NR==1 && /ruby/ {printf "\033[31m%s\033[0m is ruby",FILENAME}
                NR==1 && /awk/ {printf "\033[36m%s\033[0m is awk",FILENAME}' "$f")
        [[ $value = *[[:print:]]* ]] && arr+=("$value"); unset value
        # I first assign the result to $value because if I sent it directly to the array, a '\n' would be added to `arr[]` whenever awk evaluates to nothing.
        # For example, if the file is written in a language not mentioned in the awk program, like lua, awk returns nothing and then arr+=('\n').
    done
    files=$(fzf --multi --ansi <<<"${arr[@]/%/$'\n'}" | cut -d " " -f 2) # f2 because the first field is the ansi escape code for fzf, I guess...
    #shellcheck disable=SC2086
    [[ $files ]] && "${VISUAL:-${EDITOR:-cat}}" ${files/$'\n'/\ }

Any way to make it faster and more robust?

The idea is to type `is\ bash`, `is\ perl`, `is\ shell` or `is\ python` in fzf to see how many scripts you have for each language in your PATH. If you want, you can multi-select the scripts whose source code you want to read in your EDITOR of choice; if no editor is set, they are printed on the terminal via `cat`.
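On the speed question, one possible direction (a sketch, not benchmarked here) is to let bash read the start of each file itself instead of spawning head, grep and awk per file. The function name `interpreter_of` and the 256-byte limit are arbitrary choices for illustration, and the sketch assumes a file counts as a script only when its first line starts with `#!`.

```bash
#!/usr/bin/env bash
# Print the interpreter named in a file's shebang line, or fail if there is none.
interpreter_of() {
    local first words
    [[ -f $1 && -r $1 ]] || return 1
    # Read at most 256 bytes of the first line with the read builtin (no subprocess).
    LC_ALL=C IFS= read -r -n 256 first < "$1" || true
    [[ $first == '#!'* ]] || return 1
    # Split the rest of the line into words: interpreter path plus optional arguments.
    read -r -a words <<< "${first:2}"
    (( ${#words[@]} > 0 )) || return 1
    # For "#!/usr/bin/env python3" report python3 rather than env.
    if [[ ${words[0]##*/} == env && -n ${words[1]:-} ]]; then
        printf '%s\n' "${words[1]##*/}"
    else
        printf '%s\n' "${words[0]##*/}"
    fi
}
```

Inside the loop this could replace the head/grep/awk chain, for example `lang=$(interpreter_of "$f") && arr+=("$f is $lang")`; the colouring would then be applied per language afterwards.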

https://redd.it/1byfsce

@r_bash


Why must IFS be set to "\n\b" and not just "\n" to work with filenames containing spaces?

Hi all,

I don't get why simply setting IFS to "\n" doesn't work.

Let's say I have 3 files in the current directory named "big banana", "huge apple" and "small orange":

    $ IFS=$( echo -ne "\n" )
    $ for i in $(ls *);do echo "file: $i";done

It doesn't work; the output is:

    file: big banana
    huge apple
    small orange

instead of

    file: big banana
    file: huge apple
    file: small orange

As a matter of fact, it works if IFS is set the following way:

    $ IFS=$( echo -ne "\n\b" )

Does someone know why \b is also needed in the IFS definition?

Thanks for your help
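A likely explanation, shown as a small sketch: command substitution removes trailing newlines, so `$( echo -ne "\n" )` yields an empty string and IFS ends up empty. With "\n\b" the newline is no longer the last character, so it survives. The variable names and the `list_files` helper below are illustrative only.

```bash
#!/usr/bin/env bash
stripped=$(echo -ne "\n")     # the lone newline is trailing, so it is removed
kept=$(echo -ne "\n\b")       # the newline survives because \b follows it
newline_only=$'\n'            # ANSI-C quoting: exactly one newline, no \b needed
printf '%d %d %d\n' "${#stripped}" "${#kept}" "${#newline_only}"   # prints: 0 2 1

# Listing files with a glob avoids parsing ls and needs no IFS change at all.
list_files() {
    local f
    for f in "$1"/*; do
        [[ -e $f ]] && printf 'file: %s\n' "${f##*/}"
    done
    return 0
}
```

So `IFS=$'\n'` gives the newline-only separator directly, and `for i in *; do ...; done` sidesteps the problem entirely.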

https://redd.it/1byha3q

@r_bash


Why won't it log ps -p?

    read -r -p "Enter process name: " cpid
    apid=$(pgrep "$cpid")
    ps -p "$apid"
    read -r -p "Log process yes/no " log
    if [ "$log" == "yes" ]
    then
        ps -p "$apid" >> pslog.txt # this is where it fails
    fi

This is what I get when I run the script:

https://preview.redd.it/vpxc65zn9btc1.png?width=444&format=png&auto=webp&s=d7602ecad132d3ee980f6462a0c72b25f86a4d62

https://redd.it/1bz7mvy

@r_bash


How can I improve this recursive script?

**tl;dr: Need to handle git submodule recursion, so I wrote this. How can it be better?**

    if [ -f ".gitmodules" ]; then # continue...
        submodules=($(grep -oP '"\K[^"\047]+(?=["\047])' .gitmodules))
        if [ "$1" == "--TAIL" ]; then
            "${@:2}" # execute!
        fi
        for sm in "${submodules[@]}"; do
            pushd "$sm" > /dev/null
            if [ "$1" == "--TAIL" ] && [ ! -f ".gitmodules" ]; then
                "${@:2}" # execute!
            fi
            if [ -f ".gitmodules" ]; then # recurse!
                cp ../"${BASH_SOURCE[0]}" .
                source "${BASH_SOURCE[0]}"
                rm "${BASH_SOURCE[0]}"
            fi
            if [ "$1" == "--HEAD" ] && [ ! -f ".gitmodules" ]; then
                "${@:2}" # execute!
            fi
            popd > /dev/null
        done
        if [ "$1" == "--HEAD" ]; then
            "${@:2}" # execute!
        fi
    fi

The main reason for this is the coupled use of git submodules and Maven: we have one overall project (the ROOT) with NESTED submodules, and each submodule is a different Maven module in the pom.xml file. This means we need to commit from the deepest edges before their parent modules, while checking out should be done in the inverse order.

*Yes, I know the 'submodule foreach' mechanism exists*, but it seems to use tail-recursion, which does not work for what I'm trying to do, though admittedly it is sufficient in a lot of cases.

**If anyone can offer up a better way than the script copying/removing itself, I'd be ecstatic!**
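One alternative to the copy/source/remove cycle, sketched under assumptions: put the logic in a shell function that calls itself, so nothing has to be copied into the submodules. The function name `walk_submodules` is hypothetical; the `--TAIL`/`--HEAD` flags keep the meaning they have in the script above (run the command before or after descending), and submodule paths are read with `git config --file .gitmodules` rather than grep.

```bash
#!/usr/bin/env bash
# Hypothetical helper: walk_submodules --TAIL|--HEAD command [args...]
walk_submodules() {
    local mode=$1 sm
    shift
    # --TAIL: run the command in this directory before visiting its submodules
    if [[ $mode == --TAIL ]]; then "$@" || return; fi
    if [[ -f .gitmodules ]]; then
        # Read paths on fd 3 so the command being run cannot consume the list via stdin.
        while IFS= read -r sm <&3; do
            [[ -d $sm ]] || continue
            # The subshell keeps the cd local, so no pushd/popd bookkeeping is needed.
            ( cd "$sm" && walk_submodules "$mode" "$@" ) || return
        done 3< <(git config --file .gitmodules --get-regexp '\.path$' | cut -d' ' -f2-)
    fi
    # --HEAD: run the command after all nested submodules have been handled
    if [[ $mode == --HEAD ]]; then "$@" || return; fi
    return 0
}
```

For example, `walk_submodules --HEAD git status --short` would report the deepest submodules first and the root last. The function stops at the first failing command, which may or may not be the behaviour wanted here.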


https://redd.it/1bz6lb9

@r_bash


jq with variable containing a space, dash or dot

I have a json file that contains:

    {
      "disk_compatbility_info": {
        "WD_BLACK SN770 500GB": {
          "731030WD": {
            "compatibility_interval": [{
                "compatibility": "support"
              }
            ]
          }
        },
        "WD40PURX-64GVNY0": {
          "80.00A80": {
            "compatibility_interval": [{
                "compatibility": "support"
              }
            ]
          }
        }
      }
    }

If I quote the elements and keys that have spaces, dashes or dots, it works:

    jq -r '.disk_compatbility_info."WD_BLACK SN770 500GB"' /<path>/<json-file>
    jq -r '.disk_compatbility_info."WD40PURX-64GVNY0"."80.00A80"' /<path>/<json-file>

But I can't get it to work with the elements and/or keys as variables. I either get "null" or an error. Here's what I've tried so far:

    hdmodel="WD_BLACK SN770 500GB"
    #jq -r '.disk_compatbility_info."$hdmodel"' /<path>/<json-file>
    #jq --arg hdmodel "$hdmodel" -r '.disk_compatbility_info."$hdmodel"' /<path>/<json-file>
    #jq -r --arg hdmodel "$hdmodel" '.disk_compatbility_info."$hdmodel"' /<path>/<json-file>
    #jq -r --arg hdmodel "$hdmodel" '.disk_compatbility_info."${hdmodel}"' /<path>/<json-file>
    #jq -r --arg hdmodel "${hdmodel}" '.disk_compatbility_info."$hdmodel"' /<path>/<json-file>
    #jq -r --arg hdmodel "${hdmodel}" '.disk_compatbility_info.$hdmodel' /<path>/<json-file>
    jq -r --arg hdmodel "$hdmodel" '.disk_compatbility_info.${hdmodel}' /<path>/<json-file>

I clearly have no idea when it comes to jq :) And my Google-fu is failing to find an answer.

What am I missing?
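A sketch of what usually resolves this: a variable passed with `--arg` is a jq variable, not a shell variable, so it is used unquoted inside square brackets (`[$hdmodel]`) instead of after a dot. The function name `compat_status` and the extra `fwrev` argument are illustrative assumptions; the key names come from the JSON above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: look up the compatibility string for a model and firmware key.
compat_status() {
    local file=$1 hdmodel=$2 fwrev=$3
    # [$name] indexes an object with the string held in the jq variable $name.
    jq -r --arg hdmodel "$hdmodel" --arg fwrev "$fwrev" \
        '.disk_compatbility_info[$hdmodel][$fwrev].compatibility_interval[0].compatibility' \
        "$file"
}
```

The single-key form from the question would then be `jq -r --arg hdmodel "$hdmodel" '.disk_compatbility_info[$hdmodel]' /<path>/<json-file>`.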

https://redd.it/1bzhl55

@r_bash


Help with curl script

Hey guys, I have a script to list a site's directories. The script is from a course, and when the instructor runs it in the video class it works fine, but when I try to run it on my PC it doesn't work. The test.txt file has a directory I know exists.

    #!/bin/bash
    for dir in $(cat test.txt); do
        httpCode=$(curl -s -H "User-Agent: Teste" -o /dev/null -w "%{http_code}\n" $1/$dir/)
        if [[ $httpCode == "200" ]]; then
            echo "Directory found: $1/$dir"
        fi
    done

The variable httpCode never gets 200, but when I run the curl line in a terminal, it works fine. Can someone give me a hand here?
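One frequent cause of "works in the terminal, fails in the loop" is a wordlist saved with Windows line endings: each entry then ends in a carriage return, so the requested URL is not the one typed by hand. This is only a guess from the symptoms. The sketch below strips a trailing carriage return and quotes the URL; the function names `clean_entry` and `check_dirs` are made up for illustration.

```bash
#!/usr/bin/env bash
# Remove a trailing carriage return left over from CRLF line endings.
clean_entry() {
    printf '%s' "${1%$'\r'}"
}

# check_dirs BASE_URL WORDLIST: report entries that answer with HTTP 200.
check_dirs() {
    local base=${1%/} list=$2 dir code
    while IFS= read -r dir || [[ -n $dir ]]; do
        dir=$(clean_entry "$dir")
        [[ -n $dir ]] || continue
        code=$(curl -s -H "User-Agent: Teste" -o /dev/null -w '%{http_code}' "$base/$dir/")
        [[ $code == 200 ]] && echo "Directory found: $base/$dir"
    done < "$list"
    return 0
}
```

Printing the raw value with `printf '%q\n' "$dir"` inside the original loop would show whether a stray `\r` is really present.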

https://redd.it/1bzhilw

@r_bash


learning bash but why?

Hey guys and gals

I'm new to the dev world and really new to bash, but I'm just wondering: what's the end game? Like, what is possible with bash? Can I change networks with a single .sh file, can I build and compile automation... I'm having a hard time finding anything about the more advanced processes involved.

Just a noob looking to see why I should take the bash game to the end or just get the fundamentals and move on. By all means light me up as a noob, but please bring some legit convo about it too.

https://redd.it/1c04j4f

@r_bash


How to extract a single string (containing a *) from a longer string

On KDE/Wayland. In a terminal I run:

kscreen-doctor --outputs

I get a long output:

    Modes: 0:2560x1440@60! 1:2560x1440@170* 2:2560x1440@165......

I want to process the output so I just get the entry marked with * (the active setting, 1:2560x1440@170* above). I think I should use grep, but I'm not sure how to get it to select just the part of the output with the *. I searched for how to make grep recognize a literal "*", but it's extracting just this bit of the output that I can't think how to approach. Any help appreciated.
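A minimal sketch of one approach: `grep -o` prints only the matching part of a line, and `\*` matches a literal asterisk, so a run of non-space characters followed by `*` picks out the active mode. The function name `active_mode` is made up, and the sketch assumes the output is plain text; if kscreen-doctor colours its output, the escape codes would need stripping first.

```bash
#!/usr/bin/env bash
# Print every whitespace-delimited token that ends in a literal "*".
active_mode() {
    grep -o '[^[:space:]]*\*'
}
```

Usage would be `kscreen-doctor --outputs | active_mode`.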

https://redd.it/1c041ed

@r_bash


Will this work?

I want to parallelize a script, which is under a function() in .bashrc, and have the output logged to a text file. Will this work?

    parallel script Log.txt scriptname && exit

https://redd.it/1c0bews

@r_bash


trap '... ' ERR not working

I use this:

    trap 'echo "ERROR: A command has failed. Exiting the script. Line was ($0:$LINENO): $(sed -n "${LINENO}p" "$0")"; exit 3' ERR
    set -euo pipefail

This works for most cases.

But in one script, the script terminates on a non-zero exit and I don't see the above message.

What could be the reason for that?
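One documented reason: unless `set -E` (errtrace) is in effect, an ERR trap is not inherited by shell functions, command substitutions or subshells, so a failure inside one of those can end the script through `set -e` without the trap ever running. Whether that is what happens in the script in question is an assumption, since that script is not shown. The sketch below runs a tiny script with `-E` enabled; the names `with_errtrace` and `fail_in_function` are illustrative.

```bash
#!/usr/bin/env bash
# Run a small script in a child bash so its exit does not end the calling shell.
with_errtrace() {
    bash -c '
        set -Eeuo pipefail            # -E lets functions inherit the ERR trap
        trap "echo ERR trap fired; exit 3" ERR
        fail_in_function() { false; }
        fail_in_function
        echo "not reached"
    '
}
```

Adding `-E` to the `set` line of the affected script is a cheap experiment. Note also that an unbound-variable error from `set -u` ends the script without going through the ERR trap.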

https://redd.it/1c0embh

@r_bash


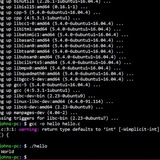

What should I do to get over this? I can't find a solution online for this.

https://redd.it/1c0hwdf

@r_bash


What is the purpose of read in the following script, and why do we put genes.txt at the end of the loop?
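The script itself did not make it into this post, so the following is only a guess at the usual shape of such a loop. The function name `print_genes` and the file layout (one gene name per line) are assumptions. In this pattern `read` takes one line from standard input per iteration and stores it in a variable, and the `< file` after `done` makes the file the standard input of the whole loop.

```bash
#!/usr/bin/env bash
# Hypothetical example: print every line of a file such as genes.txt.
print_genes() {
    local gene
    # read returns non-zero at end of file, which ends the loop;
    # the extra test keeps a last line that lacks a trailing newline.
    while IFS= read -r gene || [[ -n $gene ]]; do
        printf 'gene: %s\n' "$gene"
    done < "$1"    # the redirection feeds the file to read, line by line
}
```

Called as `print_genes genes.txt`, it processes the file one line at a time without loading it all at once.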

https://redd.it/1c0ngvz

@r_bash


sed and tr not changing whitespace when piped inside a bash script

Hey folks, I'm experiencing an odd occurrence where sed seems to be unable to change \s when running inside a bash script. My end goal is to create a CSV with newlines that I can open in Calc/Excel. Here's the code:

    # Set the start and end dates for the time period of cost report
    start_date=2024-02-01
    end_date=2024-03-01

    # Create tag-based usage report
    usage_report=$(aws ce get-cost-and-usage --time-period Start=$start_date,End=$end_date --granularity MONTHLY --metrics "UnblendedCost" --group-by Type=TAG,Key=Workstream --query 'ResultsByTime[0].Groups')

    # Format the usage and cost report by resource as a table
    report_table=$(echo "$usage_report" | jq -r '.[] | [.Keys[], .Metrics.UnblendedCost.Amount] | @csv')
    cleaned_report=$(echo "$report_table" | sed -u 's/\\s/\\n/g')
    echo $cleaned_report

In this example, $usage_report is:

[ { "Keys": [ "Workstream$blah" ], "Metrics": { "UnblendedCost": { "Amount": "1369.6332285425", "Unit": "USD" } } }, { "Keys": [ "Workstream$teams2" ], "Metrics": { "UnblendedCost": { "Amount": "5.3844278507", "Unit": "USD" } } }, { "Keys": [ "Workstream$team3" ], "Metrics": { "UnblendedCost": { "Amount": "257.3202611246", "Unit": "USD" } } }, { "Keys": [ "Workstream$team1" ], "Metrics": { "UnblendedCost": { "Amount": "23.2939083734", "Unit": "USD" } } } ]

So that's what comes in and is processed by jq, outputting:

"Workstream$blah","1369.6332285425" "Workstream$teams2","5.3844278507" "Workstream$team3","257.3202611246" "Workstream$team1","23.2939083734"

All I want to do with that output now is to replace the whitespace with newlines, so I do "$report_table" | sed -u 's/\\s/\\n/g', but the end result is the same as the $report_table input. I also tried using cleaned_report=$(echo "$report_table" | tr ' ' '\n'), but that also produced the same result. In contrast, if I write the output of this script to a test.csv file and then run sed s/\\s/\\n/g test.csv from the command line, it formats as intended:

"Workstream$blah","1369.6332285425"

"Workstream$teams2","5.3844278507"

"Workstream$team3","257.3202611246"

"Workstream$team1","23.2939083734"

Any guidance is appreciated!
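A possible explanation, sketched with made-up sample data: jq already prints one CSV row per line, but an unquoted `echo $var` lets the shell split the value on whitespace (newlines included) and rejoin the words with single spaces. That would make every attempt look like it had no effect. The variable names below are illustrative.

```bash
#!/usr/bin/env bash
# Two CSV rows separated by a newline, as jq -r ... @csv would print them.
report_table=$'"Workstream$blah","1369.6332285425"\n"Workstream$teams2","5.3844278507"'

unquoted=$(echo $report_table)     # word splitting: the newline becomes a space
quoted=$(echo "$report_table")     # quoting keeps the newline

printf 'unquoted:\n%s\n\nquoted:\n%s\n' "$unquoted" "$quoted"
```

If that is the cause, `printf '%s\n' "$report_table" > report.csv` would write the rows on separate lines with no sed or tr step at all.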

https://redd.it/1c0vy1i

@r_bash


An app for finding locations of text within large directories

I made an app called FindIt that makes it easier to find all occurrences of text within large directories and files.

I mainly made this because it's difficult to find where symbols are defined when decompiling .NET applications, but I'm sure it could be used outside of decompiling apps.

More information can be found in the GitHub repo.

https://github.com/ScripturaOpus/FindIt

https://redd.it/1c113uy

@r_bash


output not redirecting to a file.

    /usr/sbin/logrotate /etc/logrotate.conf -d 2>&1 > /tmp/log.txt

Creates an empty file while text flies past the screen. Why?
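If the command was written with `2>&1` before the file redirection, the order would explain this: redirections are applied left to right, so stderr is first pointed at the terminal (where stdout still goes) and only then is stdout moved to the file. A program that writes its messages to stderr, as logrotate appears to do in debug mode, then leaves the file empty. The sketch uses a stand-in function `emit` and temporary files, all hypothetical.

```bash
#!/usr/bin/env bash
# Stand-in for a program that writes to both streams.
emit() { echo "to stdout"; echo "to stderr" >&2; }

wrong_log=$(mktemp)
right_log=$(mktemp)

# Order as in the post: 2>&1 copies stderr to the current stdout (the terminal,
# captured here in $on_screen), and only afterwards is stdout sent to the file.
on_screen=$(emit 2>&1 > "$wrong_log")

# File redirection first, then 2>&1: both streams end up in the file.
emit > "$right_log" 2>&1
```

Applied to the command above, that would be `/usr/sbin/logrotate /etc/logrotate.conf -d > /tmp/log.txt 2>&1`.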

https://redd.it/1c1h8o6

@r_bash


Quickly find the largest files and folders in a directory + subs

This function will quickly return the largest files and folders in the directory and its subdirectories with the full path to each folder and file.

You just pass the number of results you want returned to the function.

You can get the function on GitHub [here](https://github.com/slyfox1186/noscript-repo/blob/main/Bash/Misc/Functions/big-files.sh).

To return 5 results for files and folders execute:

big_files 5

I saved this in my `.bash_functions` file and love using it to find stuff that is hogging space.
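For readers who want the general idea without opening the link, here is a rough sketch of how such a function could work. It is not the author's implementation, which lives in the linked repository; the name `big_files_sketch`, the default of 10 results and the use of `du -k` (sizes in KiB) are all assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical re-creation: list the N largest files and folders below the current directory.
big_files_sketch() {
    local count=${1:-10}
    echo "Largest files:"
    # du -k prints "size<TAB>path"; sort -rn orders by size, largest first
    find . -type f -exec du -k {} + 2>/dev/null | sort -rn | head -n "$count"
    echo "Largest folders:"
    du -k . 2>/dev/null | sort -rn | head -n "$count"
}
```

`big_files_sketch 5` would print the five largest files followed by the five largest folders, each with its path relative to the current directory.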

Cheers!

https://redd.it/1c1ioa5

@r_bash


Judge the setup script

I need feedback on a setup script:

    #!/bin/bash
    chmod 777 ./src/fbd.sh
    sudo pacman -S gum
    echo "Setup successful. Execute ./src/fbd.sh to run FBD"
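Some feedback in the form of a sketch, under the assumption that the goal is only to make the script runnable and to make sure gum is installed: set the executable bit instead of mode 777 (which also makes the file world-writable), skip the install when gum is already present, and print the success message only if every step worked. The function name `setup` is arbitrary.

```bash
#!/usr/bin/env bash
setup() {
    # The executable bit is enough; 777 would let any user modify the script.
    chmod +x ./src/fbd.sh || { echo "Cannot find ./src/fbd.sh" >&2; return 1; }
    # Only call pacman when gum is missing; --needed skips up-to-date packages.
    if ! command -v gum >/dev/null 2>&1; then
        sudo pacman -S --needed gum || { echo "Installing gum failed" >&2; return 1; }
    fi
    echo "Setup successful. Execute ./src/fbd.sh to run FBD"
}
```

Calling `setup` at the end of the script keeps the behaviour of the original while reporting failures instead of always claiming success.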


https://redd.it/1c1odwx

@r_bash


Make the list with size, path and filename

Hello,

find . -type f shows all files under the current directory. How can I simply split the path and the filename, and add the file size to each line of the output?

or

du -a . shows all files and directories under the current one. How can I hide the directories and split the path and the filename on each line?
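One way to get all three columns in a single pass, assuming GNU find is available (`-printf` is a GNU extension and is missing from BSD/macOS find): `%s` is the size in bytes, `%h` the directory part and `%f` the file name. The function name `list_with_sizes` is made up for illustration.

```bash
#!/usr/bin/env bash
# Print "size<TAB>directory<TAB>filename" for every regular file below a directory.
list_with_sizes() {
    find "${1:-.}" -type f -printf '%s\t%h\t%f\n'
}
```

Because only regular files are selected, directories never appear, and piping the result through `sort -n` would order the list by size.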

thanks


https://redd.it/1c1pi0i

@r_bash
