How can I create a bash script to check that there is packet activity on either host IP A or host IP B?

I have this bash script, but it is not working as intended: it gets stuck when only one of the hosts has packet activity. Is there a better way to solve the original problem? I do not really like having to manually check the generated /tmp/output files, but that is fine for now. I just need a way to support `OR` for either host instead of waiting for both to have 10 packets' worth of traffic.

    #!/bin/bash

    capturednstraffic() {
        tcpdump -i any port 53 and host 208.40.283.283 -nn -c 10 > /tmp/output1.txt
        tcpdump -i any port 53 and host 208.40.293.293 -nn -c 10 > /tmp/output2.txt
    }

    capturednstraffic & ping -c 10 www.instagram.com
    wait
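One possible approach, assuming "either host" means a single capture that stops after 10 DNS packets from A or B combined: a tcpdump filter expression accepts `or`, so both hosts can go into one capture instead of two sequential ones. The helper names `build_dns_filter` and `capture_dns`, the single output file, and the 192.0.2.x example addresses are assumptions of this sketch, not part of the post.

```bash
#!/usr/bin/env bash

# Build a pcap filter that matches DNS traffic to or from ANY of the hosts.
# Hypothetical helper; prints e.g. "port 53 and ( host A or host B )".
build_dns_filter() {
    local filter="" host
    for host in "$@"; do
        if [ -z "$filter" ]; then
            filter="host $host"
        else
            filter="$filter or host $host"
        fi
    done
    printf 'port 53 and ( %s )\n' "$filter"
}

# Capture 10 matching packets in total, whichever host they involve.
# Needs root; defined here but not invoked.
capture_dns() {
    tcpdump -i any -nn -c 10 "$(build_dns_filter "$@")" > /tmp/output.txt
}

# Example (as root):
#   capture_dns 192.0.2.1 192.0.2.2 & ping -c 10 www.instagram.com; wait
```

Because the packet count applies to the whole filter, the capture ends as soon as 10 packets have matched either host, so a silent host no longer blocks the script.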

https://redd.it/1eh259z

@r_bash

I have this bash noscript but it is not working as intended since it gets stuck on the case that only one of the hosts have packet activity and wondering if there is a better way to solve the original problem? I do not really like having to manually check the /tmp/output files generated but it is fine for now. I just need a way to support `OR` for either host instead of waiting for both to have 10 packets worth of traffic.

#!/bin/bash

capturednstraffic() {

tcpdump -i any port 53 and host 208.40.283.283 -nn -c 10 > /tmp/output1.txt

tcpdump -i any port 53 and host 208.40.293.293 -nn -c 10 > /tmp/output2.txt

}

capturednstraffic & ping -c 10 www.instagram.com

wait

https://redd.it/1eh259z

@r_bash

Reddit

From the bash community on Reddit

Explore this post and more from the bash community

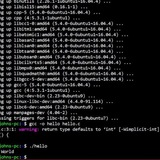

User Creation Script - Is there a better way?

I've been an admin for many years but never really learned to script. I've been working on this lately and have written a couple of scripts for creating/deleting users & files for when I want to do a lab.

The user creation and deletion scripts work but throw some duplicate errors related to groups. I'm wondering if there is a better way to do this.

Error on Creation Script:

https://preview.redd.it/gxucc73qfyfd1.png?width=510&format=png&auto=webp&s=a29d0783dc735633a5c9d0897ac1a4a701f5b56d

Here is the script I'm using:

    #!/bin/bash

    ### Declare Input File
    InputFile="/home/user/script/newUsers.csv"

    declare -a fname
    declare -a lname
    declare -a user
    declare -a dept
    declare -a pass

    ### Read Input File
    while IFS=, read -r FirstName LastName UserName Department Password; do
        fname+=("$FirstName")
        lname+=("$LastName")
        user+=("$UserName")
        dept+=("$Department")
        pass+=("$Password")
    done < "$InputFile"

    ### Loop through input file and create user groups and users
    for index in "${!user[@]}"; do
        sudo groupadd "${dept[$index]}"
        sudo useradd -g "${dept[$index]}" \
            -d "/home/${user[$index]}" \
            -s "/bin/bash" \
            -p "$(echo "${pass[$index]}" | openssl passwd -1 -stdin)" "${user[$index]}"
    done

    ### Finish Script

I'm guessing I probably need to sort the incoming CSV first and possibly run this as two separate loops, but I'm real green to scripting and not sure where to start with something like that.

I get similar errors on the delete process because users are still in groups during the loop until the final user is removed from a group.
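The duplicate errors most likely come from calling `groupadd` once per user rather than once per department. A minimal sketch of the "two loops" idea, assuming the CSV has no header row and five comma-separated fields with the department in the fourth: collect the distinct departments first, then create each group once. `unique_departments` is a hypothetical helper name.

```bash
#!/usr/bin/env bash

# Print each distinct department (4th CSV field) once, in first-seen order.
unique_departments() {
    local csv=$1 first last user dept pass
    declare -A seen
    while IFS=, read -r first last user dept pass || [[ -n $first ]]; do
        [[ -n $dept ]] || continue                 # skip rows without a department
        [[ -n ${seen[$dept]:-} ]] && continue      # already printed
        seen[$dept]=1
        printf '%s\n' "$dept"
    done < "$csv"
}

# Usage sketch (needs root, so it is left commented out):
#   while read -r dept; do
#       getent group "$dept" >/dev/null || sudo groupadd "$dept"
#   done < <(unique_departments newUsers.csv)
```

The `getent group` check also keeps a second run quiet when the groups already exist. No sorting is needed, because the associative array remembers which departments have been seen.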

https://redd.it/1eh4dye

@r_bash

How to run scripts in the background in Ubuntu?

Hello everyone,

I know that you can run your scripts in the background with “&”, but this option does not work so well for me. Are there other commands with which I can achieve a similar result?

Thanks for your help guys
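A job started with `&` alone stays tied to the terminal that launched it, so it can be stopped by a hangup when that session closes. Common alternatives are `nohup`, `setsid`, the `disown` builtin, or a terminal multiplexer such as tmux or screen. A minimal sketch using `nohup`; the helper name `run_detached` and the example paths are assumptions.

```bash
#!/usr/bin/env bash

# Start a command that ignores hangup (SIGHUP), with all output sent to a
# log file and stdin detached. Prints the PID of the background process.
run_detached() {
    local log=$1
    shift
    nohup "$@" > "$log" 2>&1 < /dev/null &
    printf '%s\n' "$!"
}

# Example:
#   pid=$(run_detached /tmp/job.log ./long_job.sh --flag)
#   tail -f /tmp/job.log
```

Redirecting all three standard streams is what lets the caller return immediately; without it the job can still hold the terminal open.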

https://redd.it/1ehb8dn

@r_bash

Can I push a config file and a script to run with ssh?

I have a script to run on a remote box, and there is a separate config file with variables in it that the script needs. What would be a smart way to handle this? Can I push both somehow?
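One option, assuming the config file is plain shell variable assignments and the script only reads those variables: concatenate the two and feed the result to a remote `bash -s` over ssh, so nothing has to be copied first. The helper name `bundle`, the file names, and `user@remote` are placeholders.

```bash
#!/usr/bin/env bash

# Emit the config followed by the script, so the variables are defined
# before the script body runs.
bundle() {
    local config=$1 script=$2
    cat -- "$config"
    printf '\n'          # guard against a config file with no trailing newline
    cat -- "$script"
}

# Example (user@remote is a placeholder):
#   bundle settings.conf job.sh | ssh user@remote 'bash -s'
```

This only works if the script does not read from stdin itself and does not source the config by path. Otherwise, copying both files with `scp` and then running the script through `ssh` is the more conventional route.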

https://redd.it/1ehtyty

@r_bash

QEMU-QuickBoot.sh | Zenity GUI launcher for quick deployment of QEMU Virtual Machines

QEMU-QuickBoot is a Bash script I made with the help of ChatGPT. It's designed to simplify the deployment of virtual machines (VMs) using QEMU, with a user-friendly GUI provided by Zenity. It lets users quickly create and boot VMs directly from their desktop, using connected physical devices or bootable image files as the source media.

- User-Friendly Interface: Uses Zenity to present a straightforward interface for selecting VM boot sources and configurations.
- Multiple Boot Options: Supports booting VMs from connected devices, various file formats (.vhd, .img, .iso), and ISO images with virtual drives or physical devices.
- Dynamic RAM Configuration: Allows users to specify the amount of RAM (in MB) allocated to the VM.
- BIOS and UEFI Support: Provides options for booting in BIOS or UEFI mode depending on the user's preference.
- Error Handling: Includes error handling to ensure smooth operation and user feedback throughout the VM setup process.

Script here on GitHub: https://github.com/GlitchLinux/QEMU-QuickBoot/tree/main

I appreciate any feedback or advice on how to improve this script!

Thank You!

https://redd.it/1ehv23e

@r_bash

Any "auto echo command" generator app or website?

Hello. I have been wondering if there is any "auto echo command" generating website or app. For example, I'd be able to put the color, format, symbols etc. easily using GUI sliders or dropdown menu, and it will generate the bash "echo" command to display it. If I select the text, and select red color, the whole text will become red; if I select bold, it will become bold. If I select both, it'll become both.

It will make it easier to generate the echo commands for various bash scripts.
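The escape sequences such a generator would emit are small enough to wrap in a function. A minimal sketch, not a replacement for a GUI tool: `style` is a hypothetical helper, its attribute names are this sketch's own, and the numbers are the standard SGR codes (1 bold, 4 underline, 31 to 34 basic colours).

```bash
#!/usr/bin/env bash

# style ATTR... -- TEXT
# Print an `echo -e` command that displays TEXT with the given attributes.
style() {
    local codes="" code
    while [ "$#" -gt 0 ] && [ "$1" != "--" ]; do
        case $1 in
            bold)      code=1 ;;
            underline) code=4 ;;
            red)       code=31 ;;
            green)     code=32 ;;
            yellow)    code=33 ;;
            blue)      code=34 ;;
            *) printf 'unknown attribute: %s\n' "$1" >&2; return 1 ;;
        esac
        codes=${codes:+$codes;}$code    # join codes with ";"
        shift
    done
    [ "$#" -gt 0 ] && shift             # drop the "--" separator
    # TEXT must not contain single quotes in this simplified version.
    printf '%s\n' "echo -e '\\033[${codes}m$*\\033[0m'"
}

# Example:
#   style red bold -- "Build failed"
#   eval "$(style green -- "All tests passed")"
```

Selecting several attributes simply joins their codes with `;`, which is how "red and bold" ends up as one sequence.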

https://redd.it/1eibzu4

@r_bash

Crontab to capture bash history to a file

The issue is that crontab starts a new session, so the history command shows nothing.

It works fine on the command line but not via crontab.

I also tried history <bash_history_file>.

I need to capture a user's history to a file daily.
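`history` is a builtin that lists the in-memory history of the current interactive shell. A cron job runs a non-interactive shell with no such list, so it prints nothing. The saved history lives in the user's history file (`~/.bash_history` by default), which a cron job can copy. A minimal sketch; `snapshot_history` and all paths are assumptions.

```bash
#!/usr/bin/env bash

# Copy a history file to DEST_DIR/bash_history-YYYY-MM-DD and print the
# path of the copy.
snapshot_history() {
    local src=$1 dest_dir=$2 target
    target="$dest_dir/bash_history-$(date +%F)"
    mkdir -p -- "$dest_dir" && cp -- "$src" "$target" && printf '%s\n' "$target"
}

# Example crontab line (daily at 23:55), assuming this file is saved as
# /usr/local/bin/snapshot_history.sh and ends with a call such as
#   snapshot_history /home/alice/.bash_history /var/backups/history
#
# 55 23 * * * /usr/local/bin/snapshot_history.sh
```

Bash normally writes the history file only when a shell exits, so commands from sessions that are still open will be missing unless those shells run `history -a` (for example from `PROMPT_COMMAND`).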

Thank you

https://redd.it/1eieobx

@r_bash

Connecting Docker to MySQL with Bash

Mac user here who has very little experience with bash. I am trying to dockerize my Spring Boot app and am struggling. I ran this command to start the image:

docker run -p 3307:3006 --name my-mysql -e MYSQL_ROOT_PASSWORD=root -d mysql:8.0.36

That worked fine, so I ran:

docker exec -t my-mysql /bin/bash

And then tried to log in with:

mysql -u root -p

After hitting Enter it just goes to a new line and does nothing. I have to hit Ctrl+C to get out; otherwise I can't do anything. What is going wrong here? Isn't it supposed to prompt me to enter a password?
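Two things in the commands above look like likely culprits. `docker exec -t` allocates a terminal but does not keep stdin open, so typed input never reaches the shell or the password prompt; `-it` does both. Separately, `-p 3307:3006` forwards to container port 3006, while MySQL listens on 3306 by default. A sketch with a dry-run wrapper so the corrected commands can be inspected before running them; `run`, `start_mysql` and `mysql_shell` are hypothetical helper names.

```bash
#!/usr/bin/env bash

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

start_mysql() {
    # host port 3307 -> container port 3306 (MySQL's default)
    run docker run -d --name my-mysql -p 3307:3306 \
        -e MYSQL_ROOT_PASSWORD=root mysql:8.0.36
}

mysql_shell() {
    # -i keeps stdin open, -t allocates a terminal; the interactive
    # password prompt needs both
    run docker exec -it my-mysql mysql -u root -p
}

# Example:
#   DRY_RUN=1 start_mysql    # show the command without running it
#   start_mysql && mysql_shell
```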

https://redd.it/1eifxtc

@r_bash

Question about Bash Function

Hi, I was reading [this link](https://github.com/dylanaraps/pure-bash-bible) to learn about using bash's own functionality instead of external commands or binaries, depending on the context.

I was looking at this function and have some questions:

    remove_array_dups() {
        # Usage: remove_array_dups "array"
        declare -A tmp_array

        for i in "$@"; do
            [[ $i ]] && IFS=" " tmp_array["${i:- }"]=1
        done

        printf '%s\n' "${!tmp_array[@]}"
    }

    remove_array_dups "${array[@]}"

Inside the loop, I guess `[[ $i ]]` is there to avoid adding empty strings as keys to the associative array. But then I don't understand why the array assignment uses a parameter expansion that substitutes a blank space when the variable is empty or unset.

Maybe the intent is to add an element with a blank key and value 1 when `$i` is empty or unset, but that doesn't make much sense, because `$i` can never be empty past `[[ $i ]] && ...`, can it?

I also do not understand why `IFS` is set to a blank space.

Please correct me if I am wrong. As I understand it, `IFS` matters when word splitting is performed after the various expansions, or when `"$*"` is expanded.

But if the expansion happens inside double quotes, word splitting is not performed, so the preceding `IFS` assignment would have no effect, right?

Another thing I do not understand: I have seen that, to keep an `IFS` change from affecting the shell environment, you can put it in front of certain utilities such as read (so it only affects that input line), use local or declare within a function, or use a subshell, since an assignment made in a subshell is not visible outside it (I do not know whether any shell attribute changes this behavior).

In this case, the assignment would change `IFS` globally, wouldn't it? Why would the function do that?

One more thing: in the short time I've been part of this community, I've noticed from other posts that, whenever possible, people prefer bash's own features over external utilities (grep, awk, sed, basename...).

Do you know of any source of information, besides the GitHub repo I linked above, that explains this?

At some point, I'd like to be able to apply all these concepts whenever possible and use bash itself instead of non-builtin tools.

Thank you very much in advance for your help.
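On the scope question, a small experiment may help. A line made up only of assignments, like `IFS=" " tmp_array[...]=1`, has no command for the temporary-assignment rule to apply to, so both assignments persist in the current shell. The sketch below compares the loop as written with a variant that declares `IFS` local first; the function names `leaky` and `scoped` are made up for this illustration.

```bash
#!/usr/bin/env bash

# Same loop as remove_array_dups: IFS is assigned without a command after
# it, so the new value outlives the function call.
leaky() {
    declare -A seen
    local i
    for i in "$@"; do
        [[ $i ]] && IFS=" " seen["$i"]=1
    done
}

# Declaring IFS local first confines any later assignment to this function;
# the caller's value is restored on return.
scoped() {
    local IFS
    declare -A seen
    local i
    for i in "$@"; do
        [[ $i ]] && IFS=" " seen["$i"]=1
    done
}

# Try it:
#   printf '%q\n' "$IFS"; scoped a b; printf '%q\n' "$IFS"
#   leaky a b;  printf '%q\n' "$IFS"
```

Comparing the printed values before and after each call shows whether the caller's `IFS` survived.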

https://redd.it/1ej6sg6

@r_bash

Guide to Customizing Your Prompt With Starship

I've recently switched from Oh-My-Zsh and Powerlevel10k to Starship for my shell prompt. While those are excellent tools, my config eventually felt a bit bloated. Oh-My-Zsh offers a "batteries included" approach with lots of features out of the box, but Starship's minimalist and lightweight nature made it easier for me to configure and maintain. Also, it's cross-platform and cross-shell, which is a nice bonus.

I recently made a video about my WezTerm and Starship config, but I kinda brushed over the Starship part. Since some people asked for a deeper dive, I made another video focusing on that.

Hope you find it helpful and if you're also using Starship, I'd love to see your configs! :)

https://www.youtube.com/watch?v=v2S18Xf2PRo

https://preview.redd.it/ynug9m9r5hgd1.png?width=2560&format=png&auto=webp&s=f99ddfcc4933f97a59d81a2ad4efa57333f0e820

https://redd.it/1ej7mq9

@r_bash

My first actually useful bash script

So this isn't my first script. I tend to do a lot of simple tasks with scripts, but never actually took the time to turn them into a useful project.

I've created a backup utility that can keep the configuration folders on one of my homelab servers backed up.

The main script is called from cron jobs, with the relevant section name passed in from the cron file.

#!/bin/bash

# backup-and-sync.sh

CFG_FILE=/etc/config.ini

GREEN="\033[0;32m"

YELLOW="\033[1;33m"

NC="\033[0m"

WORK_DIR="/usr/local/bin"

LOCK_FILE="/tmp/$1.lock"

SECTION=$1

# Set the working directory

cd "$WORK_DIR" || exit

# Function to log to Docker logs

log() {

local timeStamp=$(date "+%Y-%m-%d %H:%M:%S")

echo -e "${GREEN}${timeStamp}${NC} - $*" | tee -a /proc/1/fd/1

}

# Function to log errors to Docker logs with timestamp

log_error() {

local timeStamp=$(date "+%Y-%m-%d %H:%M:%S")

while read -r line; do

echo -e "${YELLOW}${timeStamp}${NC} - ERROR - $line" | tee -a /proc/1/fd/1

done

}

# Function to read the configuration file

read_config() {

local section=$1

eval "$(awk -F "=" -v section="$section" '

BEGIN { in_section=0; exclusions="" }

/^\[/{ in_section=0 }

$0 ~ "\\["section"\\]" { in_section=1; next }

in_section && !/^#/ && $1 {

gsub(/^ +| +$/, "", $1)

gsub(/^ +| +$/, "", $2)

if ($1 == "exclude") {

exclusions = exclusions "--exclude=" $2 " "

} else {

print $1 "=\"" $2 "\""

}

}

END { print "exclusions=\"" exclusions "\"" }

' $CFG_FILE)"

}

# Function to mount the CIFS share

mount_cifs() {

local mountPoint=$1

local server=$2

local share=$3

local user=$4

local password=$5

mkdir -p "$mountPoint" 2> >(log_error)

mount -t cifs -o username="$user",password="$password",vers=3.0 //"$server"/"$share" "$mountPoint" 2> >(log_error)

}

# Function to unmount the CIFS share

unmount_cifs() {

local mountPoint=$1

umount "$mountPoint" 2> >(log_error)

}

# Function to check if the CIFS share is mounted

is_mounted() {

local mountPoint=$1

mountpoint -q "$mountPoint"

}

# Function to handle backup and sync

handle_backup_sync() {

local section=$1

local sourceDir=$2

local mountPoint=$3

local subfolderName=$4

local exclusions=$5

local compress=$6

local keep_days=$7

local server=$8

local share=$9

if [ "$compress" -eq 1 ]; then

# Create a timestamp for the backup filename

timeStamp=$(date +%d-%m-%Y-%H.%M)

mkdir -p "${mountPoint}/${subfolderName}"

backupFile="${mountPoint}/${subfolderName}/${section}-${timeStamp}.tar.gz"

#log "tar -czvf $backupFile -C $sourceDir $exclusions . 2> >(log_error)"

log "Creating archive of ${sourceDir}"

tar -czvf "$backupFile" -C "$sourceDir" $exclusions . 2> >(log_error)

log "//${server}/${share}/${subfolderName}/${section}-${timeStamp}.tar.gz was successfully created."

else

rsync_cmd=(rsync -av --inplace --delete $exclusions "$sourceDir/" "$mountPoint/${subfolderName}/")

#log "${rsync_cmd[@]}"

log "Creating a backup of ${sourceDir}"

"${rsync_cmd[@]}" 2> >(log_error)

log "Successful backup located in //${server}/${share}/${subfolderName}."

fi

# Delete compressed backups older than specified days

find "$mountPoint/$subfolderName" -type f -name "${section}-*.tar.gz" -mtime +${keep_days} -exec rm {} \; 2> >(log_error)

}

# Check if the script is run as superuser

if [[ $EUID -ne 0 ]]; then

log_error <<< "This script must be run as root"

exit 1

fi

# Main script functions

if [[ -n "$SECTION" ]]; then

log "Running backup for section: $SECTION"

(

flock -n 200 || {

log "Another script is already running. Exiting."

exit 1

}

read_config "$SECTION"

# Set default values for missing fields

: ${server:=""}

: ${share:=""}

: ${user:=""}

: ${password:=""}

: ${source:=""}

: ${compress:=0}

: ${exclusions:=""}

: ${keep:=3}

: ${subfolderName:=$SECTION} # Will implement in a future release

MOUNT_POINT="/mnt/$SECTION"

if [[ -z "$server" || -z "$share" || -z "$user" || -z "$password" || -z "$source" ]]; then

log "Skipping section $SECTION due to missing required fields."

exit 1

fi

log "Processing section: $SECTION"

mount_cifs "$MOUNT_POINT" "$server" "$share" "$user" "$password"

if is_mounted "$MOUNT_POINT"; then

log "CIFS share is mounted for section: $SECTION"

handle_backup_sync "$SECTION" "$source" "$MOUNT_POINT" "$subfolderName" "$exclusions" "$compress" "$keep" "$server" "$share"

unmount_cifs "$MOUNT_POINT"

log "Backup and sync finished for section: $SECTION"

else

log "Failed to mount CIFS share for section: $SECTION"

fi

) 200>"$LOCK_FILE"

else

log "No section specified. Exiting."

exit 1

fi

This reads in from the config.ini file.

# Sample backups configuration

[Configs]

server=192.168.1.208

share=Backups

user=backup

password=password

source=/src/configs

compress=0

schedule=30 1-23/2 * * *

subfolderName=configs

[ZIP-Configs]

server=192.168.1.208

share=Backups

user=backup

password=password

source=/src/configs

subfolderName=zips

compress=1

keep=3

exclude=homeassistant

exclude=cifs

exclude=*.sock

schedule=0 0 * * *
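The `read_config` function earlier in the post passes awk output to `eval`, so a config value containing `"` or `$(...)` would be interpreted as shell code, and the space-joined `exclusions` string depends on unquoted word splitting later on. A minimal pure-bash sketch that avoids both, under some assumptions: `read_section`, `cfg` and `excludes` are hypothetical names, and unlike the awk version this one does not trim spaces around `=`.

```bash
#!/usr/bin/env bash

declare -A cfg        # key/value pairs of the requested section
declare -a excludes   # one --exclude=PATTERN argument per element

# read_section FILE SECTION : fill cfg and excludes without using eval.
read_section() {
    local file=$1 want=$2 line key value current=""
    local header='^\[(.+)\]$'
    cfg=()
    excludes=()
    while IFS= read -r line || [[ -n $line ]]; do
        [[ -z $line || $line == \#* ]] && continue    # blank line or comment
        if [[ $line =~ $header ]]; then
            current=${BASH_REMATCH[1]}                # entering a new section
            continue
        fi
        [[ $current == "$want" && $line == *=* ]] || continue
        key=${line%%=*}       # text before the first "="
        value=${line#*=}      # everything after it, later "=" included
        if [[ $key == exclude ]]; then
            excludes+=("--exclude=$value")
        else
            cfg[$key]=$value
        fi
    done < "$file"
}

# Example:
#   read_section /etc/config.ini ZIP-Configs
#   tar -czf "$backupFile" -C "${cfg[source]}" "${excludes[@]}" .
```

Keeping the exclusions in an array means each pattern, such as `*.sock`, reaches tar or rsync as exactly one argument, with no globbing by the shell.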

The scripts run in a Docker container, which uses this other script to set up the environment and cron jobs and to check mount points on container startup.

#!/bin/bash

# entry.sh

CFG_FILE=/etc/config.ini

GREEN="\033[0;32m"

YELLOW="\033[1;33m"

NC="\033[0m"

error_file=$(mktemp)

WORK_DIR="/usr/local/bin"

# Function to log to Docker logs

log() {

local TIMESTAMP=$(date "+%Y-%m-%d %H:%M:%S")

echo -e "${GREEN}${TIMESTAMP}${NC} - $*"

}

# Function to log errors to Docker logs with timestamp

log_error() {

local TIMESTAMP=$(date "+%Y-%m-%d %H:%M:%S")

while read -r line; do

echo -e "${YELLOW}${TIMESTAMP}${NC} - ERROR - $line" | tee -a /proc/1/fd/1

done

}

# Function to synchronise the timezone

set_tz() {

if [ -n "$TZ" ] && [ -f "/usr/share/zoneinfo/$TZ" ]; then

echo "$TZ" > /etc/timezone

ln -snf "/usr/share/zoneinfo/$TZ" /etc/localtime

log "Setting timezone to ${TZ}"

else

log_error <<< "Invalid or unset TZ variable: $TZ"

fi

}

# Function to read the configuration file

read_config() {

local section=$1

eval "$(awk -F "=" -v section="$section" '

BEGIN { in_section=0; exclusions="" }

/^\[/{ in_section=0 }

$0 ~ "\\["section"\\]" { in_section=1; next }

in_section && !/^#/ && $1 {

gsub(/^ +| +$/, "", $1)

gsub(/^ +| +$/, "", $2)

if ($1 == "exclude") {

exclusions = exclusions "--exclude=" $2 " "

} else {

if ($1 == "schedule") {

# Escape double quotes and backslashes

gsub(/"/, "\\\"", $2)

}

print $1 "=\"" $2 "\""

}

}

END { print "exclusions=\"" exclusions "\"" }

' $CFG_FILE)"

}

# Function to check the mountpoint

check_mount() {

local mount_point=$1

if ! mountpoint -q "$mount_point"; then

log_error <<< "CIFS share is not mounted at $mount_point"

exit 1

fi

}

mount_cifs() {

local mount_point=$1

local user=$2

local password=$3

local server=$4

local share=$5

mkdir -p "$mount_point" 2> >(log_error)

mount -t cifs -o username="$user",password="$password",vers=3.0 //"$server"/"$share" "$mount_point" 2> >(log_error)

}

# Create or clear the crontab file

sync_cron() {

crontab -l > mycron 2> "$error_file"

if [ -s "$error_file" ]; then

log_error <<< "$(cat "$error_file")"

rm "$error_file"

: > mycron

else

rm "$error_file"

fi

# Loop through each section and add the cron job

for section in $(awk -F '[][]' '/\[[^]]+\]/{print $2}' $CFG_FILE); do

read_config "$section"

if [[ -n "$schedule" ]]; then

echo "$schedule /usr/local/bin/backup.sh $section" >> mycron

fi

done

}

# Set the working directory

cd "$WORK_DIR" || exit

# Set the timezone as defined by Environmental variable

set_tz

# Install the new crontab file

sync_cron

crontab mycron 2> >(log_error)

rm mycron 2> >(log_error)

# Ensure cron log file exists

touch /var/log/cron.log 2> >(log_error)

# Start cron

log "Starting cron service..."

cron 2> >(log_error) && log "Cron started successfully"

# Check if cron is running

if ! pgrep cron > /dev/null; then

log "Cron is not running."

exit 1

else

log "Cron is running."

fi

# Check if the CIFS shares are mountable

log "Checking all shares are mountable"

for section in $(awk -F '[][]' '/\[[^]]+\]/{print $2}' $CFG_FILE); do

read_config "$section"

MOUNT_POINT="/mnt/$section"

mount_cifs "$MOUNT_POINT" "$user" "$password" "$server" "$share"

check_mount "$MOUNT_POINT"

log "$section: //$server/$share successfully mounted at $MOUNT_POINT... Unmounting"

umount "$MOUNT_POINT" 2> >(log_error)

done

log "All shares mounted successfully. Starting cifs-backup"

log "cifs-backup now running"

# Print a message indicating we are about to tail the log

log "Tailing the cron log to keep the container running"

# tail -f blocks in the foreground, so nothing placed after it would run

tail -f /var/log/cron.log

I'm sure there might be better ways of achieving the same thing. But the satisfaction that I get from knowing that I've done it myself, can't be beaten.

Let me know what you think, or anything that I could have done better.
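One possible refinement, sketched here rather than taken from the scripts above: the `eval` in `read_config` could be avoided by parsing the section in pure bash. The function name `read_section` and the arrays `CONFIG` and `EXCLUDES` are hypothetical, the sketch assumes bash 4.2 or later, and it assumes keys and values have no surrounding whitespace.

```shell
#!/bin/bash
# read_section FILE SECTION (hypothetical helper, not part of the original scripts)
# Fills the global associative array CONFIG with key=value pairs from one
# section and the indexed array EXCLUDES with one --exclude=... per entry.
read_section() {
    local file=$1 wanted=$2 line key value current=""
    local re='^\[(.+)\]$'
    declare -gA CONFIG=()
    EXCLUDES=()
    while IFS= read -r line || [[ -n $line ]]; do
        # Skip blank lines and comments
        [[ -z $line || $line == \#* ]] && continue
        # A [Section] header switches the current section
        if [[ $line =~ $re ]]; then
            current=${BASH_REMATCH[1]}
            continue
        fi
        # Ignore lines outside the wanted section or without an "="
        [[ $current == "$wanted" && $line == *=* ]] || continue
        key=${line%%=*}     # text before the first "="
        value=${line#*=}    # text after the first "="
        if [[ $key == exclude ]]; then
            EXCLUDES+=( "--exclude=$value" )
        else
            CONFIG[$key]=$value
        fi
    done < "$file"
}
```

After `read_section /etc/config.ini ZIP-Configs`, values would be read as `"${CONFIG[server]}"`, and the exclusions could be passed on as `"${EXCLUDES[@]}"`, which keeps patterns such as `*.sock` from being expanded by the shell.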

https://redd.it/1ejcdz4

@r_bash


How can I center the output of this Bash command?

#!/bin/bash

#Stole it from https://www.putorius.net/how-to-make-countdown-timer-in-bash.html

GREEN='\033[0;32m'

RED='\033[0;31m'

YELLOW='\033[0;33m'

RESET='\033[0m'

#------------------------

read -p "H:" hour

read -p "M:" min

read -p "S:" sec

#-----------------------

tput civis

#-----------------------

if [[ -z "$hour" ]]; then

hour=0

fi

if [[ -z "$min" ]]; then

min=0

fi

if [[ -z "$sec" ]]; then

sec=0

fi

#----------------------

echo -ne "${GREEN}"

while [[ $hour -ge 0 ]]; do

while [[ $min -ge 0 ]]; do

while [[ $sec -ge 0 ]]; do

if [[ "$hour" -eq "0" && "$min" -eq "0" ]]; then

echo -ne "${YELLOW}"

fi

if [[ "$hour" -eq "0" && "$min" -eq "0" && "$sec" -le "10" ]]; then

echo -ne "${RED}"

fi

echo -ne "$(printf "%02d" $hour):$(printf "%02d" $min):$(printf "%02d" $sec)\033[0K\r"

let "sec=sec-1"

sleep 1

done

sec=59

let "min=min-1"

done

min=59

let "hour=hour-1"

done

echo -e "${RESET}"
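One way the centering could be approached, as a sketch rather than a tested drop-in: ask `tput` for the terminal size, compute the starting column from the text length, and move the cursor there before printing. The helper names `center_col` and `print_centered` are hypothetical.

```shell
#!/bin/bash
# center_col TEXT [WIDTH] (hypothetical helper)
# Prints the column at which TEXT must start to appear centered.
# WIDTH defaults to the current terminal width reported by tput.
center_col() {
    local text=$1 width=${2:-$(tput cols)}
    local col=$(( (width - ${#text}) / 2 ))
    (( col < 0 )) && col=0
    printf '%s' "$col"
}

# print_centered TEXT (hypothetical helper)
# Moves the cursor to the middle row and the centered column, then prints.
print_centered() {
    local text=$1
    local row=$(( $(tput lines) / 2 ))
    tput cup "$row" "$(center_col "$text")"
    printf '%s' "$text"
}
```

Inside the innermost loop, the `echo -ne ... \r` line could then be swapped for something like `print_centered "$(printf '%02d:%02d:%02d' "$hour" "$min" "$sec")"`, ideally after a single `clear` before the loops start.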

https://redd.it/1ejuy7c

@r_bash


Help creating a custom fuzzy search command script

https://preview.redd.it/6g4rr1jdengd1.png?width=1293&format=png&auto=webp&s=2f0008099046d63222afb9f7a1330e7be5a18f57

I want to interactively query Nix packages using the `nix-search` command provided by `nix-search-cli`.

I'm not very experienced with CLI tools. Any ideas on how to make this work?

https://redd.it/1ejv53s

@r_bash


Parameter expansion inserts "./" into copied string


I'm trying to loop through the results of `screen -ls` to look for sessions relevant to what I'm doing and add them to an array. The problem is that I need to use parameter expansion to do it, since screen sessions have an indeterminate-length number in front of them, and that adds `./` to the result. Here's the code I have so far:

SERVERS=()

for word in `screen -list` ; do

if [[ $word == *".servers_minecraft_"* && $word != *".servers_minecraft_playit" ]] ;

then

SERVERS+=${word#*".servers_minecraft_"}

fi

done

echo ${SERVER[*]}

where `echo ${SERVER[*]}` outputs `./MyTargetString` instead of `MyTargetString`. I already tried using parameter expansion to chop off `./`, but of course that just reinserts it anyway.

https://redd.it/1ekc3rf
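For comparison, here is a minimal sketch of the same idea that reads the session list line by line and keeps every expansion quoted. It is not a diagnosis of where the `./` comes from. The function name `collect_servers` is hypothetical, and the sketch assumes the session name is the first whitespace-separated field on each line of `screen -ls` output.

```shell
#!/bin/bash
# collect_servers (hypothetical helper)
# Reads screen -ls style text on stdin and fills the global array SERVERS
# with the part of each session name after ".servers_minecraft_".
collect_servers() {
    local word
    SERVERS=()
    # read strips leading whitespace and puts the first field into word
    while read -r word _; do
        if [[ $word == *.servers_minecraft_* && $word != *.servers_minecraft_playit ]]; then
            # "#*.servers_minecraft_" removes the shortest prefix that ends
            # with the marker, so the leading PID of any length is dropped
            SERVERS+=( "${word#*.servers_minecraft_}" )
        fi
    done
}
```

It would be called as `collect_servers < <(screen -ls)`, followed by `printf '%s\n' "${SERVERS[@]}"`. Appending with `+=( ... )` adds a new array element each time, whereas `+=` without parentheses appends text to the first element.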

@r_bash


curl: (3) URL using bad/illegal format or missing URL error using two parameters

Hello,

I am getting the error above when trying to use the curl command -b -j with the cookies. When just typing in -b or -c then it works perfectly, however, not when applying both parameters. Do you happen to know why?

https://redd.it/1ekv0am

@r_bash


Remotely executing a screen command doesn't work from a script, but works manually

I'm working on the thing I got set up with help in [this thread](https://www.reddit.com/r/bash/comments/1egamw2/comment/lfwxi38/?utm_source=share&utm_medium=web3x&utm_name=web3xcss&utm_term=1&utm_content=share_button). I've now got a new Terminal window with each of my screens in a different tab!

The problem is that now, when I try to do my remote execution outside the first loop, it doesn't work. I thought maybe it had to do with being part of a different command, but pasting that `echo hello` command into Terminal and replacing the variable name manually works fine.

gnome-terminal -- /bin/bash -c '

gnome-terminal --title="playit.gg" --tab -- screen -r servers_minecraft_playit

for SERVER in "$@" ; do

gnome-terminal --title="$SERVER" --tab -- screen -r servers_minecraft_$SERVER

done

' _ "${SERVERS[@]}"

for SERVER in "${SERVERS[@]}"

do

echo servers_minecraft_$SERVER

screen -S servers_minecraft_$SERVER -p 0 -X stuff "echo hello\n"

done;;

Is there anything I can do to fix it? The output of `echo servers_minecraft_$SERVER` matches the name of the screen session, so I don't think it could be a substitution issue.
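One detail that may be worth ruling out, offered as a guess rather than a diagnosis: inside plain double quotes, bash passes `\n` to `screen` as the two characters backslash and `n`, not as a newline. Sending a real newline with `$'\n'` removes any doubt about how that sequence is interpreted. The helper name `screen_send` below is hypothetical.

```shell
#!/bin/bash
# screen_send SESSION COMMAND (hypothetical helper)
# Types COMMAND into window 0 of the named screen session and presses Enter.
# $'\n' is a real newline character, unlike "\n" inside double quotes.
screen_send() {
    local session=$1 command=$2
    screen -S "$session" -p 0 -X stuff "$command"$'\n'
}
```

In the loop above this would be used as `screen_send "servers_minecraft_$SERVER" "echo hello"`.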

https://redd.it/1el57w2

@r_bash


Pulling Variables from a Json File

I'm looking for a snippet of script that will let me pull variables from a JSON file and pass them into a bash script. I mostly use PowerShell, so this is a bit like writing left-handed for me so far: same concept with a different execution.
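A minimal sketch of one common approach, assuming the `jq` command-line tool is installed. The helper name `json_get`, the file name `settings.json`, and its keys are all made up for illustration.

```shell
#!/bin/bash
# json_get FILE FILTER (hypothetical helper)
# Prints the value selected by a jq filter; -r prints strings without
# their surrounding JSON quotes.
json_get() {
    local file=$1 filter=$2
    jq -r "$filter" "$file"
}

# Example usage (file name and keys are placeholders):
#   server=$(json_get settings.json '.server')
#   port=$(json_get settings.json '.port')
```

Command substitution plays roughly the role that assigning a property of `ConvertFrom-Json` output plays in PowerShell: each value lands in an ordinary shell variable.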

https://redd.it/1eljlfv

@r_bash


Better autocomplete (like fish)


If I use the fish shell, I get a nice autocomplete. For example, `git switch TABTAB` looks like this:

❯ git switch tg/avoid-warning-event-that-getting-cloud-init-output-failed

tg/installimage-async (Local Branch)

main (Local Branch)

tg/disable-bm-e2e-1716772 (Local Branch)

tg/rename-e2e-cluster-to-e2e (Local Branch)

tg/avoid-warning-event-that-getting-cloud-init-output-failed (Local Branch)

tg/fix-lychee-show-unknown-http-status-codes (Local Branch)

tg/fix-bm-e2e-1716772 (Local Branch)

tg/fix-lychee (Local Branch)

Somehow it is sorted in a really usable way. The latest branches are at the top.

With Bash I only get a long list that appears to be sorted alphabetically. This is hard to read if there are many branches.

Is there a way to get such a nice autocomplete in Bash?
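A rough sketch of one direction, not a replacement for git's own completion script: a small completion function that lists local branches by most recent commit, registered with `-o nosort` so that readline keeps that order (this option needs bash 4.4 or later). The function `_recent_branches` and the wrapper command `gsw` are hypothetical names.

```shell
#!/bin/bash
# _recent_branches (hypothetical completion function)
# Offers local branches, newest commit first, filtered by the current word.
_recent_branches() {
    local cur=${COMP_WORDS[COMP_CWORD]}
    local branch
    COMPREPLY=()
    while IFS= read -r branch; do
        [[ $branch == "$cur"* ]] && COMPREPLY+=( "$branch" )
    done < <(git for-each-ref --sort=-committerdate \
                 --format='%(refname:short)' refs/heads/ 2>/dev/null)
}

# gsw is a hypothetical wrapper so git's own completion stays untouched
gsw() { git switch "$@"; }

# -o nosort asks readline not to re-sort the candidates alphabetically
complete -o nosort -F _recent_branches gsw
```

With this sourced from `~/.bashrc`, `gsw TABTAB` would list branches by recency. It still prints a plain list rather than fish's interactive menu with descriptions.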

https://redd.it/1eljld2

@r_bash
