Running via cronjob, any way to check the server load and try again later if it's too high?


I'm writing a script that I'll run via a cron job at around 1am. It'll take about 15 minutes to complete, so I only want to run it if the server load is low.

This is where I am:

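```bash
attempt=0

# server load is less than 3 and there have been fewer than 5 attempts
# (bash arithmetic is integer-only, so awk truncates the 1-minute load average with int())
if (( $(awk '{ print int($1) }' < /proc/loadavg) < 3 && attempt < 5 ))
then
    # do stuff
    :
else
    # server load is 3 or more, try again in an hour
    attempt=$(( attempt + 1 ))
fi
```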

The question is, how do I get it to stop and try again in an hour without tying up server resources?

My original solution: create an empty text file and touch it upon completion; then the beginning of the script would look at its last-modified time and stop if that is less than 24 hours ago. Then set 5 separate cron jobs, knowing that 4 of them should fail every time.

Is there a better way?
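One way to combine the stamp-file idea with a single crontab entry, sketched under assumptions: let cron start the script every hour in the window (for example `0 1-5 * * *`) and have the script decide whether to do anything. A cron run that exits immediately costs almost nothing, and a `sleep 3600` inside a script also uses essentially no CPU while it waits. The function name, stamp path and threshold below are hypothetical, and reading `/proc/loadavg` assumes Linux.

```bash
#!/usr/bin/env bash
# Sketch: one crontab entry such as
#   0 1-5 * * * /root/nightly.sh
# starts the script at 1am, 2am, 3am, 4am and 5am; should_run decides whether to work.

# should_run STAMP MAX_LOAD
# Succeeds only if STAMP does not exist or was last modified more than 24 hours ago,
# and the 1-minute load average (truncated to an integer) is below MAX_LOAD.
should_run() {
    local stamp=$1 max_load=$2 load
    # find -mmin -1440 prints the file only if it was modified within the last 1440 minutes
    if [[ -e $stamp && -n $(find "$stamp" -mmin -1440) ]]; then
        return 1    # the job already completed within the last 24 hours
    fi
    # bash arithmetic is integer-only, so let awk drop the fractional part
    load=$(awk '{ print int($1) }' /proc/loadavg)
    (( load < max_load ))
}

# Hypothetical usage inside nightly.sh:
#   stamp=/var/tmp/nightly.done
#   if should_run "$stamp" 3; then
#       # ... the 15-minute job ...
#       touch "$stamp"
#   fi
```

With this layout, a run that finds the load too high simply exits, and the next hourly run tries again until the stamp file shows the job is done.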

https://redd.it/1f90a2b

@r_bash


Sending mail through bash, is mailx still the right option?


I'm writing a script that will be run via a cron job late at night, and I'd like it to email the results to me.

When I run `man mail`, the result is mailx. I can't find anyone talking about mailx in the last decade, though! Is this still the best way to send mail through bash, or has it been replaced with something else?

If mailx is still right, does the `[-r from_address]` need to be a valid account on the server? I don't see anything about it being validated, so it seems like it could be anything :-O Ideally I would use root@myserver.com, which is the address when I get other server-related emails, but I'm not sure that I have a username/password for it.

This is the man page for mailx:

```
NAME
    mailx - send and receive Internet mail

SYNOPSIS
    mailx [-BDdEFintv~] [-s subject] [-a attachment] [-c cc-addr] [-b bcc-addr]
          [-r from-addr] [-h hops] [-A account] [-S variable[=value]] to-addr . . .
    mailx [-BDdeEHiInNRv~] [-T name] [-A account] [-S variable[=value]] -f [name]
    mailx [-BDdeEinNRv~] [-A account] [-S variable[=value]] [-u user]
```
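A minimal sketch for the use case in the post, using only the `-s` and `-r` options from the synopsis above. The function name and addresses are hypothetical. mailx itself does not authenticate the `-r` address; whether a message with that From address is accepted and delivered depends on the local MTA and on the recipient's SPF/DMARC checks, so it is worth a test send first. Most cron implementations also mail whatever a job prints to the crontab owner (or `MAILTO`), so plain `printf` output may already reach you.

```bash
# report_mail SUBJECT RECIPIENT [FROM]
# Sends stdin as the message body; FROM defaults to a hypothetical root address.
report_mail() {
    local subject=$1 to=$2 from=${3:-root@myserver.com}
    mailx -s "$subject" -r "$from" "$to"
}

# Hypothetical usage from a cron job:
#   printf '%s\n' "$results" | report_mail "Nightly cleanup" you@example.com
```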

https://redd.it/1f8zn0x

@r_bash


Any way to tell if a script is run via the command line versus cron?

Inside a bash script, is there a way to tell whether the script was run from the command line or from crontab?

I know that I can pass an argument, like so:

```bash
bash foo.sh bar
```

And then in the script, use:

```bash
if [ "$1" = "bar" ]
then
    # it was run via the command line
    :
fi
```

But is that the best way?

The goal here would be to printf results to the screen if it's run from the command line, or email them if it's run from crontab.
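An alternative that needs no argument, sketched under assumptions: test whether the script is attached to a terminal. Under cron, stdin and stdout are not terminals, so `[[ -t 0 && -t 1 ]]` is false there and true in a normal interactive run. Note that redirecting output to a file from the command line also counts as "not a terminal". `is_interactive` is a hypothetical name, and the mail command in the usage comment is only one option.

```bash
# is_interactive: succeeds when both stdin and stdout are terminals.
# Under cron neither is, so this returns false there.
is_interactive() {
    [[ -t 0 && -t 1 ]]
}

# Hypothetical usage at the end of the script:
#   if is_interactive; then
#       printf '%s\n' "$results"
#   else
#       printf '%s\n' "$results" | mailx -s "Nightly results" root
#   fi
```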

https://redd.it/1f93hp3

@r_bash


A Bash + Python tool to watch a target in Satellite Imagery

I built a Bash + Python tool to watch a target in satellite imagery: [https://github.com/kamangir/blue-geo/tree/main/blue\_geo/watch](https://github.com/kamangir/blue-geo/tree/main/blue_geo/watch)

Here is the GitHub repo: [https://github.com/kamangir/blue-geo](https://github.com/kamangir/blue-geo). The tool is also pip-installable: [https://pypi.org/project/blue-geo/](https://pypi.org/project/blue-geo/)

Here are three examples:

1. The recent Chilcotin River Landslide in British Columbia.

2. Burning Man 2024.

3. Mount Etna.

https://i.redd.it/z0b42i6huwmd1.gif

https://i.redd.it/y53fih6huwmd1.gif

https://i.redd.it/jh5cch6huwmd1.gif

This is how the tool is called,

```bash
u/batch eval - \
    blue_geo watch - \
    target=burning-man-2024 \
    to=aws_batch - \
    publish \
    geo-watch-2024-09-04-burning-man-2024-a
```

This is how a target is defined,

```yaml
burning-man-2024:
  catalog: EarthSearch
  collection: sentinel_2_l1c
  params:
    height: 0.051
    width: 0.12
  query_args:
    datetime: 2024-08-18/2024-09-15
    lat: 40.7864
    lon: -119.2065
    radius: 0.01
```

It runs a map-reduce on AWS Batch.

All targets are watched on Sentinel-2 through Copernicus and EarthSearch.

https://redd.it/1f9cvyx

@r_bash


lolcat reconfiguration help needed please

Was hoping you could help out a total noob. You may have seen this script: lolcat piped out for all commands. It's fun, it's nice, but it creates some unwanted behavior at times. It's also not my script (I'm a noob). However, I thought, at least for my purposes, it would be a better script if exclusion commands could be added to it. For example, this script 'lolcats' all commands, including things like 'exit', which prevents them from executing. So I'd like to be able to add a list of commands in the .bashrc script that are excluded from lolcat, such as 'exit'. Any help is appreciated. Thanks.

```bash
lol()
{
    if [ -t 1 ]; then
        "$@" | lolcat
    else
        "$@"
    fi
}

bind 'RETURN: "\e[1~lol \e[4~\n"'
```

Or this one, which creates aliases. But I'd like to do the opposite: instead of adding every command that should go through lolcat, create an exclusion list of commands that should not.

```bash
lol()
{
    if [ -t 1 ]; then
        "$@" | lolcat
    else
        "$@"
    fi
}

COMMANDS=(
    ls
    cat
)

for COMMAND in "${COMMANDS[@]}"; do
    alias "${COMMAND}=lol ${COMMAND}"
    alias ".${COMMAND}=$(which "${COMMAND}")"
done
```
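A minimal sketch of the exclusion-list idea, assuming the `bind` approach above, which turns every command line into `lol <command line>`. The reason `exit` misbehaves there is that `"$@" | lolcat` runs the command inside a pipeline subshell, so `exit` (or `cd`) only affects that subshell. In this version, excluded commands run directly inside the function, in the current shell; everything else is piped through `lolcat` only when stdout is a terminal, as before. The array name `LOL_EXCLUDE` and its contents are hypothetical.

```bash
# Commands that must not be piped through lolcat (hypothetical list; edit to taste).
# Builtins like exit and cd have to run in the current shell, not in a pipeline subshell.
LOL_EXCLUDE=(exit cd exec export unset alias vim less top)

lol()
{
    local skip
    for skip in "${LOL_EXCLUDE[@]}"; do
        if [[ $1 == "$skip" ]]; then
            "$@"        # run directly: no pipe, so it affects this shell
            return
        fi
    done

    if [[ -t 1 ]]; then
        "$@" | lolcat
    else
        "$@"
    fi
}
```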

https://redd.it/1f9k1q5

@r_bash


Missing final newline: `| while read -r line; do ...`

I just discovered that this does not work as I expect it to:

```bash
echo -en "bar\nfoo" | while read var;do echo $var; done
```

This prints only "bar" but not "foo", because the final newline is missing.

For my current use case, I found a workaround:

```bash
echo -en "bar\nfoo"| sort | while read var;do echo $var; done
```

In my case it is ok to sort the lines.

How do you solve this, so that it is ok if the final line does not have a newline?
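A common fix is to let the loop run once more when `read` hits end-of-file but has still stored a partial line. `read` returns a non-zero status at EOF even if it filled the variable, so `|| [[ -n $line ]]` catches that last line; `IFS=` and `-r` keep leading whitespace and backslashes intact. A minimal sketch (the function name is made up for this example):

```bash
# read_all_lines: print every input line, including a final line that
# lacks a trailing newline.
read_all_lines() {
    local line
    # read fails at EOF even when it filled $line, so give that partial line one more pass
    while IFS= read -r line || [[ -n $line ]]; do
        printf '%s\n' "$line"
    done
}

printf 'bar\nfoo' | read_all_lines
# prints:
# bar
# foo
```

Unlike the `sort` workaround, this keeps the original line order.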

https://redd.it/1f9pgy9

@r_bash


Has anyone encountered 'An error occurred in before all hook' when using ShellSpec?

I have implemented unit tests for a shell script using ShellSpec, and I always get the above error in both 'before all' and 'after all'. Even though the log contains exit code 0, which indicates there is no error, none of my tests are executing.

I have added extra logging and also redirected the errors, but I am still facing this error and am out of options. I am also using the latest version of ShellSpec.

I am mocking git commands in my test script, but that is necessary for my tests.

I even checked for the relevant OS type in the setup method:

```bash
# Determine OS type
OS_TYPE=$(uname 2>/dev/null || echo "Unknown")

case "$OS_TYPE" in
    Darwin|Linux)
        TMP_DIR="/tmp"
        ;;
    CYGWIN*|MINGW*|MSYS*)
        if command -v cygpath >/dev/null 2>&1; then
            TMP_DIR="$(cygpath -m "${TEMP:-/tmp}")"
        else
            echo "Error: cygpath not found" >&2
            exit 1
        fi
        ;;
    *)
        echo "Error: Unsupported OS: $OS_TYPE" >&2
        exit 1
        ;;
esac
```

Any guidance is immensely appreciated.
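One thing that may be worth ruling out (a guess, not a diagnosis): if this OS check runs inside a hook, or inside a function called from one, `exit 1` terminates the shell process running it instead of just reporting a failure. Below is the same check written as a function that uses `return`, so the caller sees a non-zero status and decides what to do. `detect_tmp_dir` is a hypothetical name; the logic is otherwise unchanged.

```bash
# detect_tmp_dir: set TMP_DIR for the current platform.
# Uses `return` instead of `exit` so a failure comes back to the caller
# as a non-zero status rather than terminating the shell.
detect_tmp_dir() {
    local os_type
    os_type=$(uname 2>/dev/null || echo "Unknown")
    case $os_type in
        Darwin|Linux)
            TMP_DIR=/tmp
            ;;
        CYGWIN*|MINGW*|MSYS*)
            if command -v cygpath >/dev/null 2>&1; then
                TMP_DIR=$(cygpath -m "${TEMP:-/tmp}")
            else
                echo "Error: cygpath not found" >&2
                return 1
            fi
            ;;
        *)
            echo "Error: Unsupported OS: $os_type" >&2
            return 1
            ;;
    esac
    return 0
}
```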

https://redd.it/1f9s8b4

@r_bash


GitHub - oscarrivera2028/d-coding-companion: A coding companion that can be used to fix bugs in the terminal

https://github.com/oscarrivera2028/d-coding-companion

https://redd.it/1f9u6er

@r_bash


Weird issue with sed hating on equals signs, I think?


Hey all, I've been working on automating username and password updates for a kickstart file, but sed isn't playing nicely with me. The relevant code looks something like this:

```bash
username=hello
password=yeet
sed -i "s/name=(*.) --password=(*.) --/name=$username --password=$password --/" ./packer/ks.cfg
```

The relevant text should go from the first of these lines to the second:

```
user --groups=wheel --name=user --password=kdljdfd --iscrypted --gecos="Rocky User"
user --groups=wheel --name=hello --password=yeet --iscrypted --gecos="Rocky User"
```

After much tinkering, the only thing that seems to be setting this off is the = sign in the code, but I can't seem to find a way to escape the = sign! Pls help!!!
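`=` has no special meaning in sed, so it doesn't need escaping. The pattern is the more likely culprit: `(*.)` is not "any characters" (that would be `.*`), and in sed's default basic regex syntax unescaped parentheses are literal characters, so the expression never matches the line. A minimal sketch, assuming GNU sed (`-i` without a suffix, `-E` for extended regexes) and values that contain no spaces, `/`, `&` or `\`; `set_ks_user` is a hypothetical name.

```bash
# set_ks_user FILE USERNAME PASSWORD
# Rewrites the --name= and --password= values in a kickstart "user" line.
# [^ ]* matches one space-free value; '=' needs no escaping.
set_ks_user() {
    local file=$1 username=$2 password=$3
    sed -i -E "s/--name=[^ ]* --password=[^ ]* /--name=${username} --password=${password} /" "$file"
}

# Hypothetical usage with the values from the post:
#   set_ks_user ./packer/ks.cfg hello yeet
```

Matching `[^ ]*` instead of `.*` keeps the match from running past the end of a single value.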

https://redd.it/1f9whl1

@r_bash


Final noscript to clean /tmp, improvements welcome!


I wanted to get a little more practice in with bash, so (mainly for fun) I sorta reinvented the wheel a little.

*Quick backstory:*

My VPS uses WHM/cPanel, and I don't know if this is a problem strictly with them or if it's universal. But back in the good ol' days, I just had session files in the /tmp/ directory and I could run tmpwatch via cron to clear them out. But a while back, the session files started going to:

```
# 56 is for PHP 5.6, which I still have for a few legacy hosting clients
/tmp/systemd-private-[foo]-ea-php56-php-fpm.service-[bar]/tmp

# 74 is for PHP 7.4, the version used for the majority of the accounts
/tmp/systemd-private-[foo]-ea-php74-php-fpm.service-[bar]/tmp
```

And since \[foo\] and \[bar\] were somewhat random and changed regularly, there was no good way to set up a cron to clean them.

cPanel recommended this one-liner:

```bash
find /tmp/systemd-private*php-fpm.service* -name sess_* ! -mtime -1 -exec rm -f '{}' \;
```

but I don't like the idea of running `rm` via cron, so I built this script as my own alternative.

*So this is what I built:*

My script loops through /tmp and the subdirectories in /tmp, and runs tmpwatch on each of them if necessary.

I've set it to run via crontab at 1am, and if the server load is greater than 3 then it tries again at 2am. If the load is still high, it tries again at 3am, and then after that it gives up. This alone is a pretty big improvement over the cPanel one-liner, because sometimes I would have a high load when it started and then the load would skyrocket!

In theory, cron should email the printf output to the root email address. If you run it from the command line, it'll print those results to the terminal.

I'm open to any suggestions on making it faster or better! Otherwise, maybe it'll help someone else that found themselves in the same position :-)

```bash
#!/bin/bash
# (bash, not sh: the script uses [[ ]], (( )), += and {1..3})

#################################################
# Save as tmpwatch.sh, then upload to /root and set permission to 0755
#
# To run from SSH:
#   bash /root/tmpwatch.sh
#
# To set in crontab from SSH:
#   crontab -e
#   i (to insert)
#   paste or type this to run daily at 1am:
#   0 1 * * * bash /root/tmpwatch.sh
#   Save it by pressing Esc, then type :wq and hit Enter
#
# Crontab Format:
#   minute hour day month day-of-the-week command
#   * means "every"
#################################################

# try to run a max of 3 times; 1am, 2am, and 3am
# you can change the 3 to whatever you prefer, I chose this to avoid high traffic times
for attempts in {1..3}
do
    # only run if server load is < 3
    # you can change the 3 to whatever you prefer, too
    # (load is scaled by 100 because bash arithmetic is integer-only)
    if (( $(awk '{ print int($1 * 100); }' < /proc/loadavg) < (3 * 100) ))
    then
        ### Clean /tmp directory
        # thanks to @ZetaZoid, r/linux4noobs for the "find" command line
        sizeStart=$(nice -n 19 ionice -c 3 find /tmp/ -maxdepth 1 -type f -exec du -b {} + | awk '{sum += $1} END {print sum}')

        # if there are files in the /tmp directory, run tmpwatch to remove them
        # else, move on to the subdirectories
        if [[ -n $sizeStart && $sizeStart -ge 0 ]]
        then
            nice -n 19 ionice -c 3 tmpwatch -m 12 /tmp
            sleep 5
            sizeEnd=$(nice -n 19 ionice -c 3 find /tmp/ -maxdepth 1 -type f -exec du -b {} + | awk '{sum += $1} END {print sum}')
            sizeEnd=${sizeEnd:-0}   # no files left means an empty awk sum

            if (( sizeStart > sizeEnd ))
            then
                body+="tmpwatch -m 12 /tmp ...\n"
                body+="$sizeStart -> $sizeEnd\n\n"
            fi
        fi

        ### Clean /tmp subdirectories
        # glob the paths directly instead of parsing ls; a non-matching glob fails the -d test
        for i in /tmp/systemd-private-*/tmp
        do
            if [[ -d "$i" ]]
            then
                sizeStart=$(nice -n 19 ionice -c 3 du -s "$i" | awk '{print $1}')
                nice -n 19 ionice -c 3 tmpwatch -m 12 "$i"
                sleep 5
                sizeEnd=$(nice -n 19 ionice -c 3 du -s "$i" | awk '{print $1}')

                if (( sizeStart > sizeEnd ))
                then
                    body+="tmpwatch -m 12 $i ...\n"
                    body+="$sizeStart -> $sizeEnd\n\n"
                fi
            fi
        done

        if [[ -n $body ]]
        then
            # %b expands the \n escapes without treating $body as a format string
            printf '%b' "$body"
        fi

        break
    elif (( attempts < 3 ))
    then
        # server load was high, try again in an hour
        # (no point sleeping after the last attempt)
        sleep 3600
    fi
done
```

https://redd.it/1fa8ccc

@r_bash


How to Replace a Line with Another Line, Programmatically?


Hi all,

I would like to write a bash script that takes the file `/etc/ssh/sshd_config` and replaces the line `#Port 22` with the line `Port 5000`.

I would like the match to be a full-line match (e.g. `#Port 22`) and not a partial string in a line (so, for example, the line `##Port 2244` will not be matched and replaced, even though part of it matches).

If there are several ways/programs to do it, please share them; it's nice to learn various ways.

Thank you very much
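Two sketches of a whole-line match. With sed, anchoring the pattern with `^` and `$` means `##Port 2244` cannot match (the `-i` shown is GNU sed syntax; BSD/macOS sed needs `-i ''`). With awk, comparing the whole line `$0` to a string avoids regular expressions entirely. `replace_line` is a hypothetical helper; note that `awk -v` interprets backslash escapes in the values, so strings containing `\` need extra care. Editing a file in `/etc` requires root.

```bash
# sed version: ^ and $ anchor the match to the entire line.
#   sed -i 's/^#Port 22$/Port 5000/' /etc/ssh/sshd_config

# replace_line FILE OLD NEW: replace every line that is exactly OLD with NEW.
replace_line() {
    local file=$1 old=$2 new=$3 tmp rc
    tmp=$(mktemp) || return 1
    # exact string comparison, no regex; all other lines pass through unchanged
    awk -v old="$old" -v new="$new" '{ print ($0 == old ? new : $0) }' "$file" > "$tmp" &&
        cat "$tmp" > "$file"   # copy back in place so the file keeps its owner and permissions
    rc=$?
    rm -f "$tmp"
    return "$rc"
}

# Hypothetical usage:
#   replace_line /etc/ssh/sshd_config '#Port 22' 'Port 5000'
```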

https://redd.it/1falc6z

@r_bash


AWS-RDS Schema shuttle

https://github.com/N1kh1lS1ngh25/aws-rds-schema-shuttle

https://redd.it/1fb00os

@r_bash


A comprehensive suite of shell scripts designed to streamline the process of taking schema backups from AWS RDS instances and uploading them to other AWS RDS instances. This project leverages myloa...

How to show a progress bar with ZSTD???

I'm using the following script to make my archives:

```bash
export ZSTD_CLEVEL=19
export ZSTD_NBTHREADS=8
tar --create --zstd --file 56B0B219B0B20013.tar.zst 56B0B219B0B20013/
```

I wish I could have some kind of progress bar that shows how many files are left before the compression finishes.

https://i.postimg.cc/t4S2DtpX/Screenshot-from-2024-09-07-12-40-04.png

So can somebody help me to solve this dilemma?

I've already searched all over the internet, and nobody seems to really explain this for tar + zstd.
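GNU tar does not draw a progress bar by itself, but with `--verbose` it prints one line per archived member (on stdout when the archive is written to a file), so counting those lines against a precomputed total gives a files-left indicator. A minimal pure-bash sketch; `count_progress` is a hypothetical name, and the total from `find` counts directories as well as files, which should roughly line up with tar's listing. If `pv` is installed, `pv -l -s "$total" > /dev/null` can take the function's place.

```bash
# count_progress TOTAL: read one line per archived member on stdin and redraw
# a "done/total (percent)" counter on stderr.
count_progress() {
    local total=$1 count=0 line
    while IFS= read -r line; do
        count=$(( count + 1 ))
        printf '\r%d/%d files (%d%%)' "$count" "$total" $(( total > 0 ? count * 100 / total : 100 )) >&2
    done
    printf '\n' >&2
}

# Hypothetical usage with the archive from the post (GNU tar and zstd assumed):
#   export ZSTD_CLEVEL=19 ZSTD_NBTHREADS=8
#   dir=56B0B219B0B20013
#   total=$(find "$dir" | wc -l)
#   tar --create --verbose --zstd --file "$dir.tar.zst" "$dir/" | count_progress "$total"
```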

https://redd.it/1fb42qr

@r_bash

I'm using the following noscript to make my archives

export ZSTDCLEVEL=19

export ZSTDNBTHREADS=8

tar --create --zstd --file 56B0B219B0B20013.tar.zst 56B0B219B0B20013/

My wish is if I could have some kind of progress bar to show me - How many files is left before the end of the compression

https://i.postimg.cc/t4S2DtpX/Screenshot-from-2024-09-07-12-40-04.png

So can somebody help me to solve this dilemma?

I already checked all around the internet and it looks like the people can't really explain about tar + zstd.

https://redd.it/1fb42qr

@r_bash

postimg.cc

Screenshot from 2024 09 07 12 40 04 — Postimages

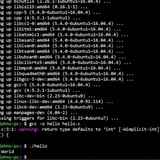

help's Command List is Truncated, Any way to Show it Correctly?


Hi all,

If you run `help`, you get the list of Bash internal commands.

It shows them in 2 columns, which causes some of the longer entries to be truncated, with a ">" at the end.

See here:

https://i.postimg.cc/sDvSNTfD/bh.png

Is there any way to make `help` show them without truncating? Switching to a single-column list could solve it, but `help help` does not show a switch for a single column.

https://redd.it/1fb56id
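A possible workaround, assuming bash 4 or newer: `help` accepts a pattern, and the `-s` option prints only each topic's one-line usage synopsis, so a quoted `*` (a pattern matching every topic) gives a single-column, untruncated list. `-d` prints the short description instead.

```bash
# One line per help topic, e.g. "read: read [-ers] [-a array] ..."
# The '*' is quoted so the shell passes it to help instead of expanding it as a glob.
help -s '*'

# Short one-line descriptions instead of usage synopses:
#   help -d '*'
```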

@r_bash


[UPDATE] forkrun v1.4 released!

I've just released an update (v1.4) for my [forkrun](https://github.com/jkool702/forkrun) tool.

For those not familiar with it, `forkrun` is a ridiculously fast\*\* pure-bash tool for running arbitrary code in parallel. `forkrun`'s syntax is similar to `parallel` and `xargs`, but it's faster than `parallel`, and it is comparable in speed to (perhaps slightly faster than) `xargs -P` while having considerably more options available. And, being written in bash, `forkrun` natively supports bash functions, making it trivially easy to parallelize complicated multi-step tasks by wrapping them in a bash function.

forkrun's v1.4 release adds several new optimizations and a few new features, including:

1. a new flag (`-u`) that allows reading input data from an arbitrary file descriptor instead of stdin

2. the ability to dynamically and automatically figure out how many processor threads (well, how many worker coprocs) to use based on runtime conditions (system cpu usage and coproc read queue length)

3. on x86_64 systems, a custom loadable builtin that calls `lseek` is used, significantly reducing the time it takes forkrun to read data passed on stdin. This brings `forkrun`'s "no load" speed (running a bunch of newlines through `:`) to around 4 million lines per second on my hardware.

Questions? comments? suggestions? let me know!

***

\*\* How fast, you ask?

The other day I ran a simple speed test computing the `sha512sum` of around 596,000 small files with a combined size of around 15 GB. A simple loop through all the files that computed the sha512sum of each one sequentially took 182 minutes (just over 3 hours).

`forkrun` computed all 596k checksums in 2.61 seconds, which is about 4,200x faster.

Soooo.....pretty damn fast :)

https://redd.it/1fbhqf5

@r_bash


Why does the mouse wheel sometimes scroll the shell window's text, and sometimes scroll through past shell commands?

One way to reproduce it is using the "screen" command. The screen session will make the mouse scroll action scroll through past commands I have executed rather than scroll through past text from the output of my commands.
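A likely explanation, offered as a hedge rather than a certainty: screen (like less and vim) switches the terminal to its *alternate screen*, a buffer with no scrollback. In that mode many terminal emulators translate mouse-wheel movement into Up/Down arrow key presses, and bash's readline maps those keys to history navigation. The sketch below uses the standard xterm private mode 1049 escape sequences to enter and leave the alternate screen outside of screen, then shows what bytes the wheel produced; `alt_screen_demo` is a hypothetical name.

```bash
# alt_screen_demo: switch to the alternate screen, collect whatever keys arrive
# until Enter is pressed, switch back, and show what was received.
alt_screen_demo() {
    local reply
    printf '\e[?1049h'   # enter the alternate screen buffer (it has no scrollback)
    printf 'Alternate screen: scroll the mouse wheel a few times, then press Enter.\n'
    IFS= read -rs reply  # -s: no echo; -r: keep backslashes; raw bytes, no readline
    printf '\e[?1049l'   # return to the normal screen and its scrollback
    printf 'Received: %q\n' "$reply"
}

# Run it interactively in a terminal:
#   alt_screen_demo
```

If the terminal converts wheel events into arrow keys, the "Received" line shows arrow-key escape sequences.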

https://redd.it/1fbj2o7

@r_bash


Sed | replacing variable with new value, along with escaping


I'll put the basics of the script down:

```bash
VERSION=$(git show -s --date='format:%Y.%m.%d' --format='%cd+%h' | sed "s/^0*//g; s/\.0*/./g" | sed 's/[][ \~`!@#$%^&*()={}|;:'"'"'",<>/?]/\\&/g')
sed -i "s/VERSION_STRING = .*/VERSION_STRING = \x22${VERSION}\x22/" ./app/version.py
```

All I'm attempting to do is assign a new value to `VERSION`, and then use sed to replace the current value in the file with it. The issue is that if, for some reason, the string contains a special character, the script errors out:

```
sed: -e expression #1, char 37: unknown option to `s'
```

So I came up with a bunch of sed rules after the variable is assigned, and the last one does an escape on the string. This seems extremely over-worked, and I was wondering if there's any way to assign the new variable as a true string, no matter what characters are within the string. Because obviously I want to use this for more than just a version number.

The end result should appear as:

```
VERSION = "1.0.0"
ANOTHER = "My string with @ # $ special %^& chars"
```

I want to use this code to add another variable, such as `ANOTHER`, which will contain special characters. Unless I escape them using the sed rule at the top, which is being used on `VERSION`, it just errors out.

https://redd.it/1fbl8wd
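One way to avoid escaping altogether, sketched here as a swapped-in technique rather than a sed fix: pass the value to awk through the environment and read it with `ENVIRON`, so neither sed's `s///` syntax nor `awk -v` escape processing ever sees it. `set_py_var` is a hypothetical helper; it assumes the value contains no double quotes, backslashes or newlines (those would need Python-level escaping), and `VERSION` should then be built without the final escaping `sed`.

```bash
# set_py_var FILE NAME VALUE
# Rewrites the line that starts with `NAME = ` as: NAME = "VALUE".
# VALUE reaches awk via the environment, so none of its characters are special.
set_py_var() {
    local file=$1 tmp rc
    tmp=$(mktemp) || return 1
    NAME=$2 VALUE=$3 awk '
        index($0, ENVIRON["NAME"] " = ") == 1 {
            print ENVIRON["NAME"] " = \"" ENVIRON["VALUE"] "\""
            next
        }
        { print }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"
    rc=$?
    rm -f "$tmp"
    return "$rc"
}

# Hypothetical usage with the file from the post:
#   set_py_var ./app/version.py VERSION_STRING "$VERSION"
#   set_py_var ./app/version.py ANOTHER 'My string with @ # $ special %^& chars'
```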

@r_bash


Books that dive into applications of bash like "data science at the command line", "cyber ops with bash" etc?

PS: I am learning programming by solving problems/exercises. I want to learn bash (I am familiar with the Linux command line); however, I am hesitant to purchase the Data Science at the Command Line book. Although it's free on the author's website, physical books hit different.

I am from Nepal.

https://redd.it/1fbo9em

@r_bash


Is it better to loop over a command, or create a separate array that's equal to the results of that command?

This is how I do a daily backup of MySQL:

```bash
for DB in $(mysql -e 'show databases' -s --skip-column-names)
do
    mysqldump --single-transaction --quick "$DB" | gzip > "/backup/$DB.sql.gz"
done
```

I have 122 databases on the server. So does this run a MySQL query 122 times to get the results of "show databases" each time?

If so, is it better/faster to do something like this (just typed for this post, not tested)?

```bash
databases=$(mysql -e 'show databases' -s --skip-column-names)

for DB in ${databases[@]}
do
    mysqldump --single-transaction --quick "$DB" | gzip > "/backup/$DB.sql.gz"
done
```
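To the first question: no. In `for DB in $(...)` the command substitution runs once, before the loop starts, and the loop then iterates over the words it produced, so both versions query MySQL only once. (`${databases[@]}` on a plain string variable simply expands to that string.) If you do want the list in a variable, an array filled by `mapfile` stores one database name per element without relying on word splitting. A sketch with a hypothetical function name:

```bash
# backup_all_dbs DIR: dump each database to DIR/NAME.sql.gz.
# mysql runs once; mapfile stores one name per array element.
backup_all_dbs() {
    local dir=$1 db
    local -a databases
    mapfile -t databases < <(mysql -e 'show databases' -s --skip-column-names)
    for db in "${databases[@]}"; do
        mysqldump --single-transaction --quick "$db" | gzip > "$dir/$db.sql.gz"
    done
}

# Hypothetical usage:
#   backup_all_dbs /backup
```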

https://redd.it/1fc6e0z

@r_bash
